- Paper 1: Jigsaw Clustering for Unsupervised Visual Representation Learning

- Paper 2: Self-supervised Motion Learning from Static Images

- Paper 3: Self-supervised Video Representation Learning by Context and Motion Decoupling

- Paper 4: Skip-convolutions for Efficient Video Processing

- Paper 5: Temporal Query Networks for Fine-grained Video Understanding

- Paper 6: Unsupervised disentanglement of linear-encoded facial semantics

- Paper 7:Bi-GCN: Binary Graph Convolutional Network

- Paper 8: An Attractor-Guided Neural Networks for Skeleton-Based Human Motion Prediction

- Paper 9: Cascade Graph Neural Networks for RGB-D Salient Object Detection

- Paper 10: Coarse-Fine Networks for Temporal Activity Detection in Videos

- Paper 11: CoCoNets: Continuous Contrastive 3D Scene Representations

- Paper 12: CutPaste: Self-Supervised Learning for Anomaly Detection and Localization

- Paper 13: Discriminative Latent Semantic Graph for Video Captioning

- Paper 14: Enhancing Self-supervised Video Representation Learning via Multi-level Feature Optimization

- Paper 15: Exploring simple siamese representation learning

- Paper 16: GiT: Graph Interactive Transformer for Vehicle Re-identification

- Paper 17: Graph-Time Convolutional Neural Networks

- Paper 18: Graphzoom: A multi-level spectral approach for accurate and scalable graph embedding

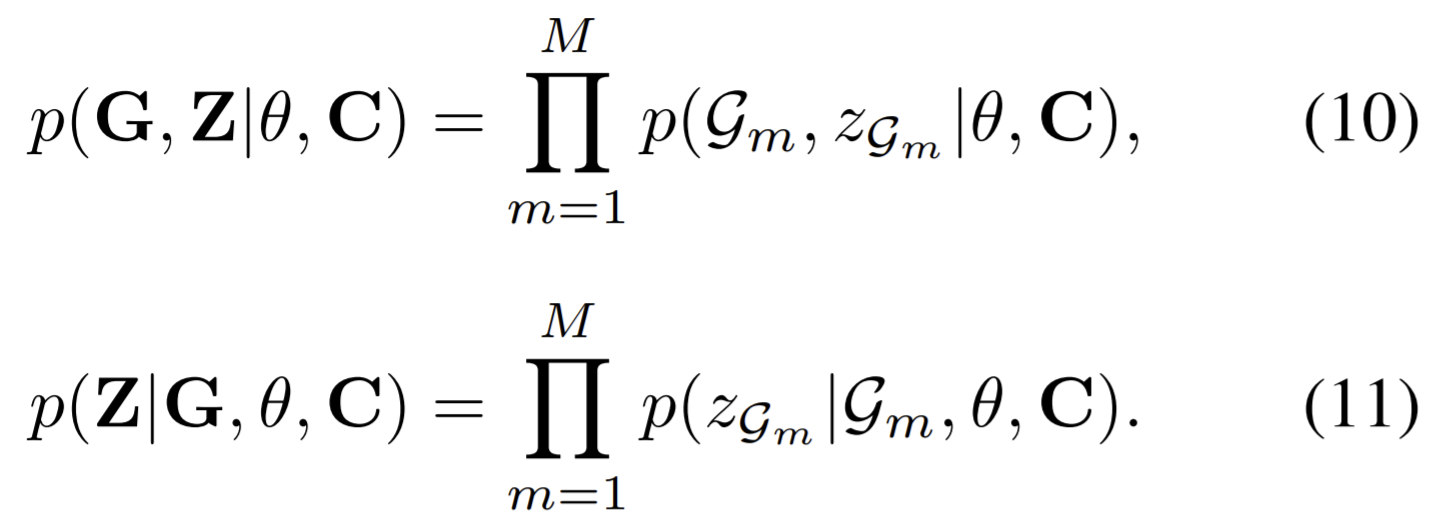

- Paper 19: Group Contrastive Self-Supervised Learning on Graphs

- Paper 20: Homophily outlier detection in non-IID categorical data

- Paper 21: Hyperparameter-free and Explainable Whole Graph Embedding

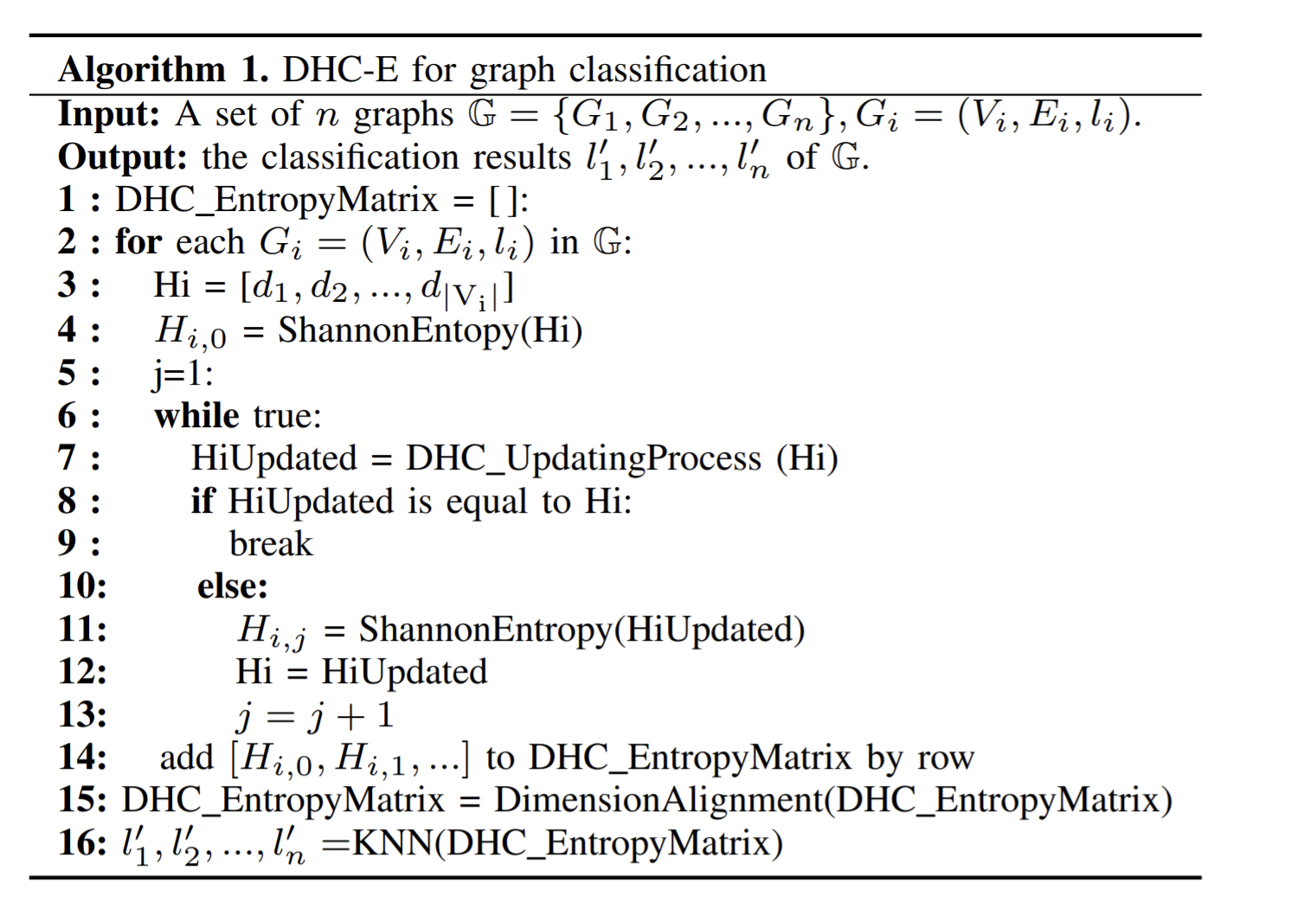

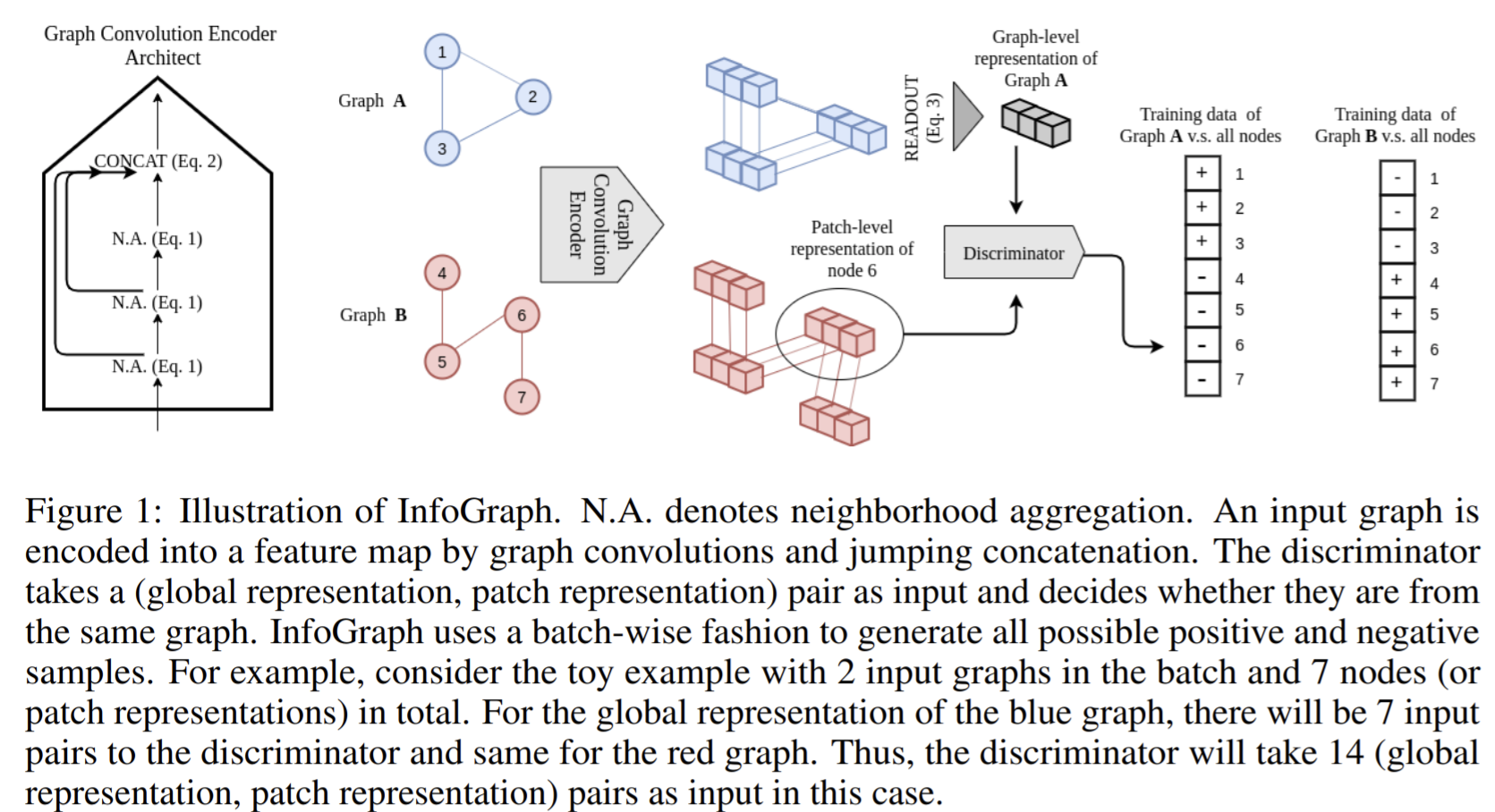

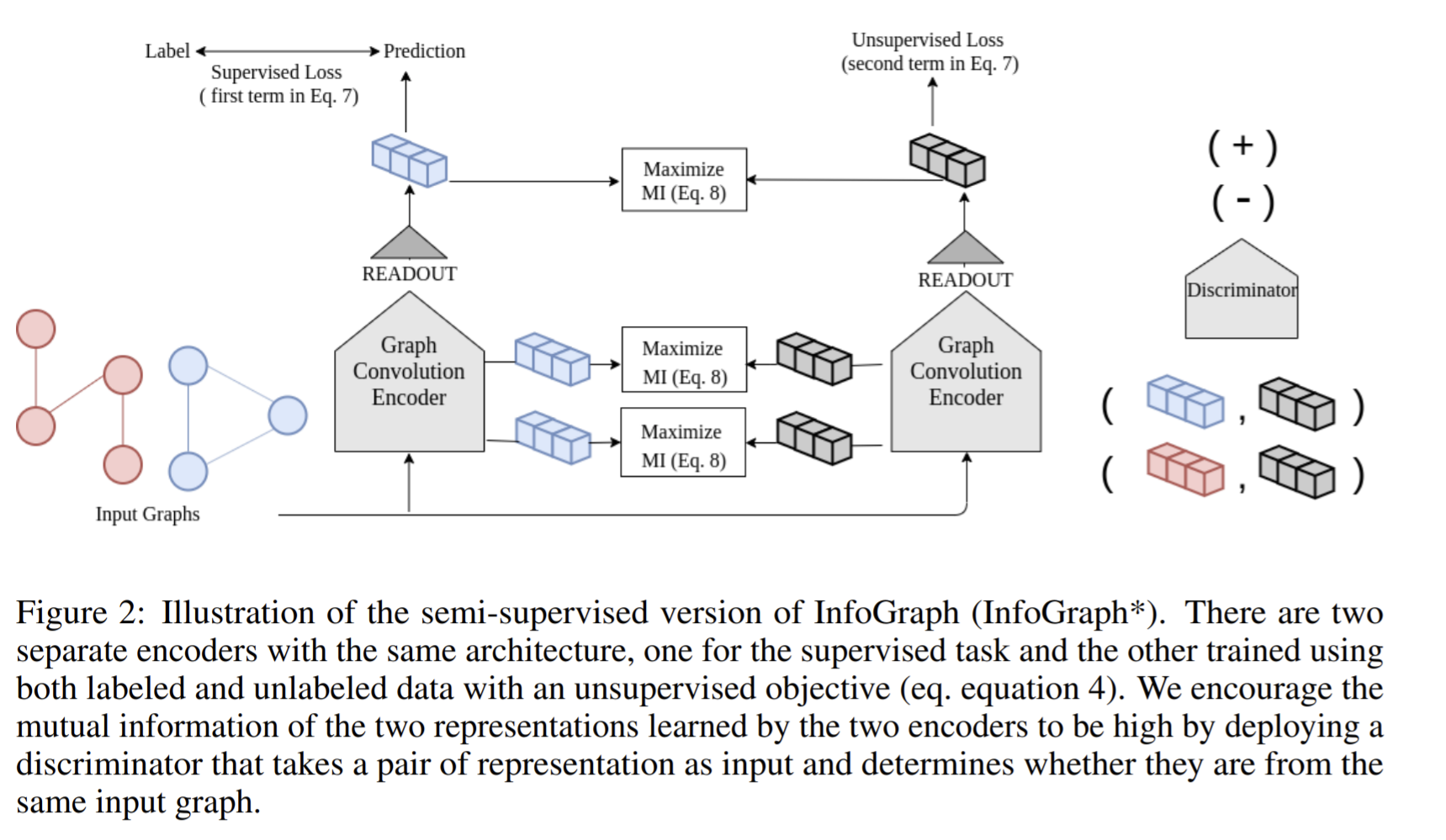

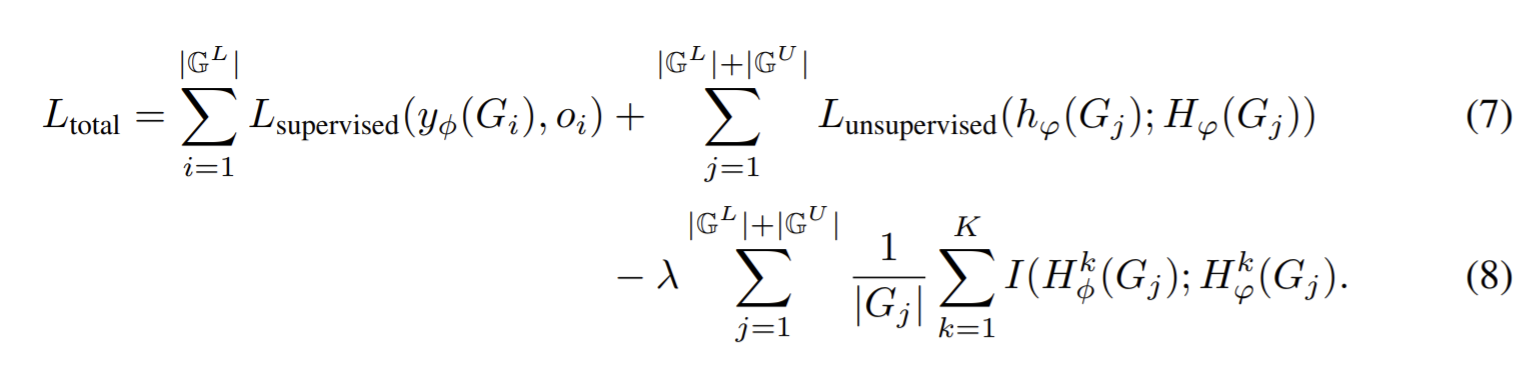

- Paper 22: Infograph: Unsupervised and semi-supervised graph-level representation learning via mutual information maximization

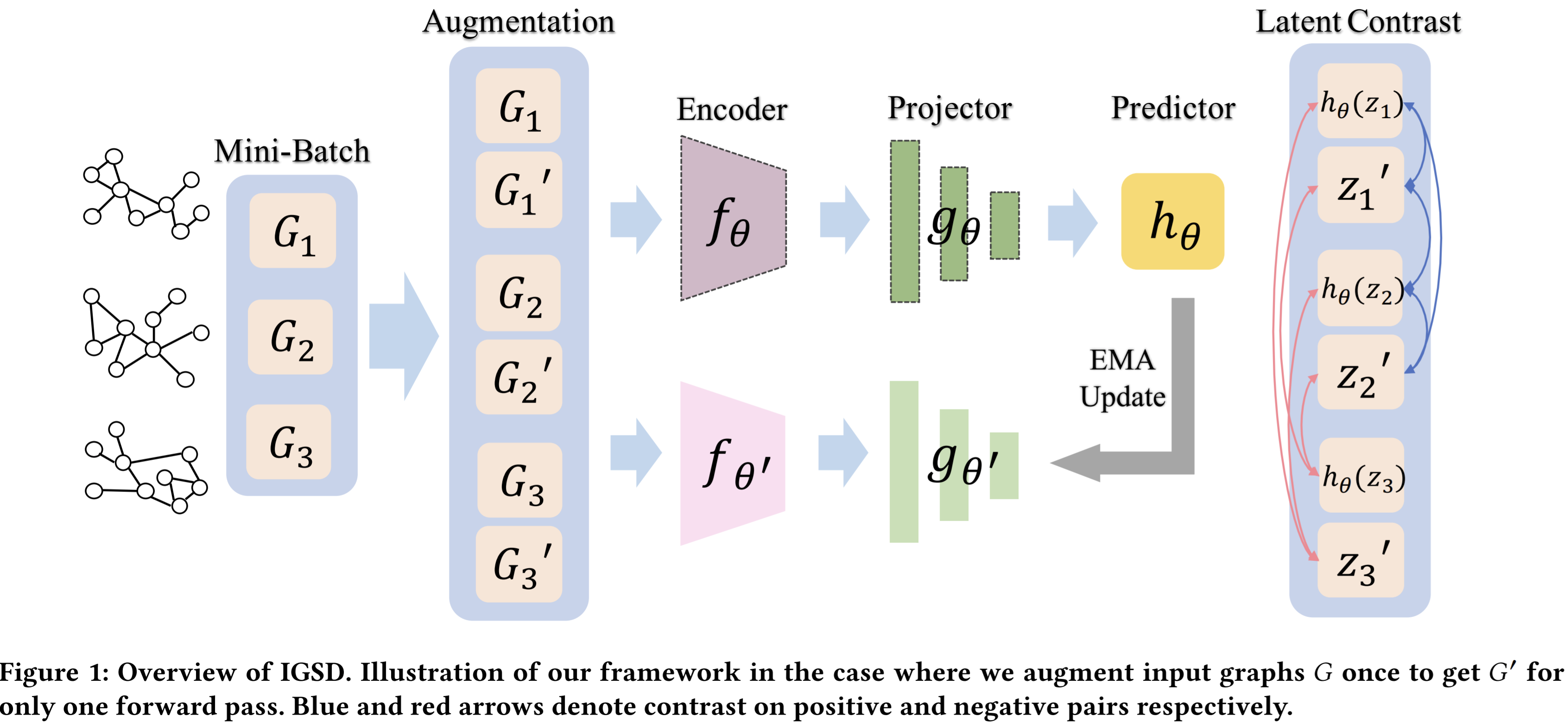

- Paper 23: Iterative graph self-distillation

- Paper 24: Learning by Aligning Videos in Time

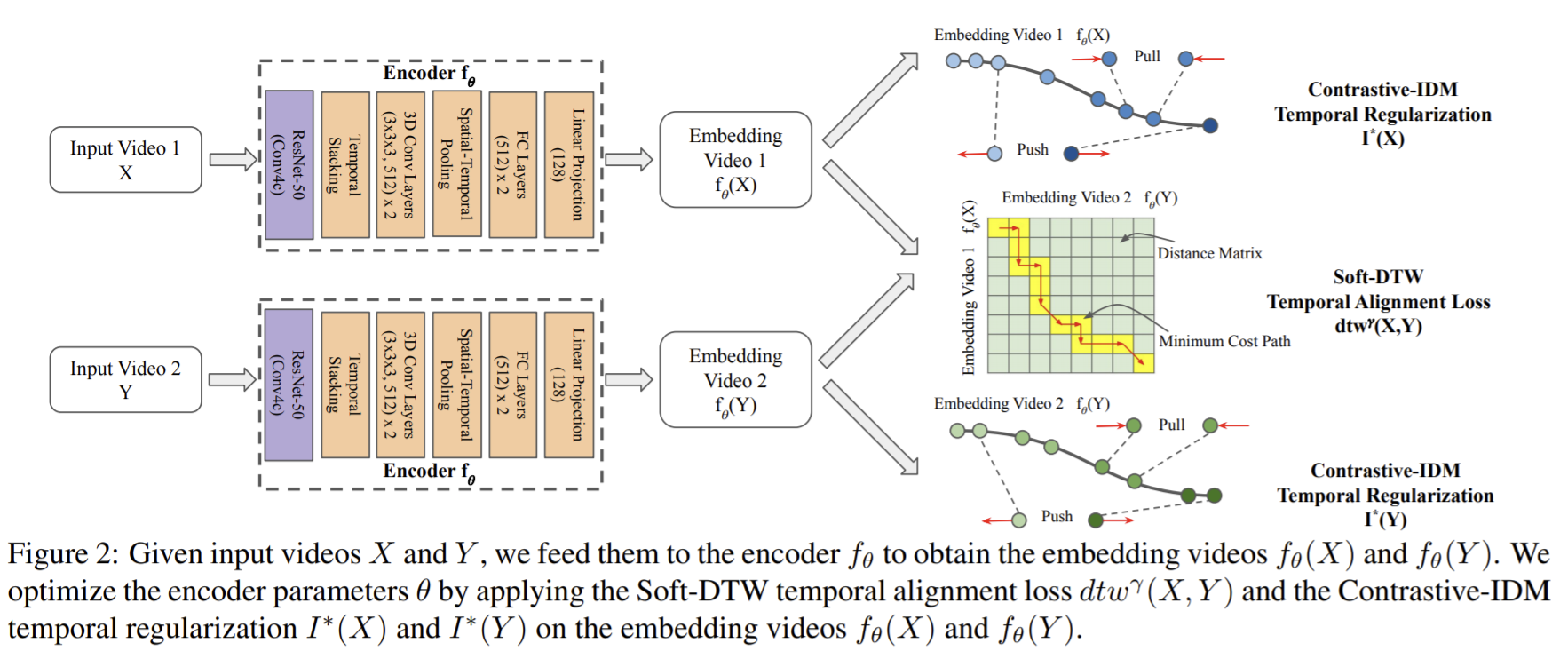

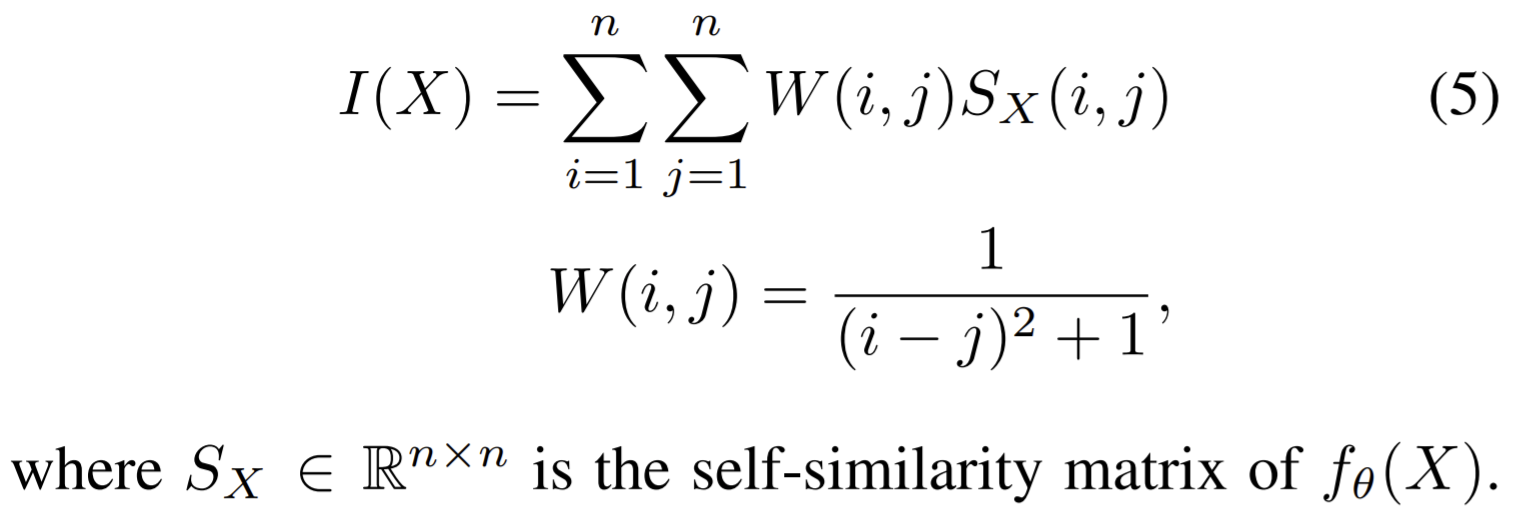

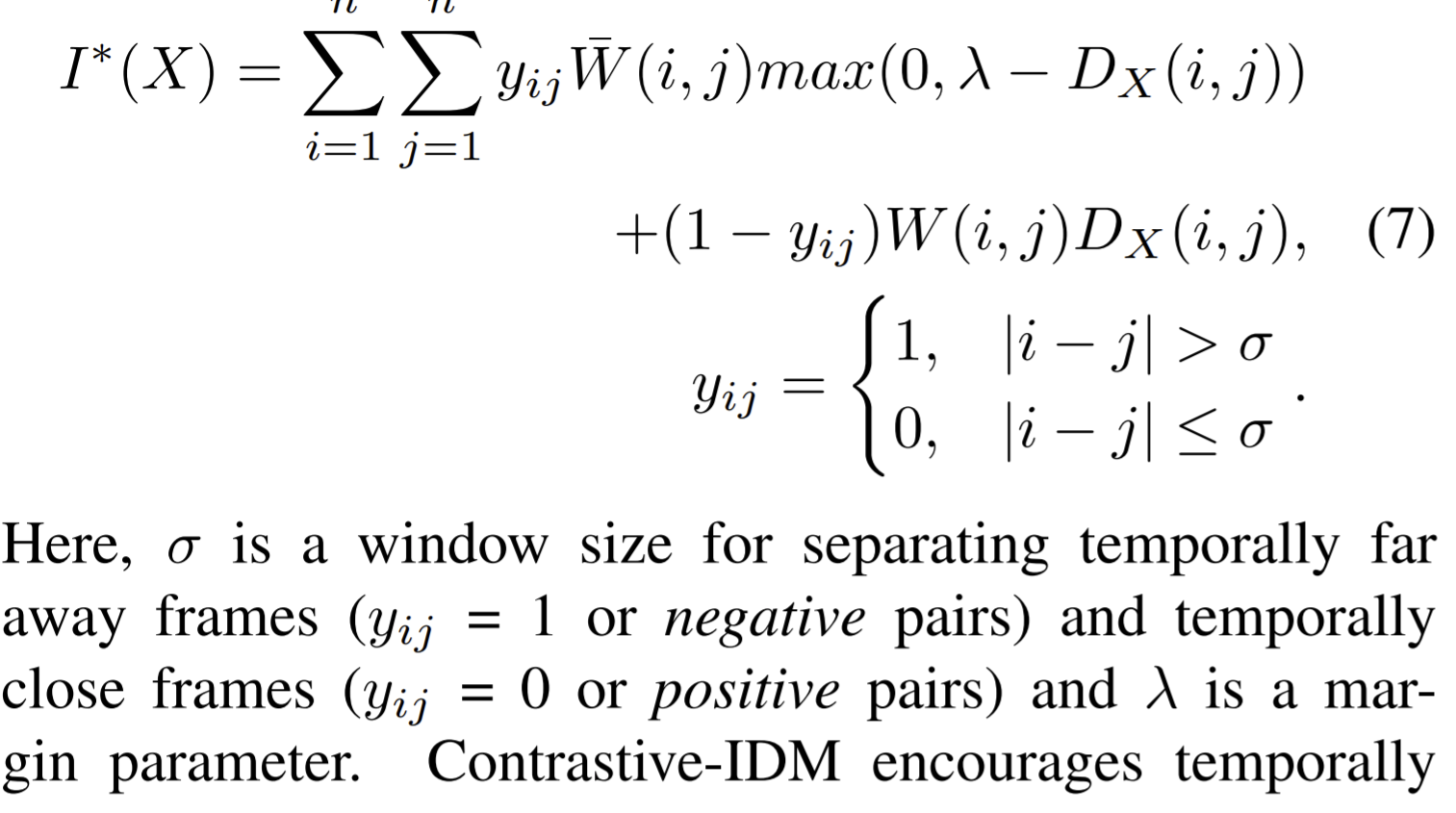

- Paper 25: Learning graph representation by aggregating subgraphs via mutual information maximization

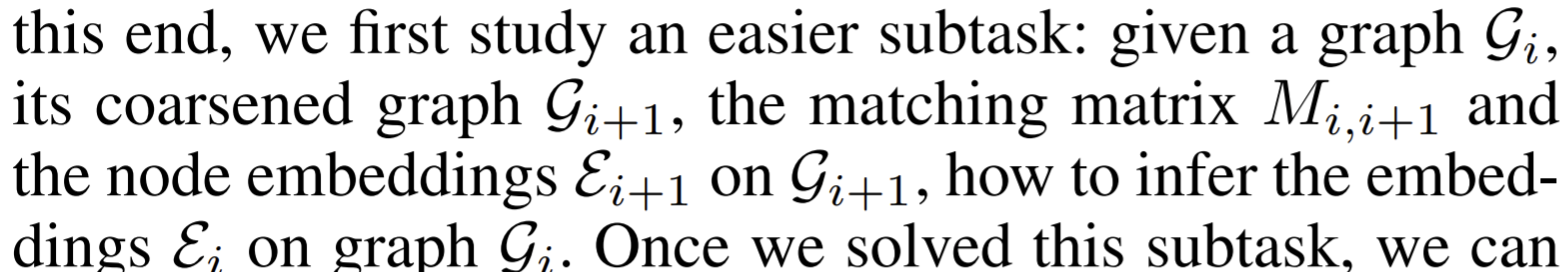

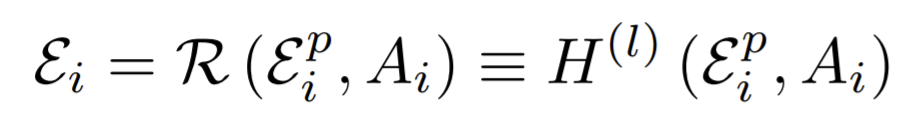

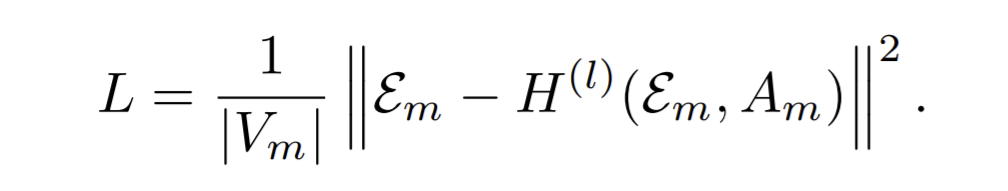

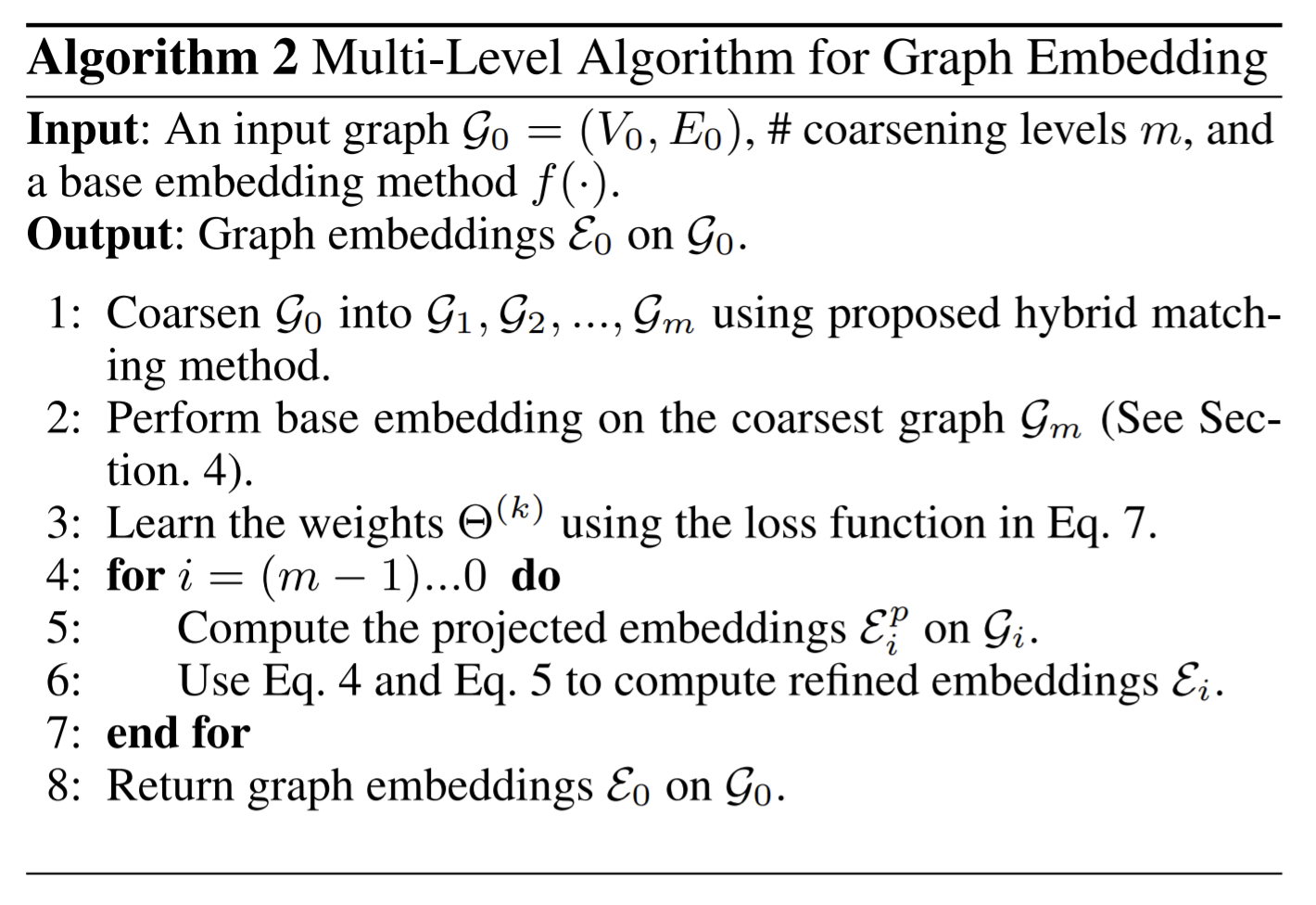

- Paper 26: Mile: A multi-level framework for scalable graph embedding

- Paper 27: Missing Data Estimation in Temporal Multilayer Position-aware Graph Neural Network (TMP-GNN)

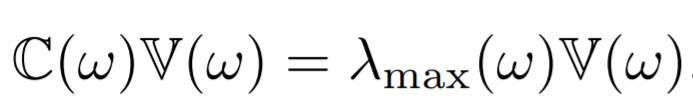

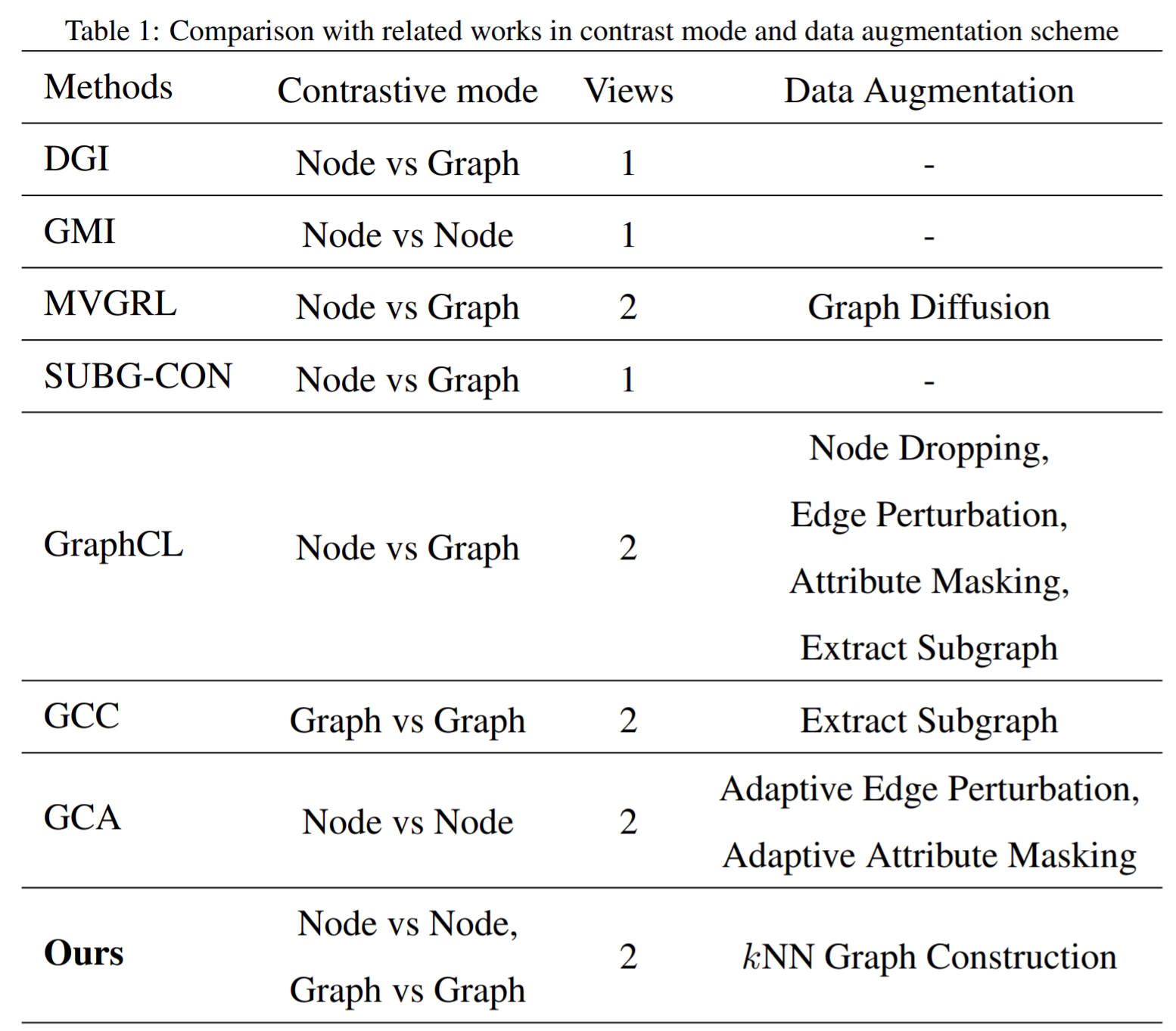

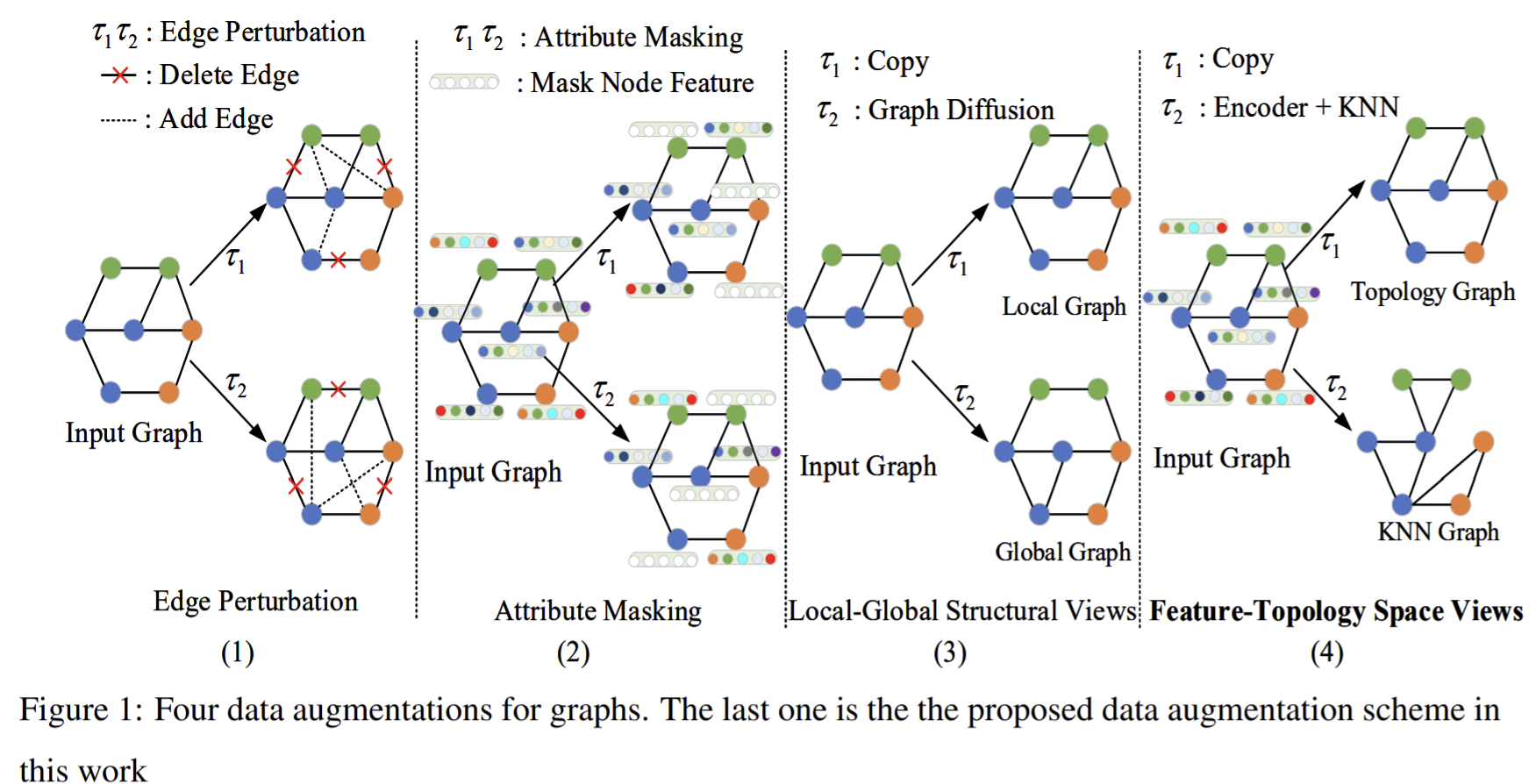

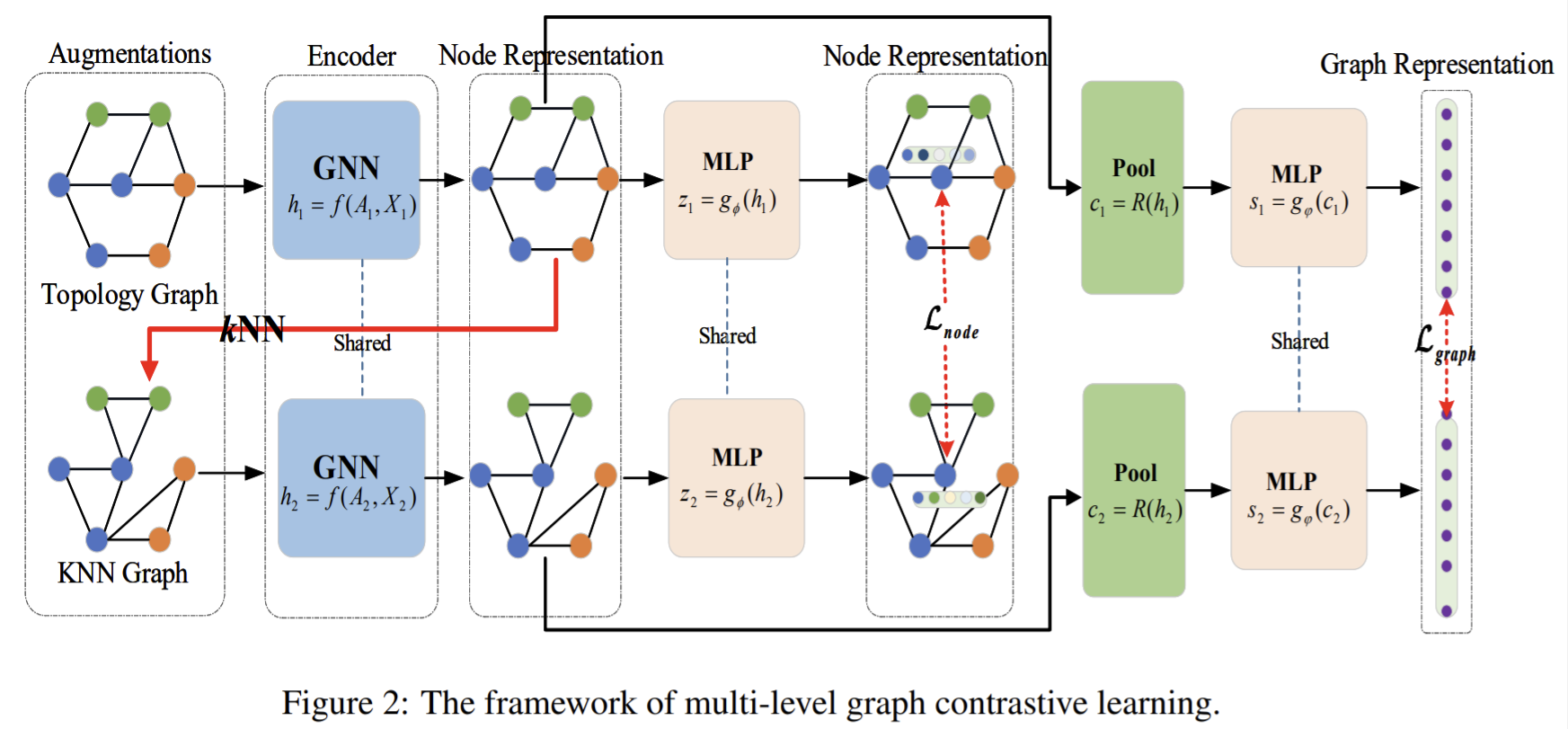

- Paper 28: Multi-Level Graph Contrastive Learning

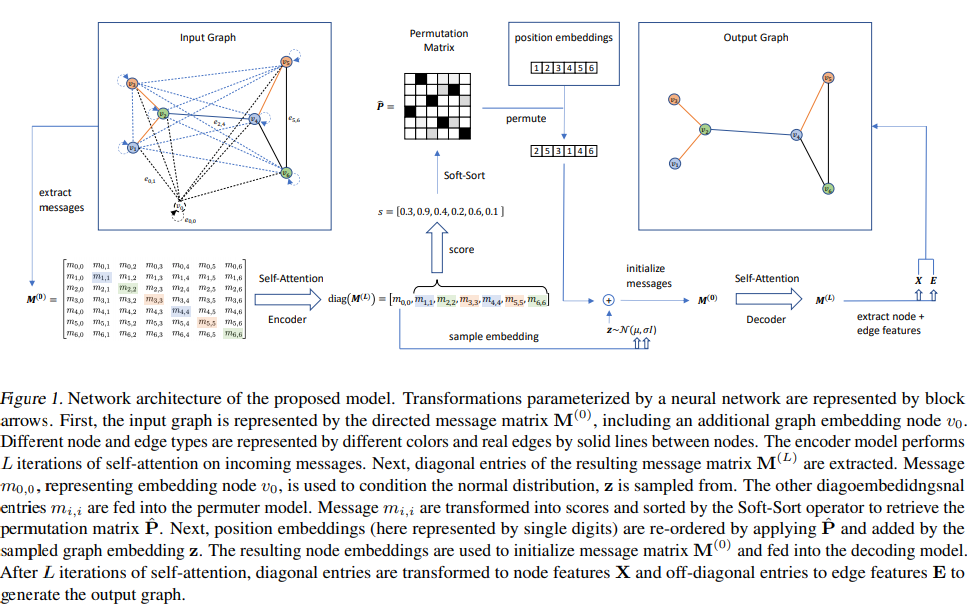

- Paper 29: Permutation-Invariant Variational Autoencoder for Graph-Level Representation Learning

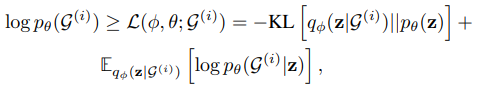

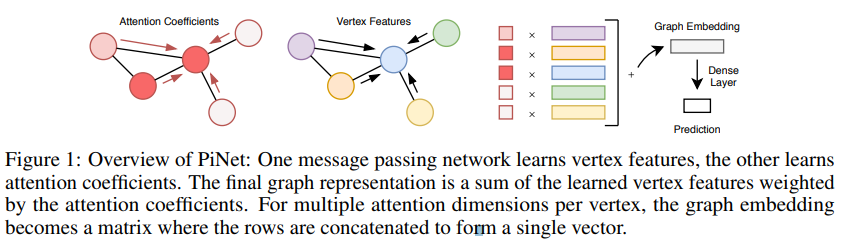

- Paper 30: PiNet: Attention Pooling for Graph Classification

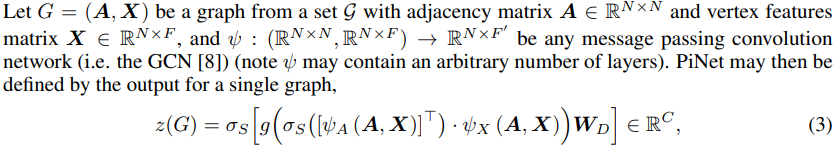

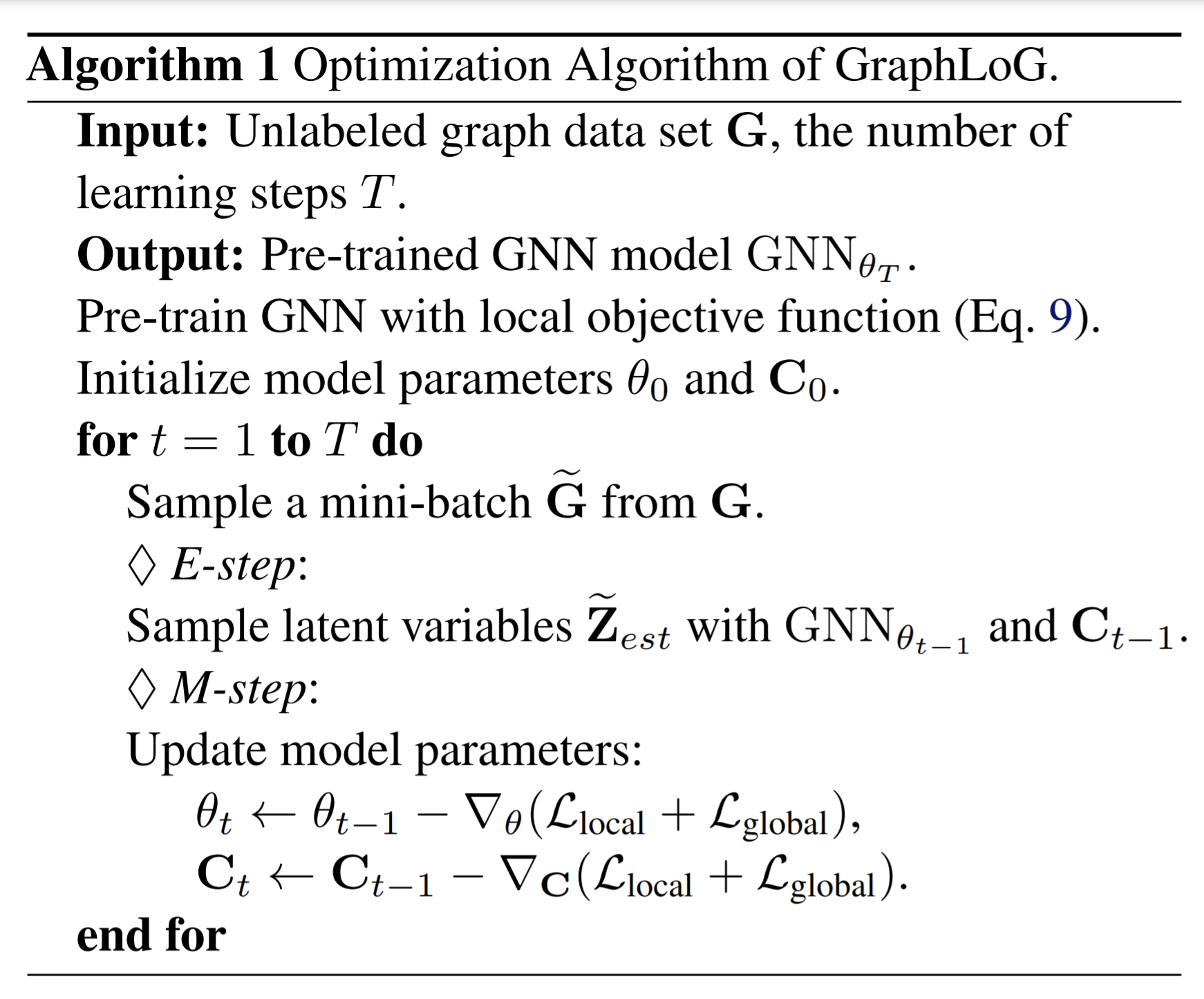

- Paper 31: Self-supervised Graph-level Representation Learning with Local and Global Structure

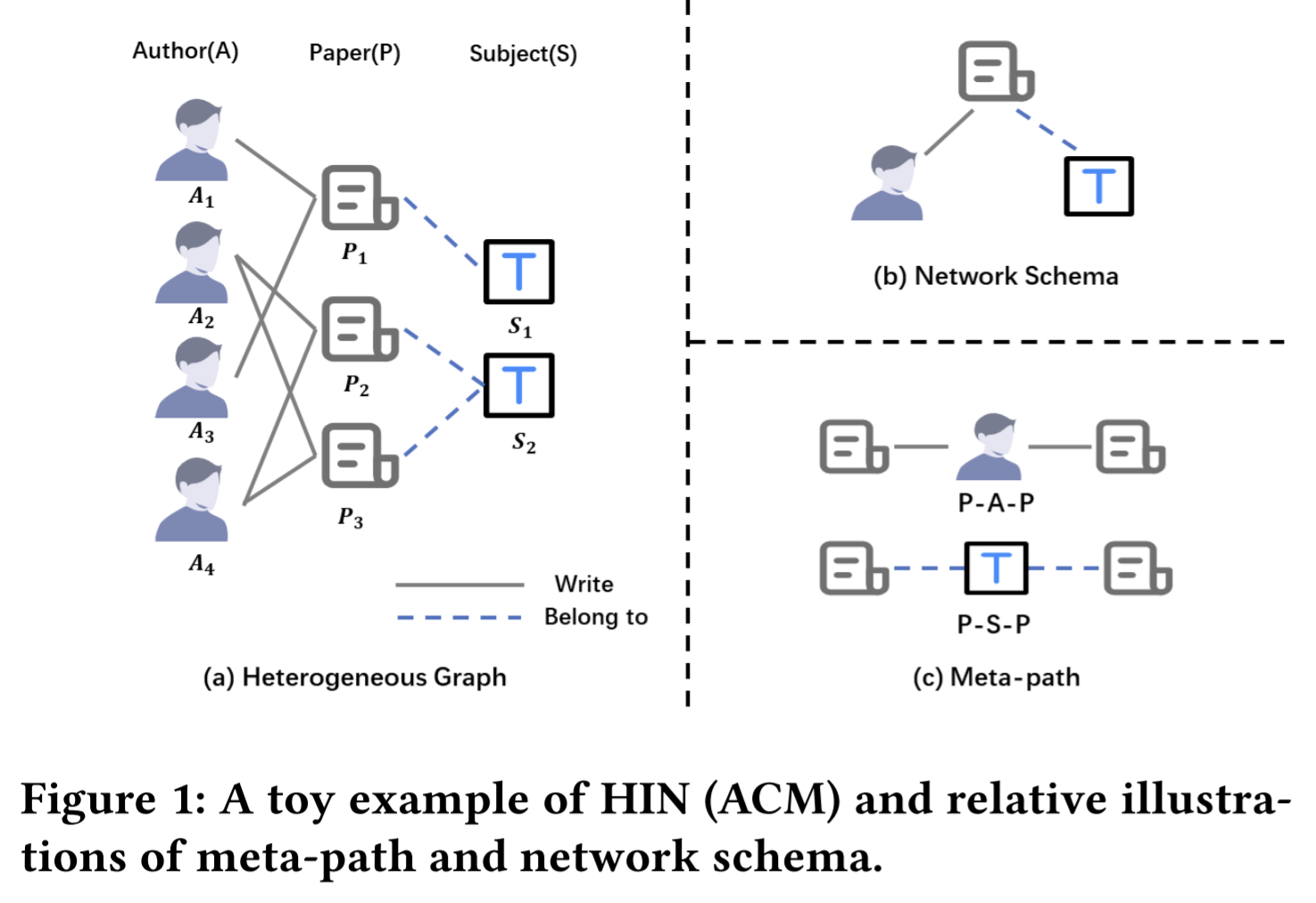

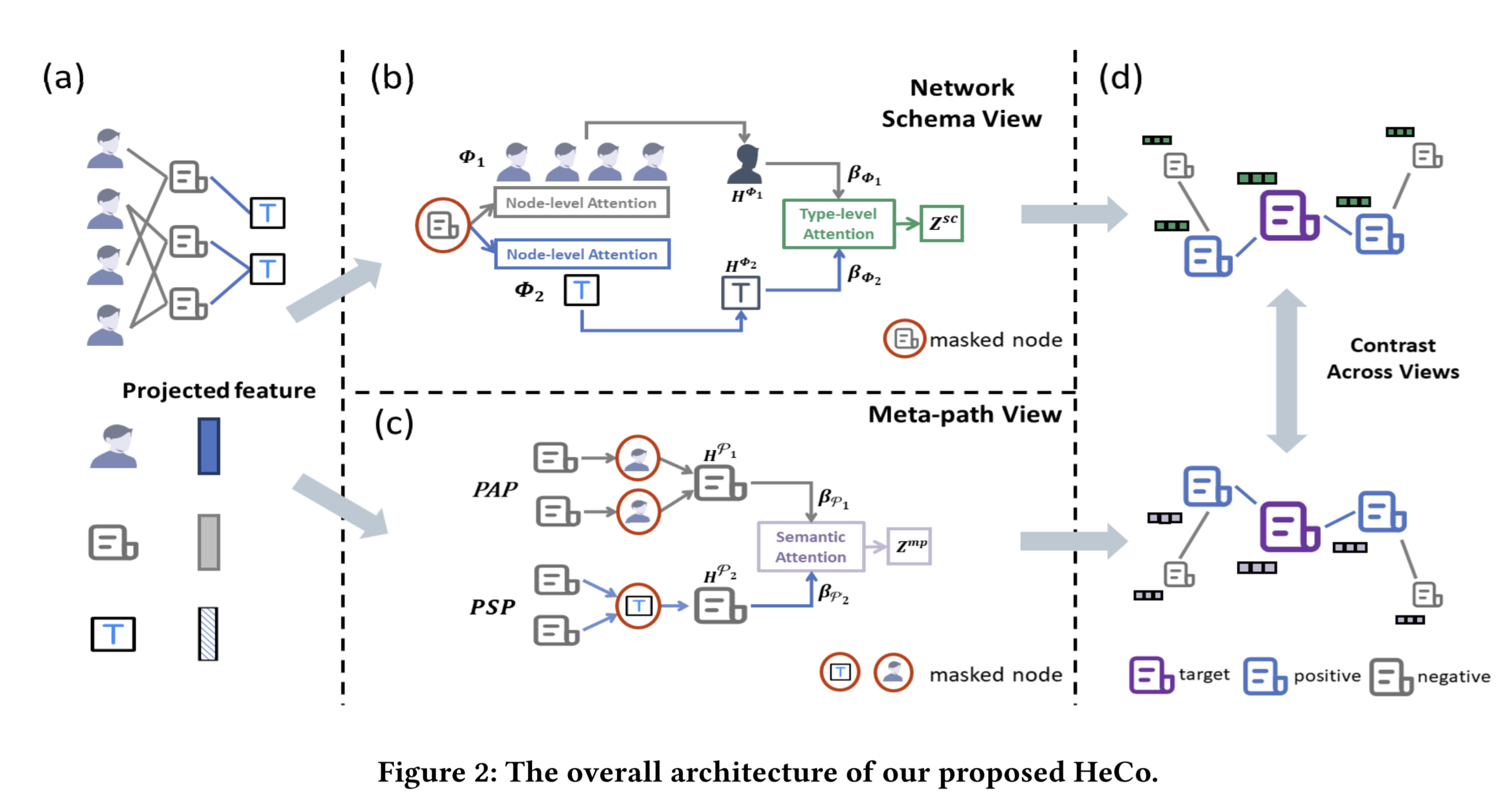

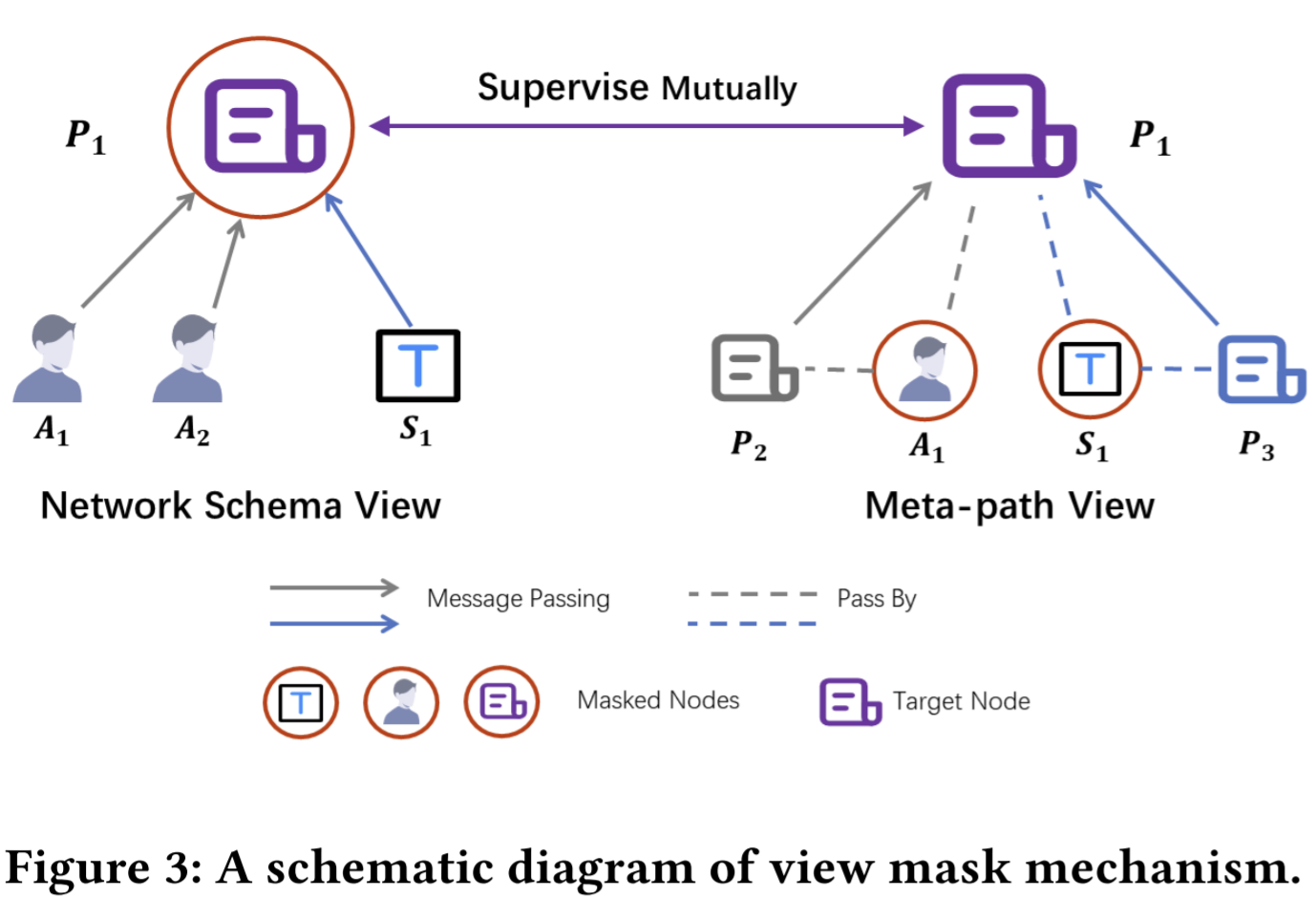

- Paper 32: Self-supervised Heterogeneous Graph Neural Network with Co-contrastive Learning

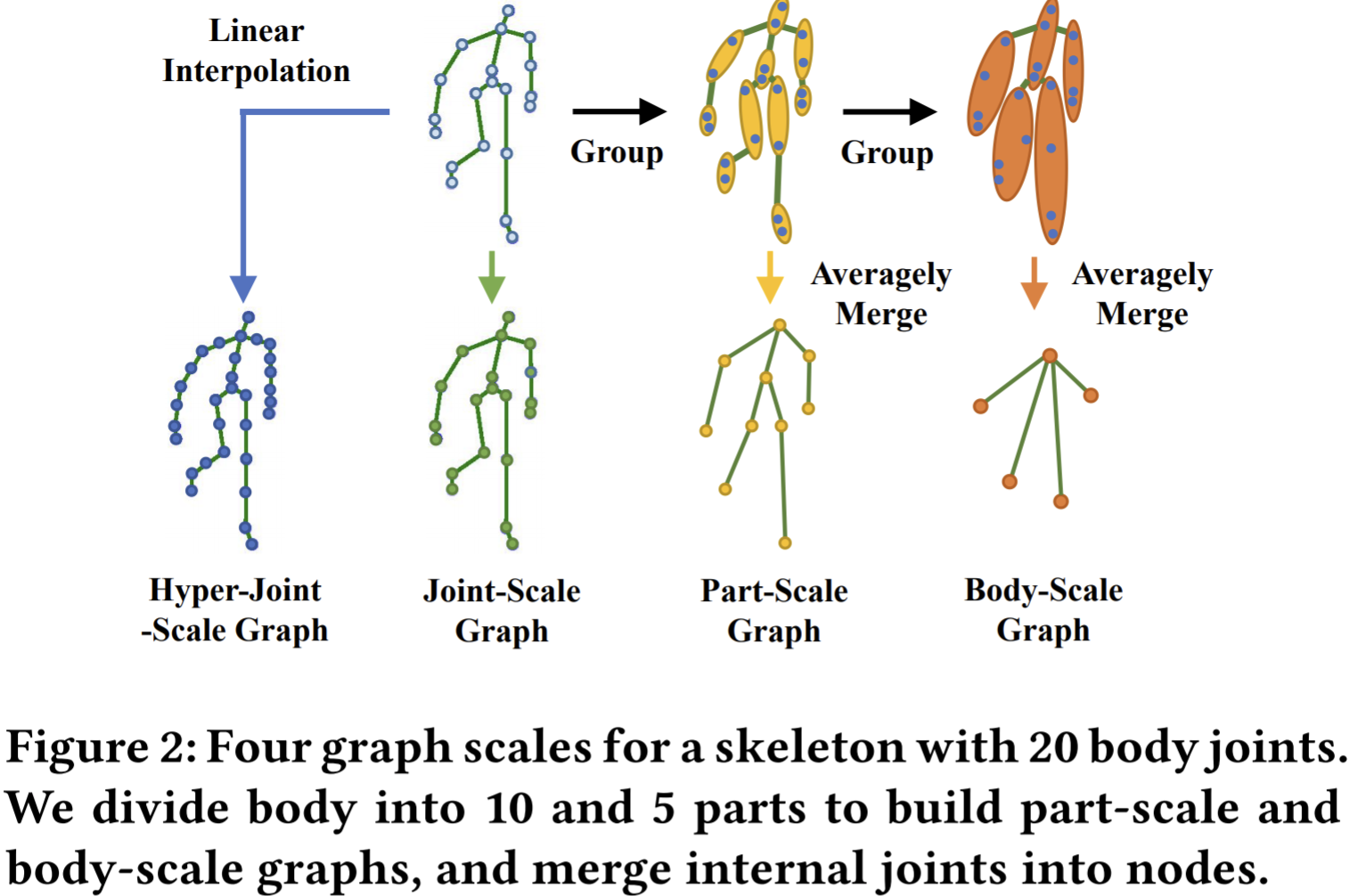

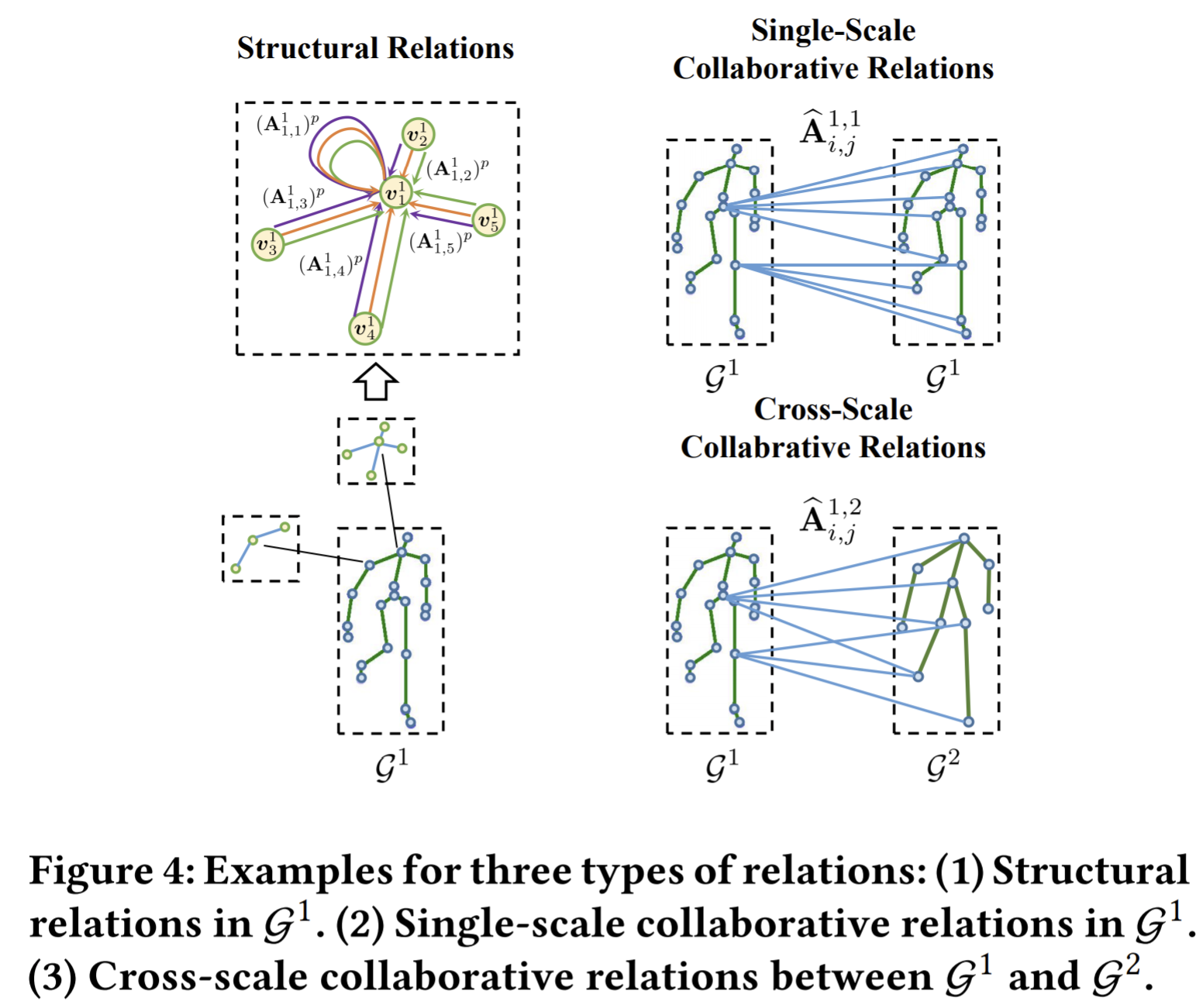

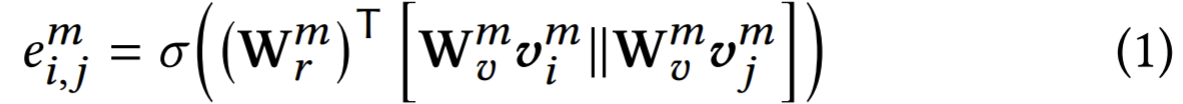

- Paper 33: SM-SGE: A Self-Supervised Multi-Scale Skeleton Graph Encoding Framework for Person Re-Identification

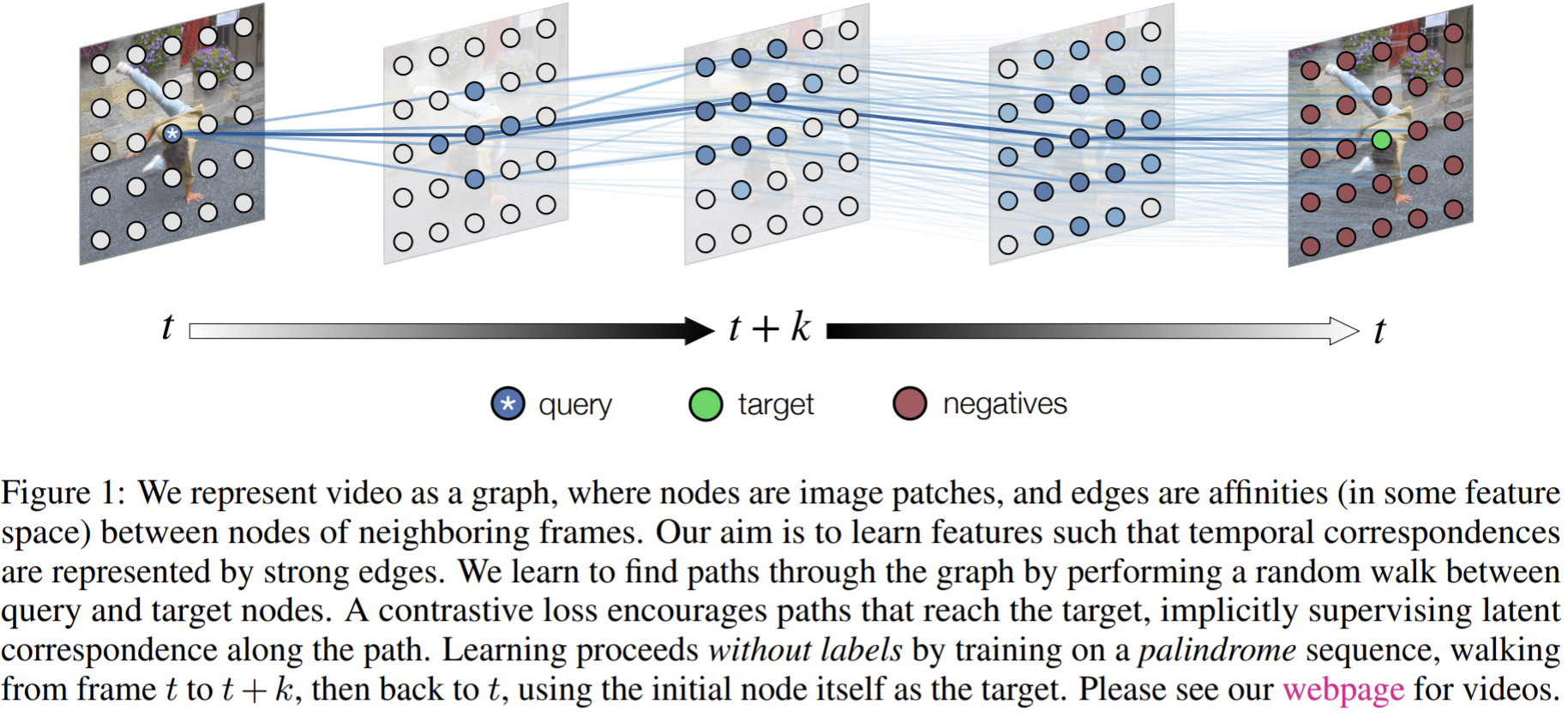

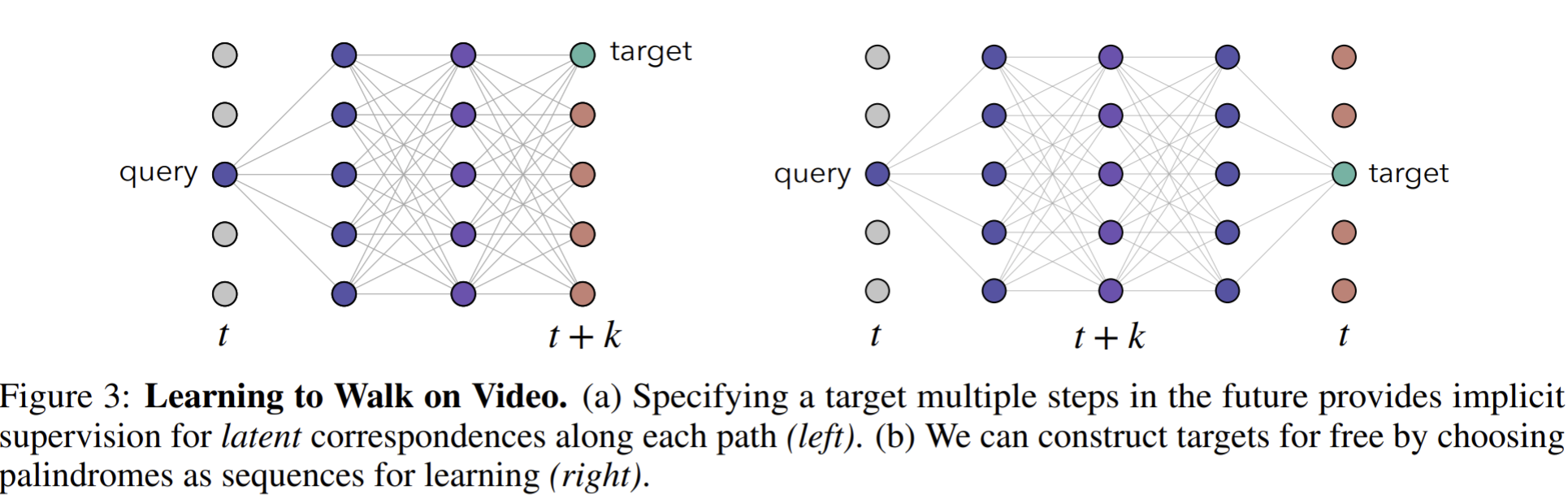

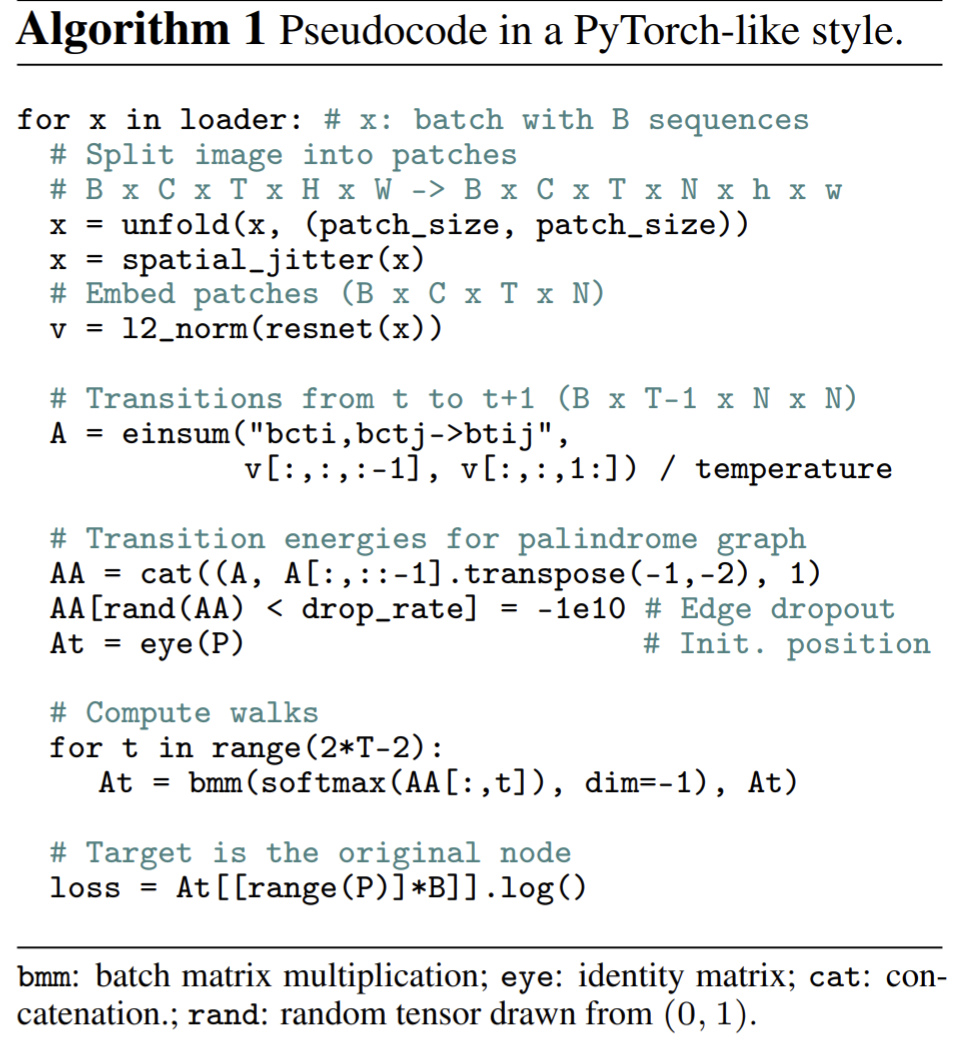

- Paper 34: Space-time correspondence as a contrastive random walk

- Paper 35: Spatially consistent representation learning

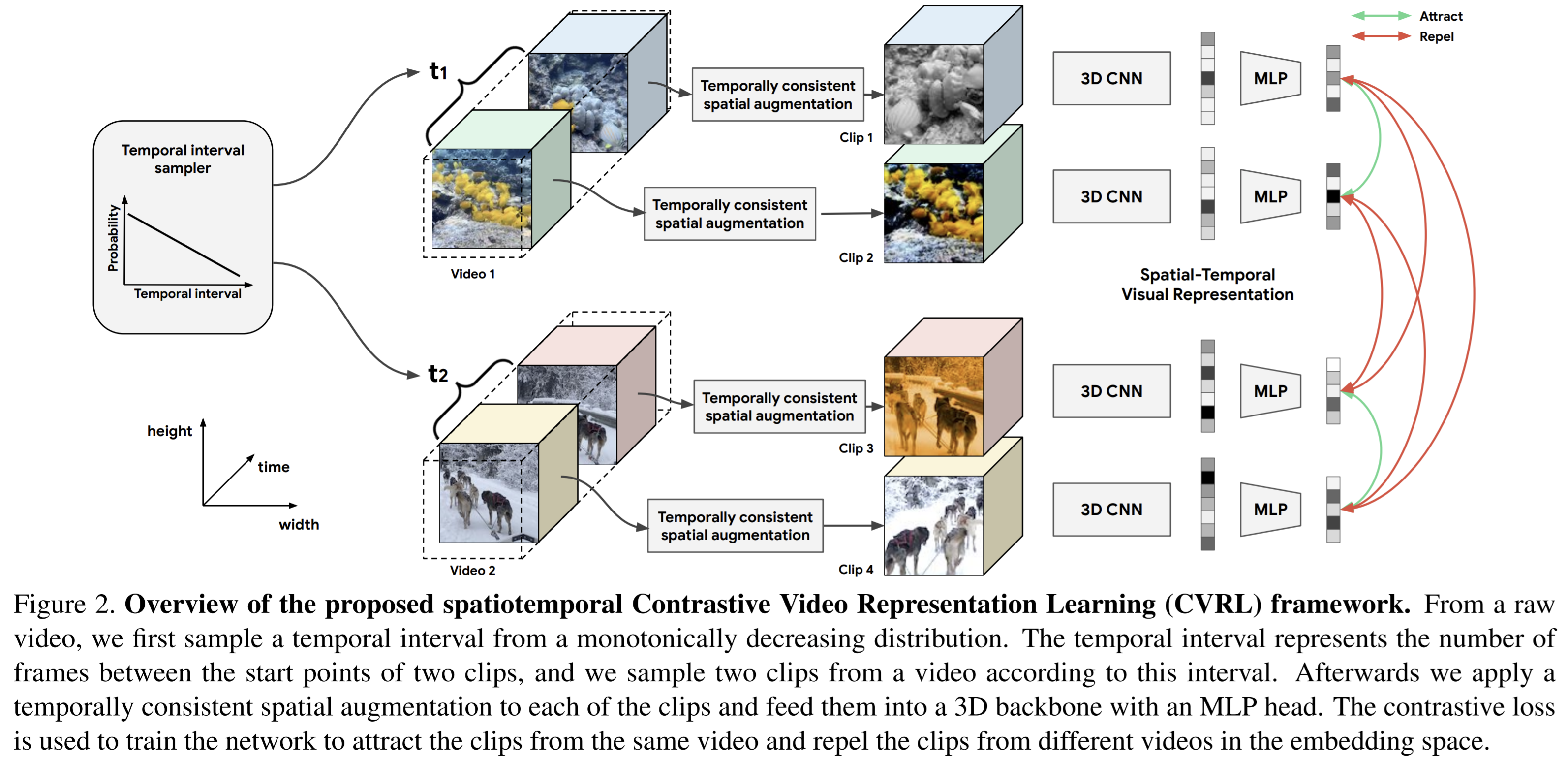

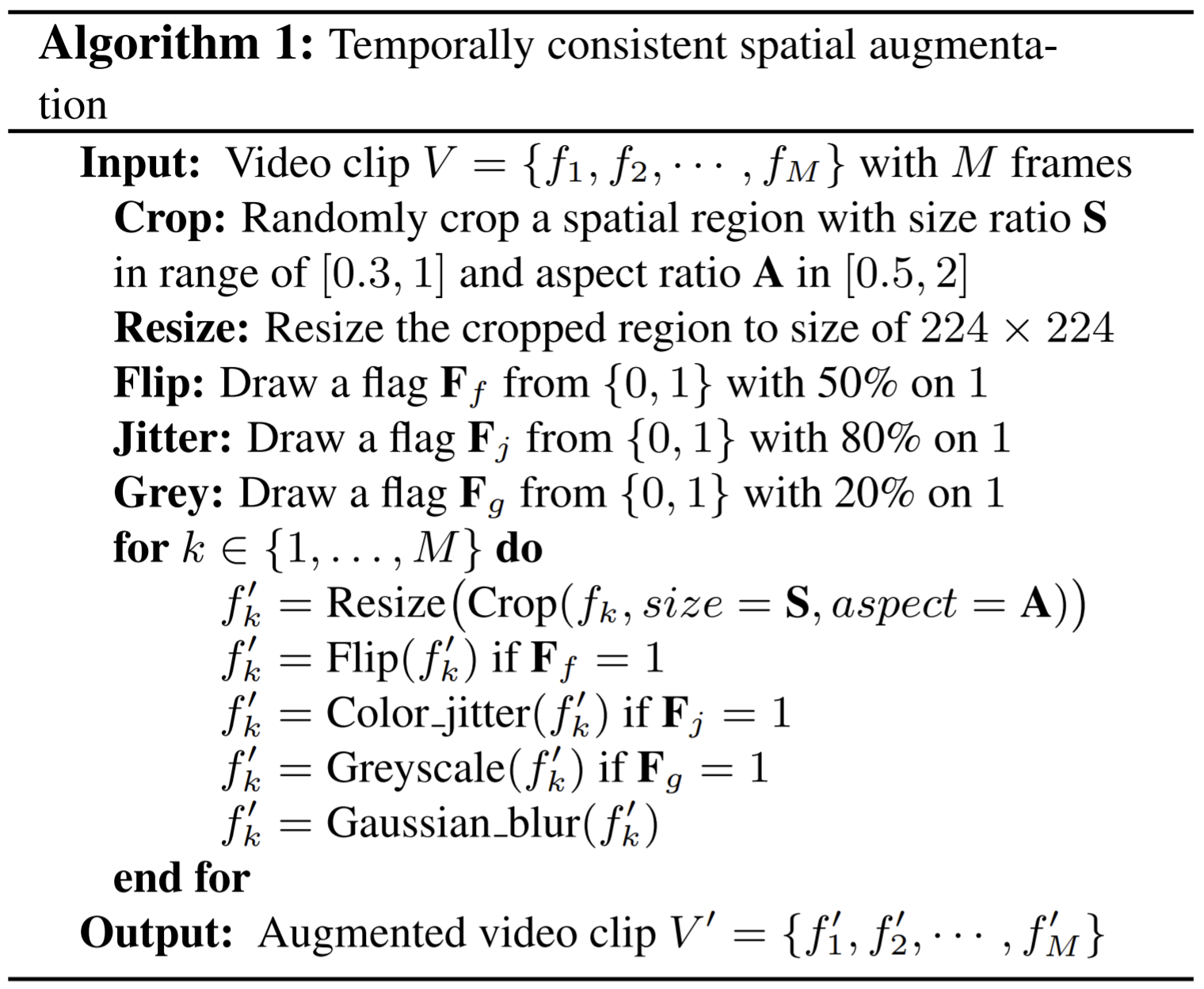

- Paper 36: Spatiotemporal contrastive video representation learning

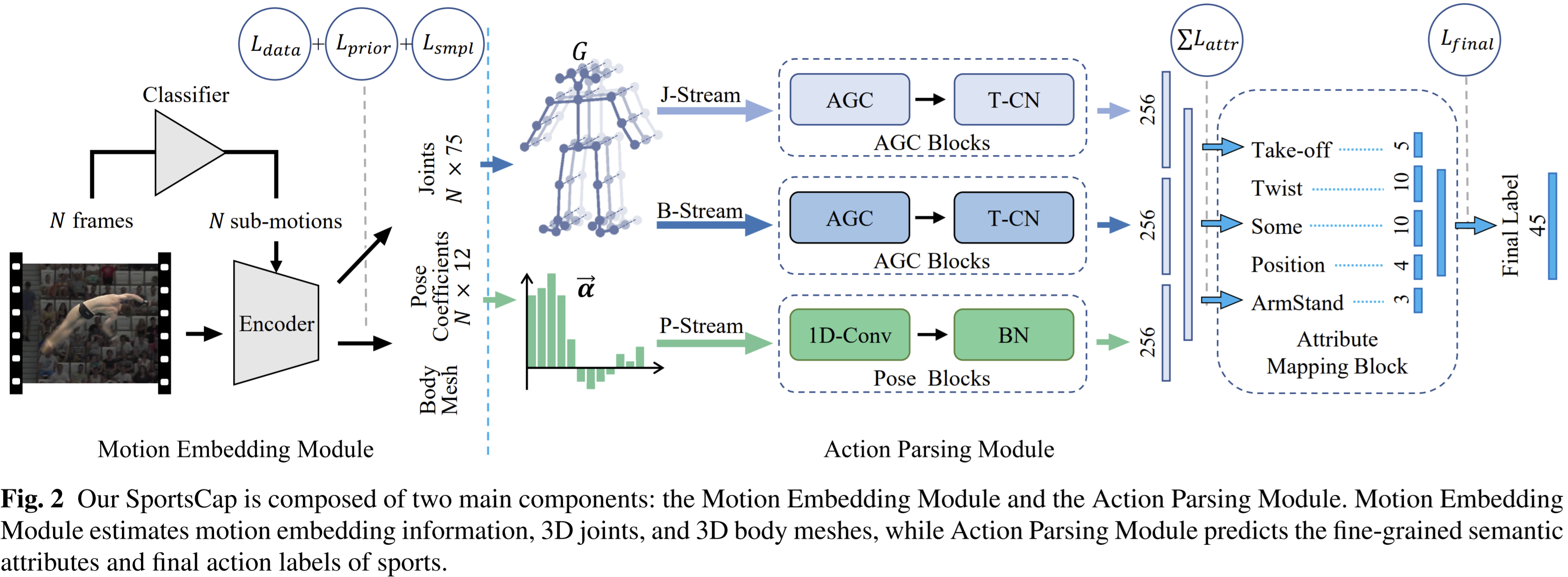

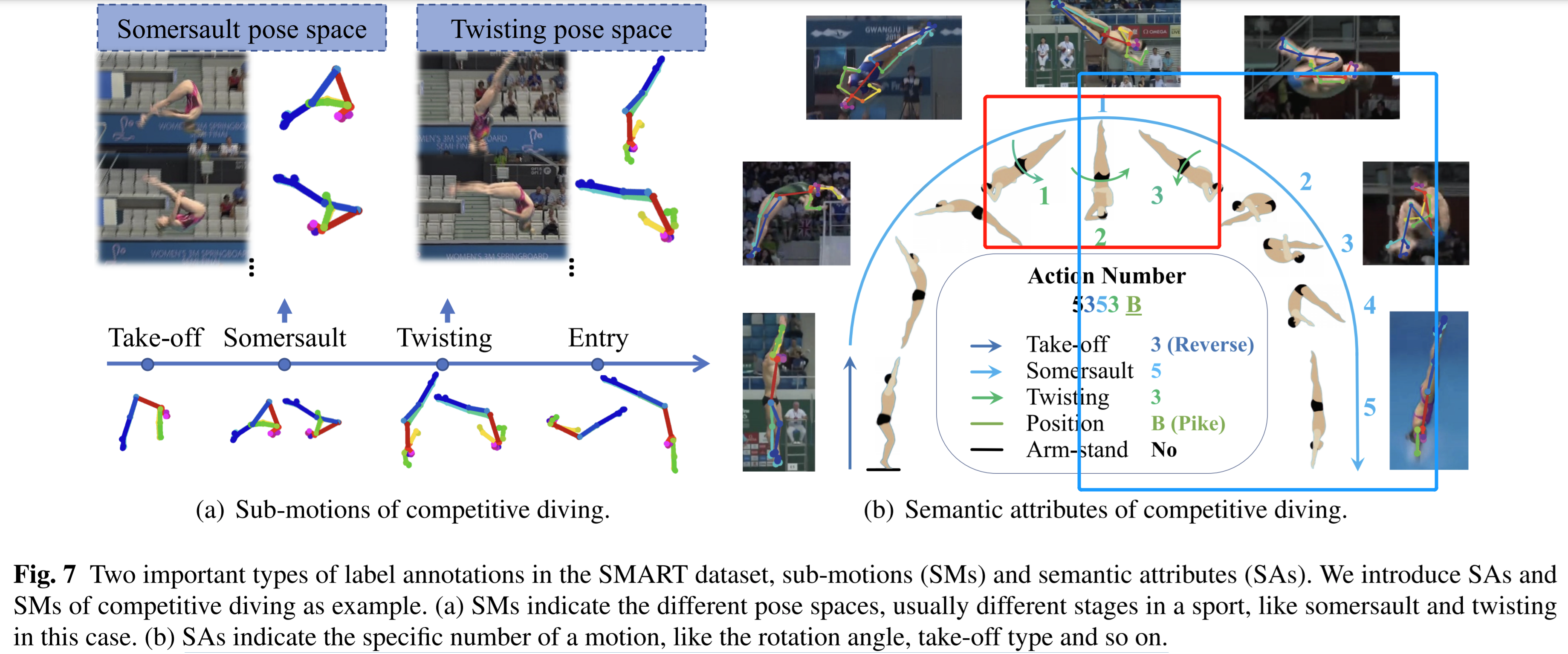

- Paper 37: SportsCap: Monocular 3D Human Motion Capture and Fine-grained Understanding in Challenging Sports Videos

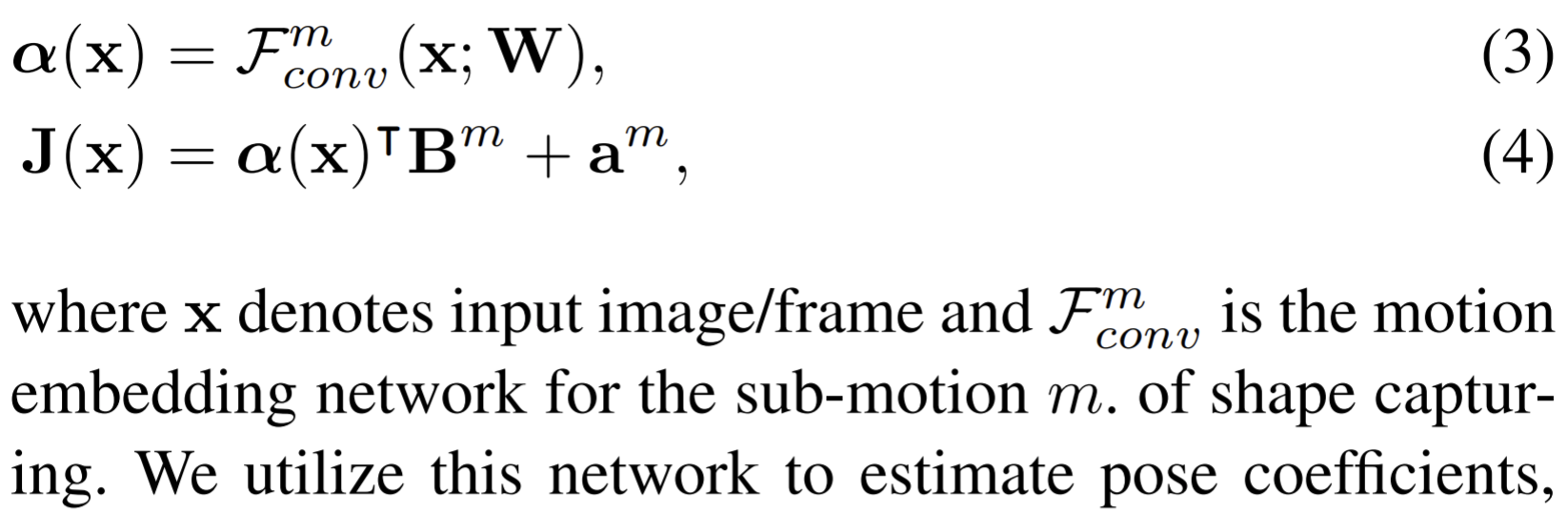

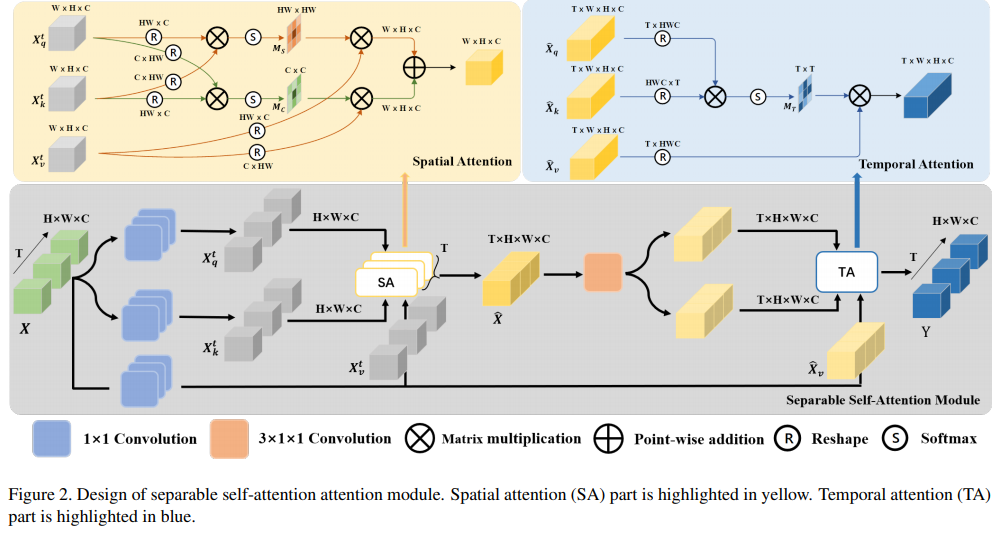

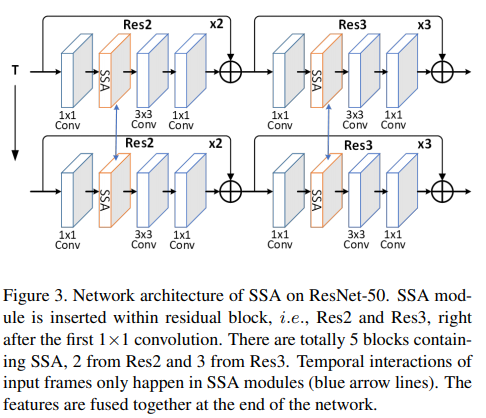

- Paper 38: SSAN: Separable Self-Attention Network for Video Representation Learning

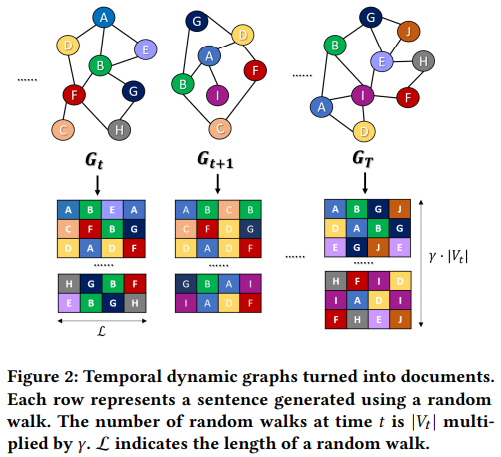

- Paper 39: tdgraphembed: Temporal dynamic graph-level embedding

- Paper 40: Videomoco: Contrastive video representation learning with temporally adversarial examples

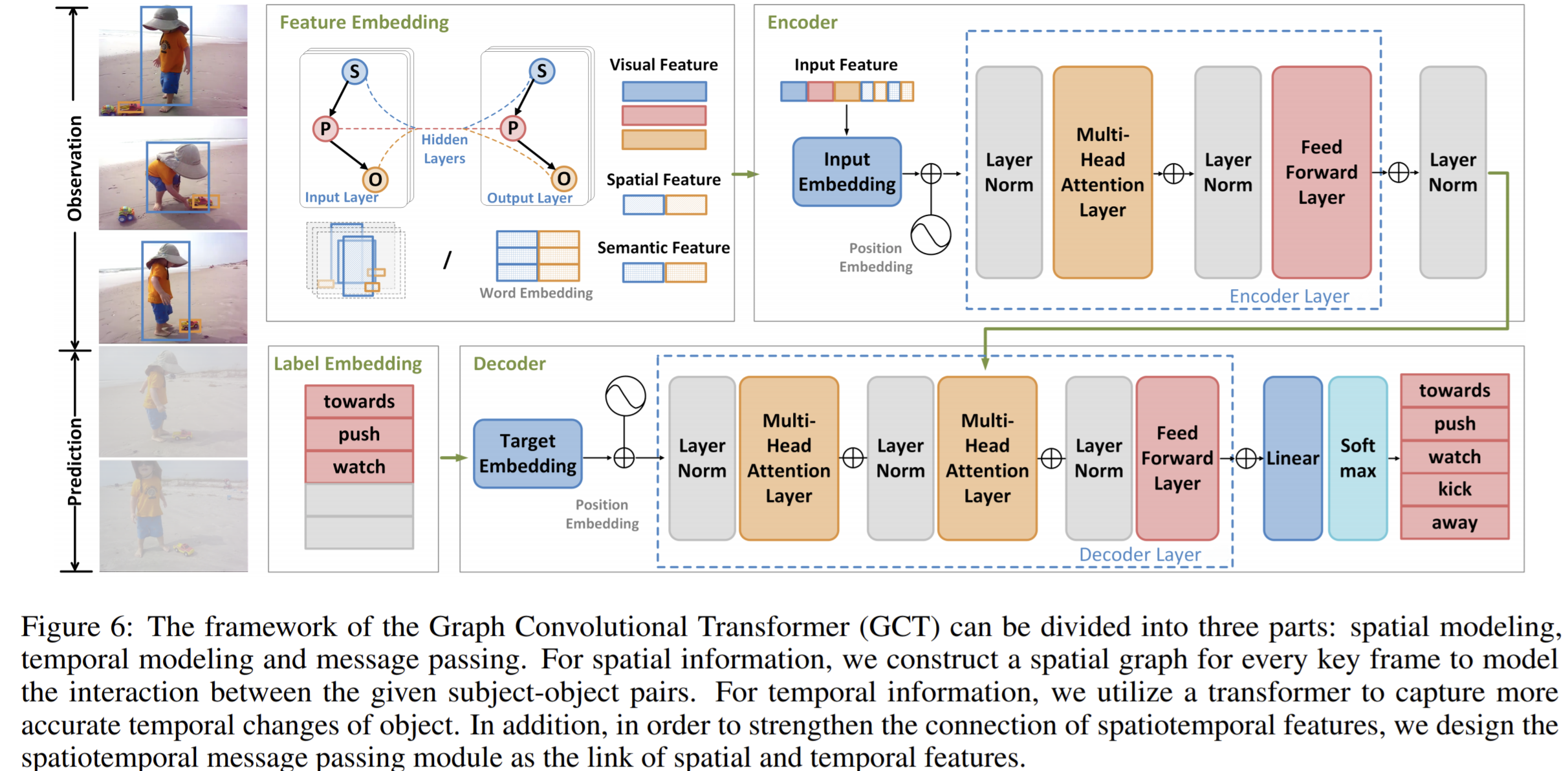

- Paper 41: Visual Relationship Forecasting in Videos

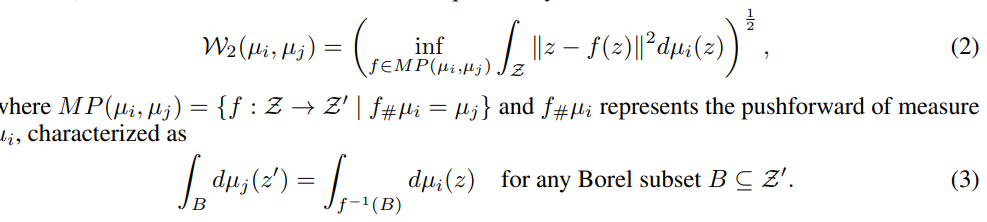

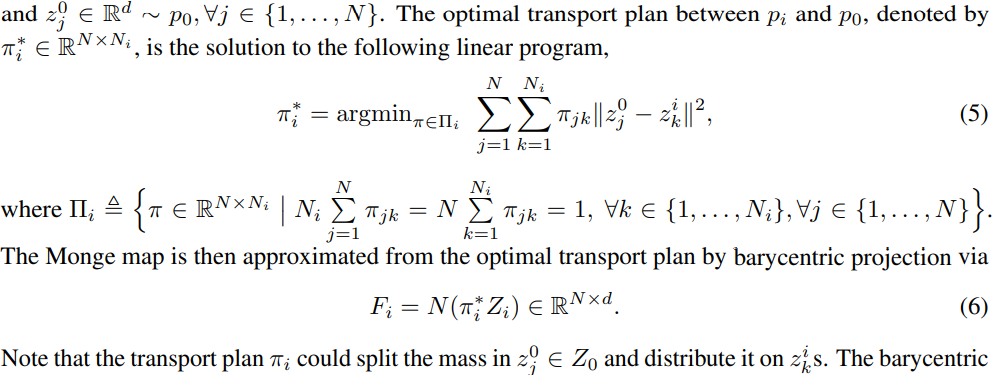

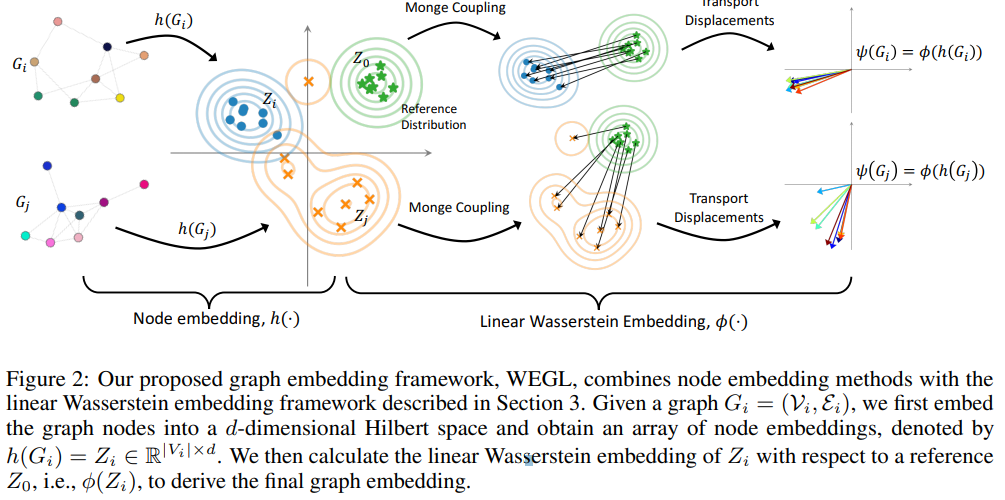

- Paper 42: Wasserstein embedding for graph learning

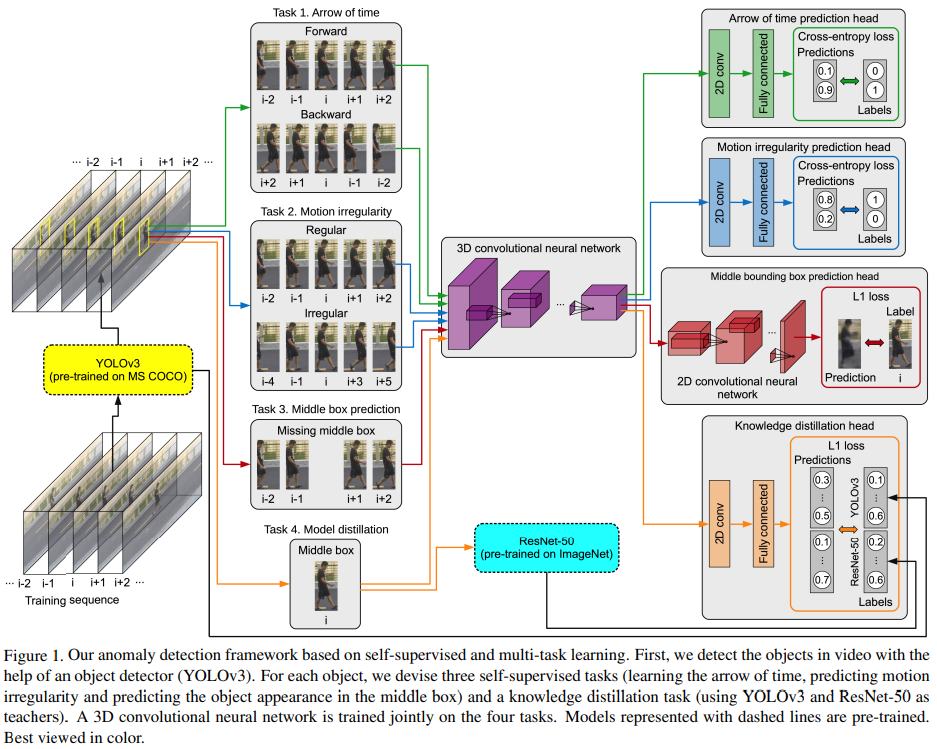

- Paper 43: Anomaly Detection in Video via Self-Supervised and Multi-Task Learning

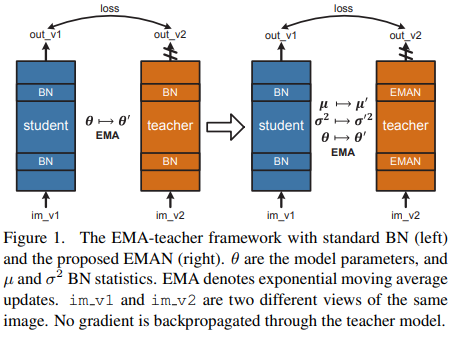

- Paper 44: Exponential Moving Average Normalization for Self-supervised and Semi-supervised Learning

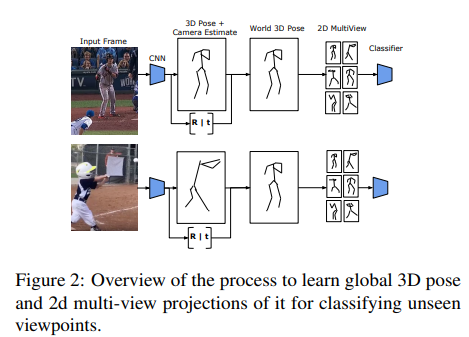

- Paper 45: Recognizing Actions in Videos from Unseen Viewpoints

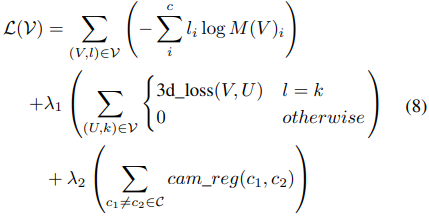

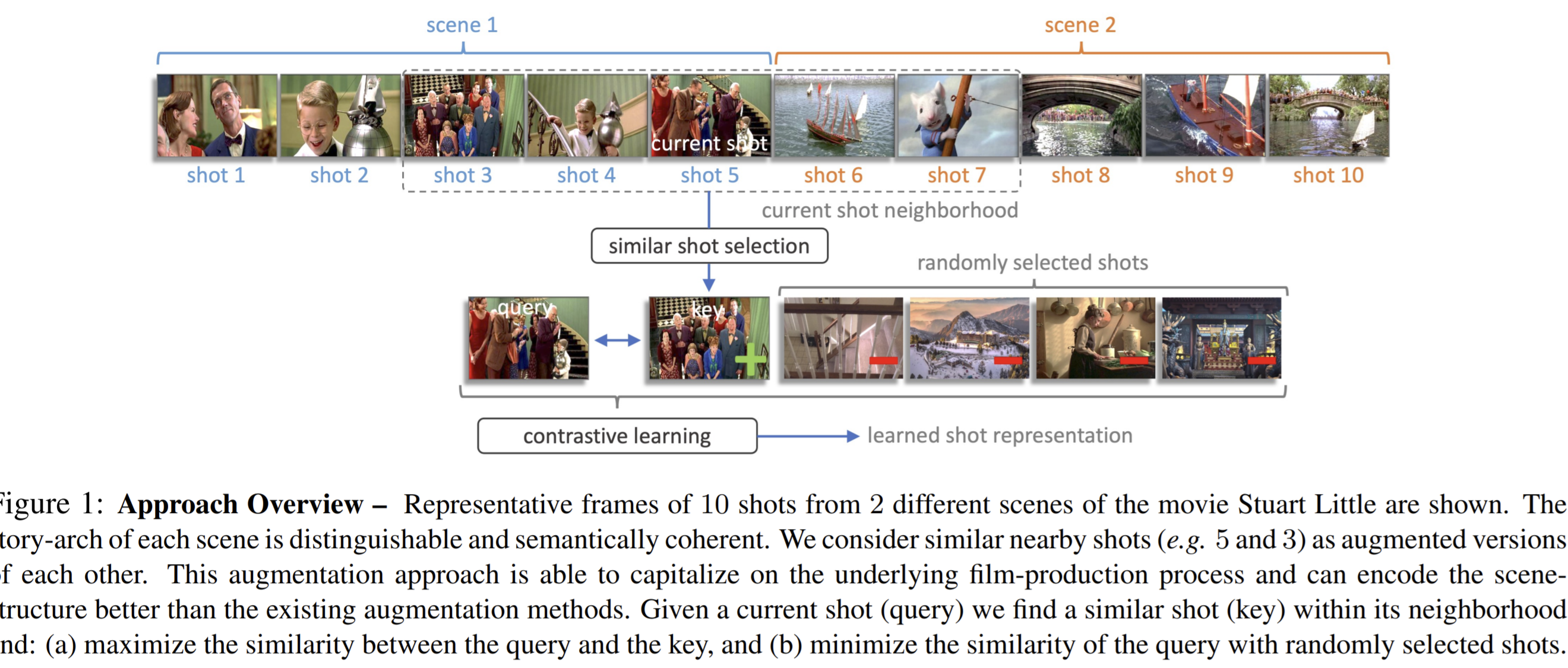

- Paper 46: Shot Contrastive Self-Supervised Learning for Scene Boundary Detection

Paper 1: Jigsaw Clustering for Unsupervised Visual Representation Learning

Codes: https://github.com/dvlab-research/JigsawClustering

Previous pretext task

- Intra-image tasks: including colorization and jigsaw puzzle, design a transform of one image and train the network to learn the transform.

- Since only the training batch itself is forwarded each time, they name these methods as single -batch methods.

- Can be achieved using only one image's information, limiting the learning ability of feature extractors.

- Inter-image tasks

- require the network to discriminate among different images.

- Try to reduce the distance between representations of positive pairs and enlarge the distance between representations of negative samples.

- since each training batch and its augmented version are forwarded simultaneously, methods are named as dual-batches methods.

- The way to design an efficient single-batch based method with similar performance to dual-batches methods is still an open problem.

Ideas

They propose a framework for efficient training of unsupervised models using Jigsaw clustering, which combines advantages of solving jigsaw puzzles and contrastive learning, and makes use of both intra- and inter-image information to guide feature extractor.

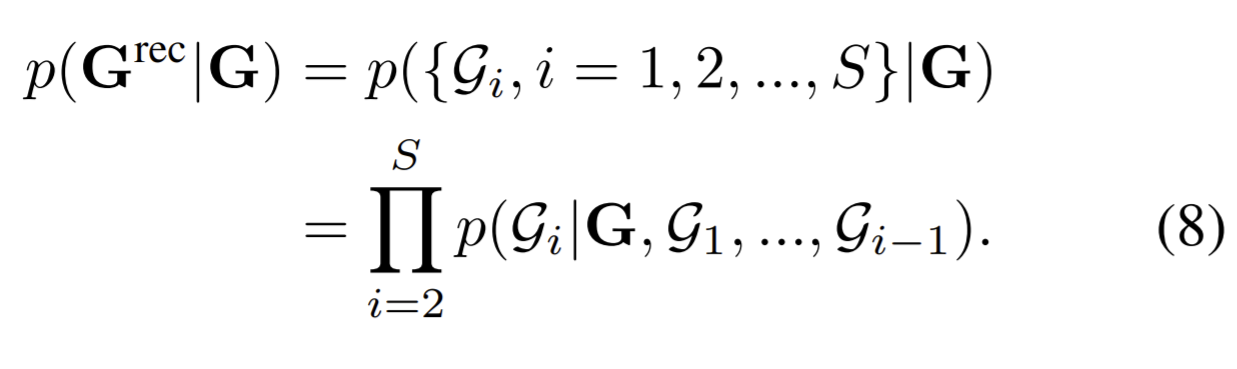

Jigsaw Clustering task

- Every image in a batch is split into different patches. They are randomly permuted and stitched to form a new batch for training.

- Goal : recover the disrupted parts back to the original images.

- The patches are permuted in a batch

- The network has to distinguish between different parts of one image and identifies their original positions to recover the original image from multiple montage input images.

- Why works?

- Discriminating among different patches in one stitched image forces the model to capture instance-level information inside an image. This level of feature is missing in general in other contrastive learning methods.

- Clustering different patches from multiple input images helps the model learn image-level features across images.

- arranging every patch to the correct location requires detailed location information, which was considered in single-batch methods.

How?

Batches

Each batch will have \(n\) images, and after splitting (patches split in images have a level of overlap), there will be \(n\times m\times m\) patches, the patches are latterly stitched into \(n\) images. The cluster branch will cluster the \(n\times m\times m\) patches into \(n\) classes so as to define which original image that one patch comes from.

- Using montage images as input instead of every single patch is noteworthy, since directly using small patches as input leads to the solution with only global information.

- the input images form only one batch with the same size as the original batch, which costs half of resource during training compared with recent methods.

- The choice of \(m\) affects the difficulty of the task. They show that \(m=2\) is good.

Network

- The logits is in size \(n\times m \times m\). (in location branch)

Loss function

The target of clustering is pulling together objects from the same class and pushing away patches from different classes.

The loss function of location branch is simply cross-entropy loss.

The final objective is the weighted summation of the two losses mentioned above. But in their experiments, when the two loss are simply summed, they get the best result.

Paper 2: Self-supervised Motion Learning from Static Images

Why

- To well distinguish actions, correctly locating the prominent motion areas is of crucial importance.

Previous work

- Motion learning by architectures: two-stream networks and 3D convolutional networks. The two-stream networks extract motions representations explicitly from optical flows, while 3D structures apply convolutions on the temporal dimension or space-time cubics to extract motion cues implicitly.

- Self-supervised image representation learning: patch-based approaches , image-level pretext tasks such as image inpainting, image colorization, motion segment prediction and predicting image rotations.

- Self-supervised video representation learning: extend patch-based context prediction to spatial-temporal scenarios, e.g., spatio-temporal puzzles, video cloze procedure and frame/clip order prediction; learn representations by predicting future frames; generate supervision signals, such as speed up prediction and play back rate prediction.

How

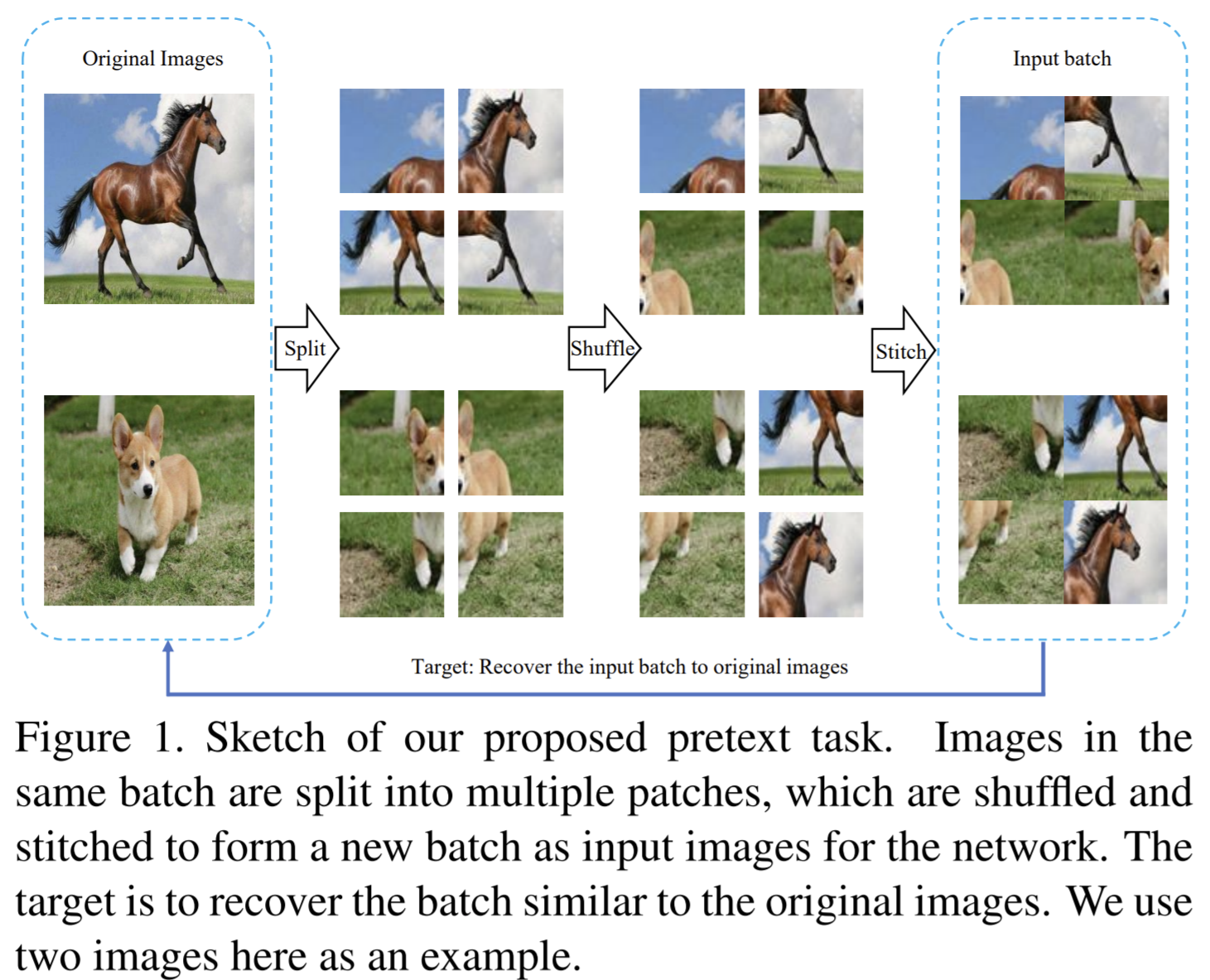

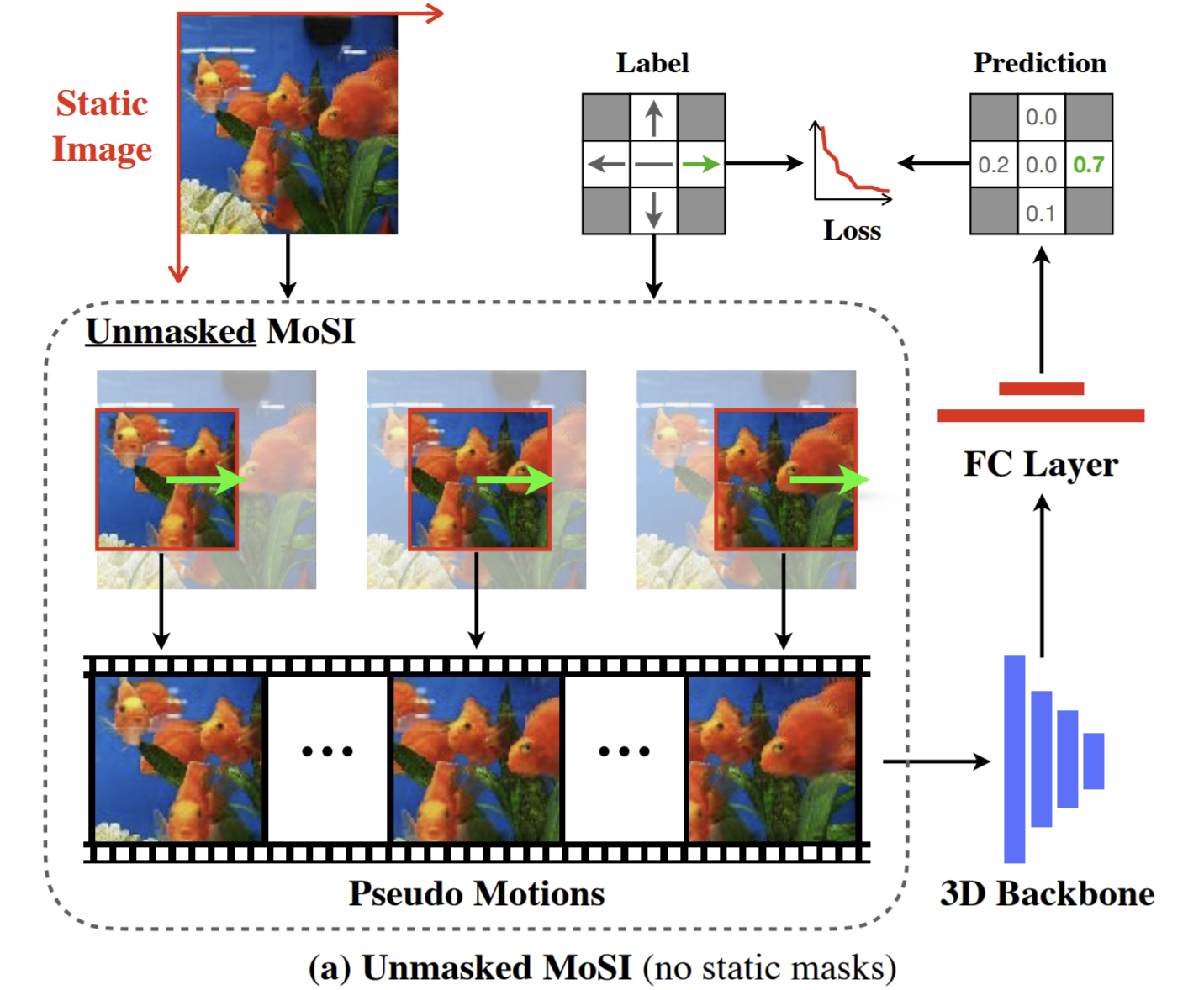

Idea

- Learn Motion from Static Images (MoSI), take images as our data source, and generate deterministic motion patterns.

- Given the desired direction and the speed of the motions, MoSI generates pseudo motions from static images. By correctly classifying the direction and speed of the movement in the image sequence, models trained with MoSI is able to well encode motion patterns.

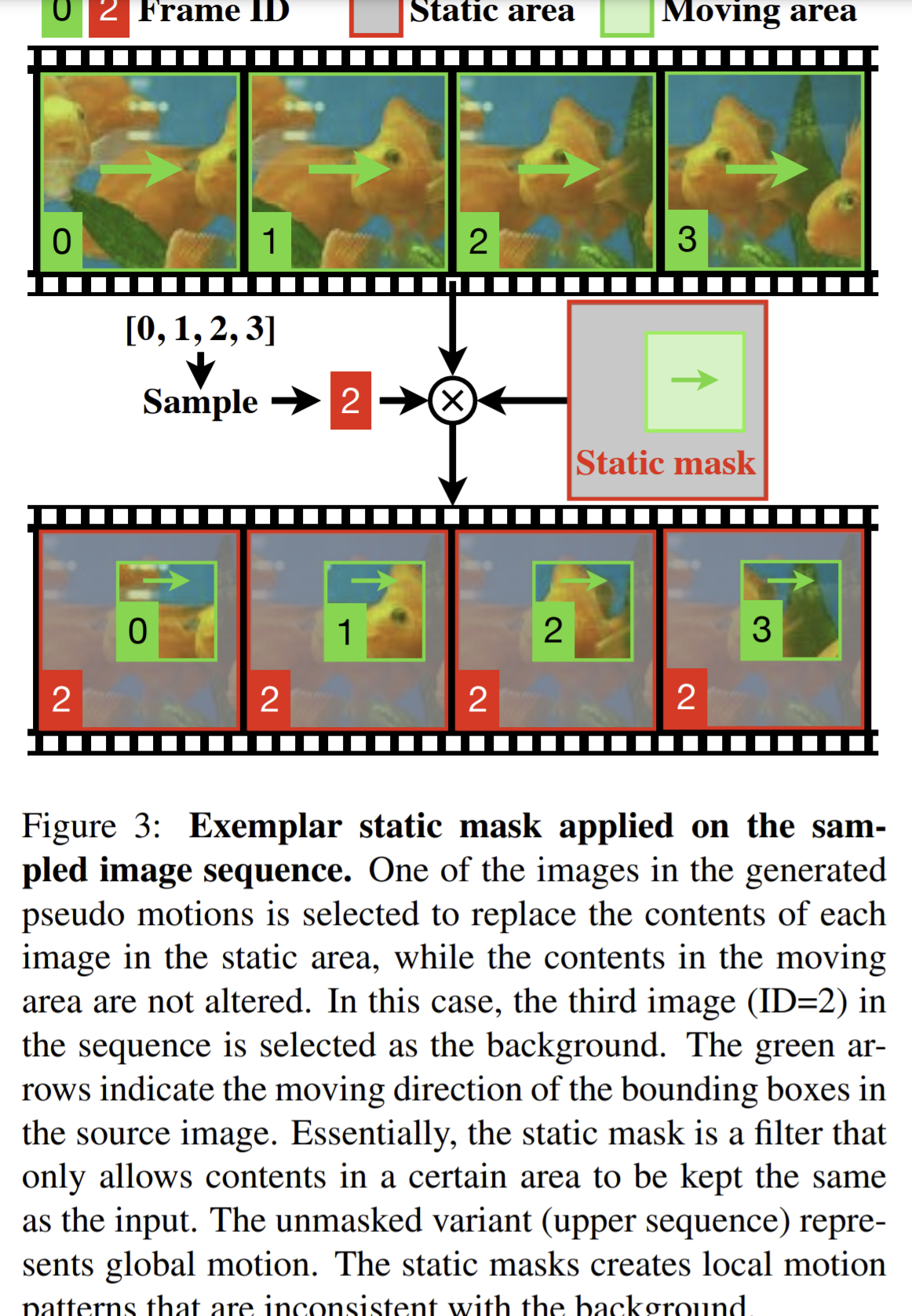

- Furthermore, a static mask is applied to the pseudo motion sequences. This produces inconsistent motions between the masked area and the unmasked one, which guides the network to focus on the inconsistent local motions

Motion learning from static images

Pseudo motions

Label pool:

- For each label, a non-zero speed only exists on one axis.

Pseudo motion generation

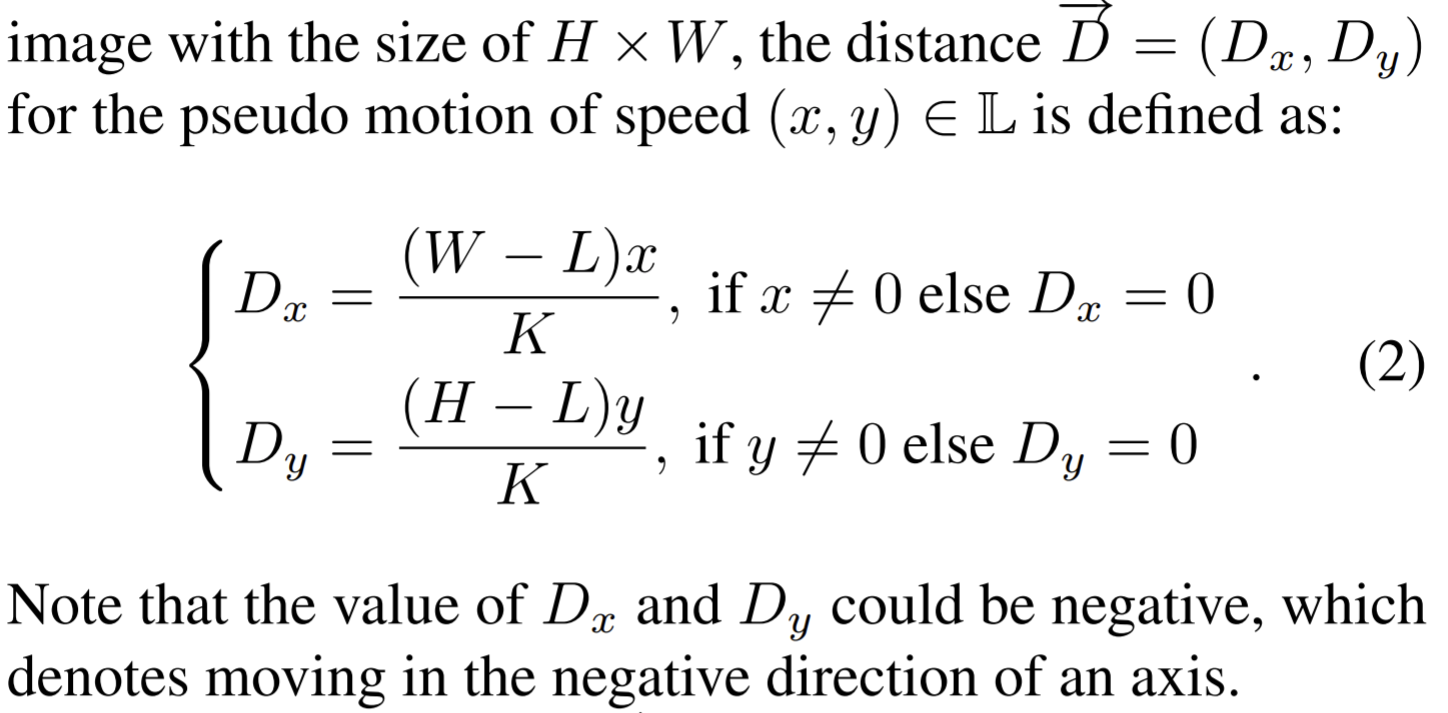

To generate the samples with different speeds, the moving distance from the start to the end of the Pseudo sequences are need.

The start location is randomly sampled from a certain area which ensures the end location is located completely within the source image.

For label \((x, y) = (0, 0)\), where the sampled image sequence is static on both axis, the start location is selected from the whole image with uniform distribution.

Classification

- each batch contains all transformed image sequences generated from the same source image

- The model is trained by cross entropy loss.

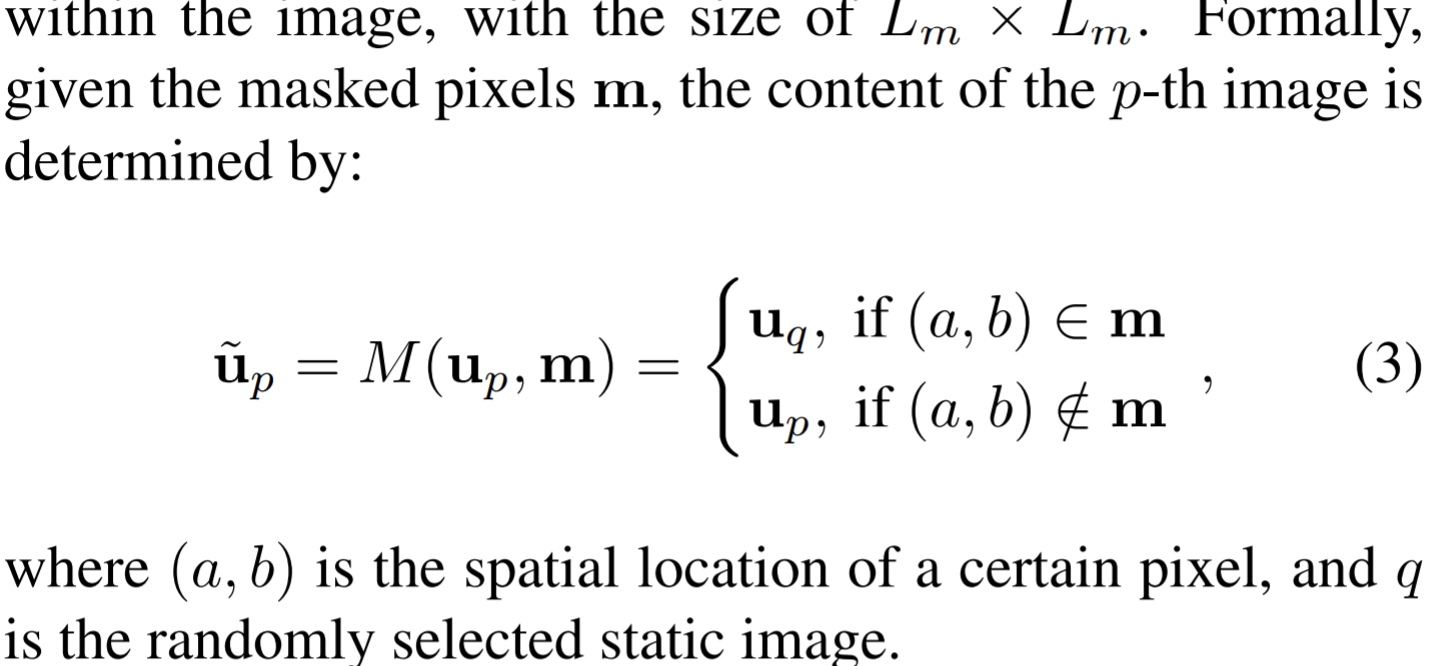

Static masks

- The static masks creates local motion patterns that are inconsistent with the background.

- introduce static masks as the second core component of the proposed MoSI since the model may possibly focus on just several pixels.

- the model is now required not only to recognize motion patterns, but also to spot where the motion is happening

Implementation

- Data preparations: the source images need to be first sampled from the videos in the video datasets.

- Augmentation: randomize the location and the size of the unmasked area. In addition, randomize the selection of the background frames in the MoSI.

Paper 3: Self-supervised Video Representation Learning by Context and Motion Decoupling

Why

- What to learn ?

- Context representation can be used to classify certain actions, but also leads to background bias.

- Motion representation

- Problem

- The source of supervision: video in compressed format (such as MPEG-4) roughly decouples the context and motion information in its I-frames and motion vectors.

- I-frames can represent relatively static and coarse-grained context information, while motion vectors depict dynamic and fine-grained movements

- The source of supervision: video in compressed format (such as MPEG-4) roughly decouples the context and motion information in its I-frames and motion vectors.

Previous work

- SS video representation learning

- video specific pretext tasks: estimating video playback rates, verifying temporal order of clips, predicting video rotations, solving space-time cubic puzzles, and dense predictive coding.

- Contrastive learning

- clips from the same video are pulled together while clips from different videos are pushed away.

- employ adaptive cluster assignment, where the representation and embedding clusters are simultaneously learned.

- But they may suffer from the context bias problem.

- mutual supervision across modalities

- DSM: enhance the learned video representation by decoupling the scene and the motion. It simply changes the construction of positive and negative pairs in contrastive learning.

- Action recognition in compressed videos

- Video compression techniques (e.g., H.264 and MPEG4) usually store only a few key frames completely, and reconstruct other frames using motion vectors and residual errors from the key frames.

- Some methods directly build models on the compressed data.

- One replace the optical flow stream in two-stream action recognition models with a motion vector stream.

- CoViAR: use all modalities, including I-frames, motion vectors and residuals.

- Motion prediction

- deduce the states of an object in a near future.

- Typical models: RNNs, Transformers, and GNNs.

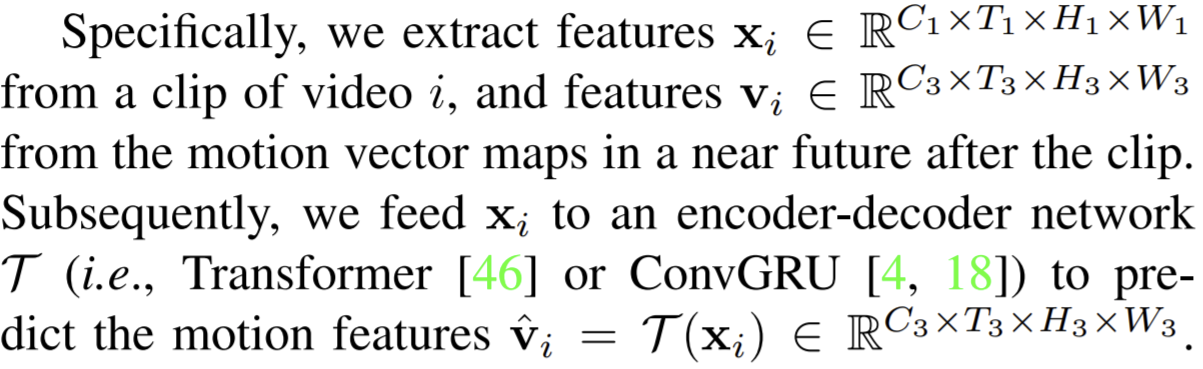

Goal

Design a self-supervised video representation learning method that jointly learns motion prediction and context matching.

- The context matching task aims to give the video network a rough grasp of the environment in which actions take place. It casts a NCE loss between global features of video clips and I-frames, where clips and I-frames from the same videos are pulled together, while those from different videos are pushed away.

- The motion prediction task requires the model to predict pointwise motion dynamics in a near future based on visual information of the current clip.

- They use pointwise contrastive learning to compare predicted and real motion features at every spatial and temporal location \((x,y,t)\), which will lead to more stable pretraining and better transferring performance.

- It works as a strong regularization for video networks, and it can also be regarded as an auxiliary task clearly improves the performance of supervised action recognition.

How

- Data: compressed videos, to be exactly, MPEG-2 Part2, where every I-frames is followed by 11 consecutive P-frames.

- Methods: context matching task for coarse-grained and relatively static context representation, and a motion prediction task for learning fine-grained and high-level motion representation.

- context matching

- where (video clip, I-frame) pairs from the same videos are pulled together, while pairs from different videos are pushed away

- Motion prediction

- Only feature points corresponding to the same video \(i\) and at the same spatial and temporal position \((x, y, t)\) are regarded as positive pairs, otherwise they are regarded as negative pairs.

- The input and output for Transformer is considered as a 1-D sequence.

- Some findings

- Predicting future motion information leads to significantly better video retrieval performance compared with estimating current motion information;

- Matching predicted and “groundtruth” motion features using the pointwise InfoNCE loss brings better results than directly estimating motion vector values;

- Different encoder-decoder networks lead to similar results, while using Transformer performs slightly better.

- context matching

Paper 4: Skip-convolutions for Efficient Video Processing

Codes: https://github.com/Qualcomm-AI-research/Skip-Conv

Why

- Leverage the large amount of redundancies in video streams and save computations

- The spiking nets is lack of efficient training algorithms

- Residual frames provide a strong prior on the relevant regions, easing the design of effective gating functions

Previous work

- Efficient video models

- feature propagation, which computes the expensive backbone features only on key-frames.

- interleave deep and shallow backbones between consecutive frames: methods are mostly suitable for global prediction tasks where a single prediction is made for the whole clip.

- Efficient image models: The reduction of parameter redundancies

- model compression: Skip-Conv leverages temporal redundancies in activations.

- conditional computations in developing efficient models for images.

Goal

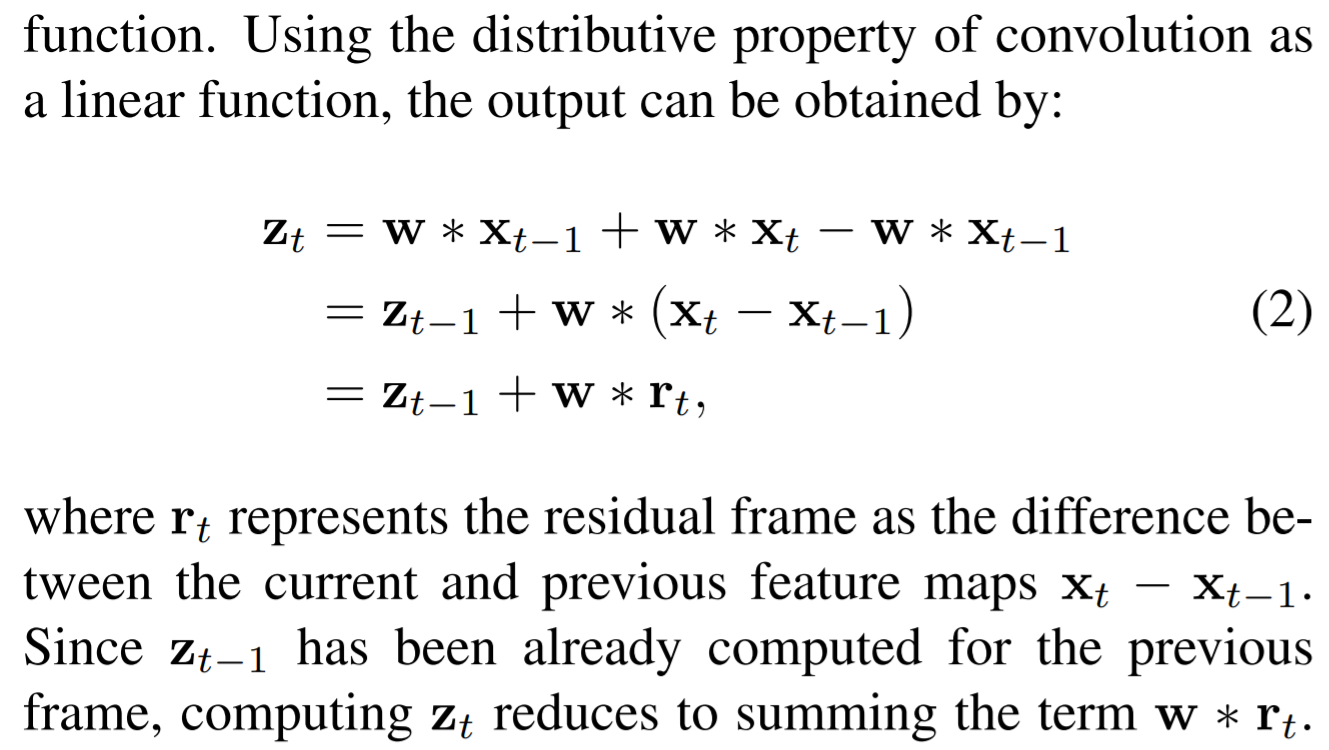

To speed up any convolutional network for inference on video streams. Considering a video as a series of changes across frames and network activations, denotes as residual frames. They reformulate standard convolution to be efficiently computed over such residual frames by limiting the computation only to the regions with significant changes while skipping the others. The important residuals are learned by a gating function.

How

- The contributions

- a simple reformulation of convolution, which computes features on highly sparse residuals instead of dense video frames

- Two gating functions, Norm gate and Gumbel gate, to effectively decide whether to process or skip each location, where Gumbel gate is trainable.

Skip Convolutions

Convolutions on residual frames

- for every kernel support filled with zero values in \(\mathrm{r}_t\), the corresponding output will be trivially zero, and the convolution can be skipped by copying values from \(\mathrm{z}_{t−1}\) to \(\mathrm{z}_{t}\).

- Introduce a gating function for each convolutional layer to predict a binary mask indicating which locations should be processed, and taking only \(\mathrm{r}_t\) as input. \(\mathrm{r}_t\) as input will provide a strong prior to the gating function.

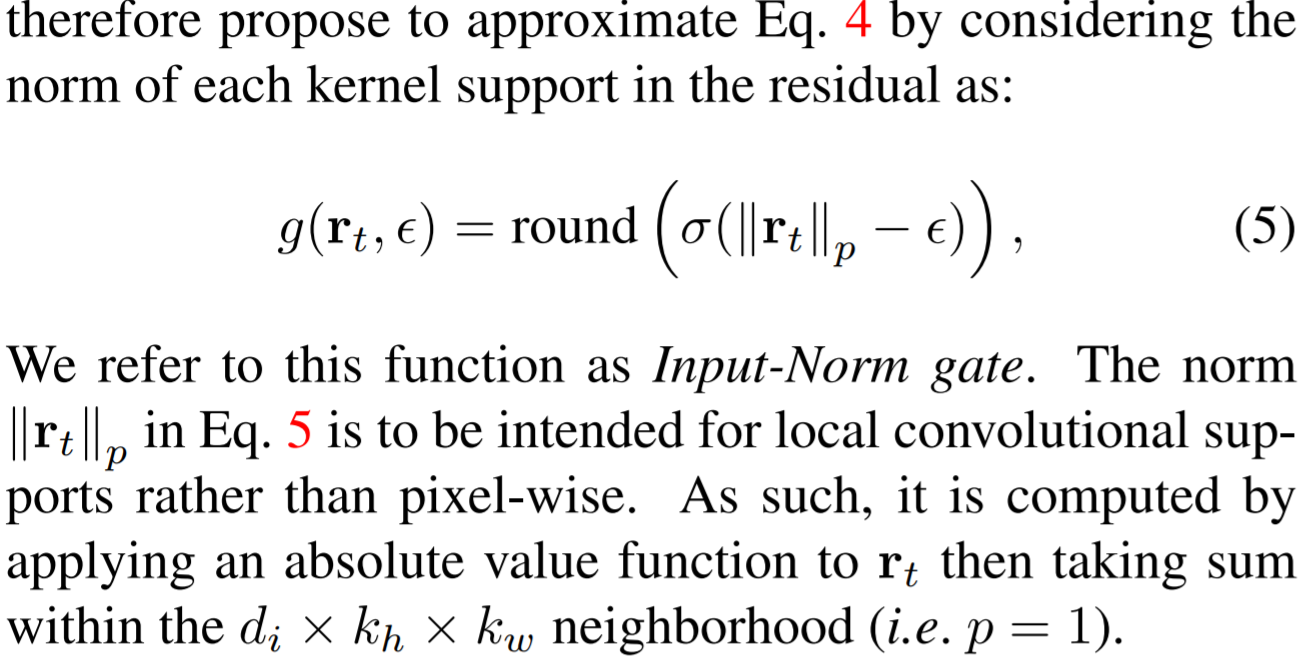

Gating functions

Norm gate: decides to skip a residual if its magnitude (norm) is small enough, not learnable

- indicate regions that change significantly across frames, but not all changes are equally important for the final prediction.

Gumbel gate, trainable with the convolutional kernels.

A higher efficiency can be gained by introducing a higher

pixel-wise Bernoulli distributions by applying a sigmoid function. During training, sample binary deisions from the Bernoulli distribution.

Employ the Gumbel reparametrization and a straight-through gradient estimator in order to backpropagate through the sampling procedure.

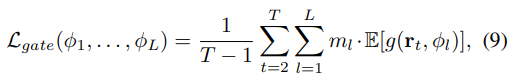

The Gating parameters are learned jointly with all model parameters by minimizing \(\mathcal{L}_{task}+\beta \mathcal{L}_{gate}\).

The gating loss is defined as the average multiply-accumulate (MAC) count needed to process \(T\) consecutive frames as

train the model over a fixed-length of frames and do inference iteratively on an binary number of frames.

By simply adding a downsampling and an unsampling function on the predicted gates, the Skip-conv can be extended to generate structured sparsity. This structure will enable more efficient implementation with minimal effect on performance.

Generalization and future work

Paper 5: Temporal Query Networks for Fine-grained Video Understanding

Codes: http://www.robots.ox.ac.uk/~vgg/research/tqn/

Why

- For finer-grain classification which depends on subtle differences in pose, the specific sequence, duration and number of certain subactions, it requires reasoning about events at varying temporal scales and attention to fine details.

- the constraints imposed by finite GPU memory. To overcome this, one way is to use pretrained features, but this relies on good initializations and ensures a small domain gap. Another solution focuses on extracting key frames from untrimmed videos.

- VQA (visual question and answering)

- Have queries which attends to relevant features for predicting the answers.

- The problem in this paper is more interested in a common set of queries shared across the whole dataset.

Goal

Fine-grained classification of actions in untrimmed videos.

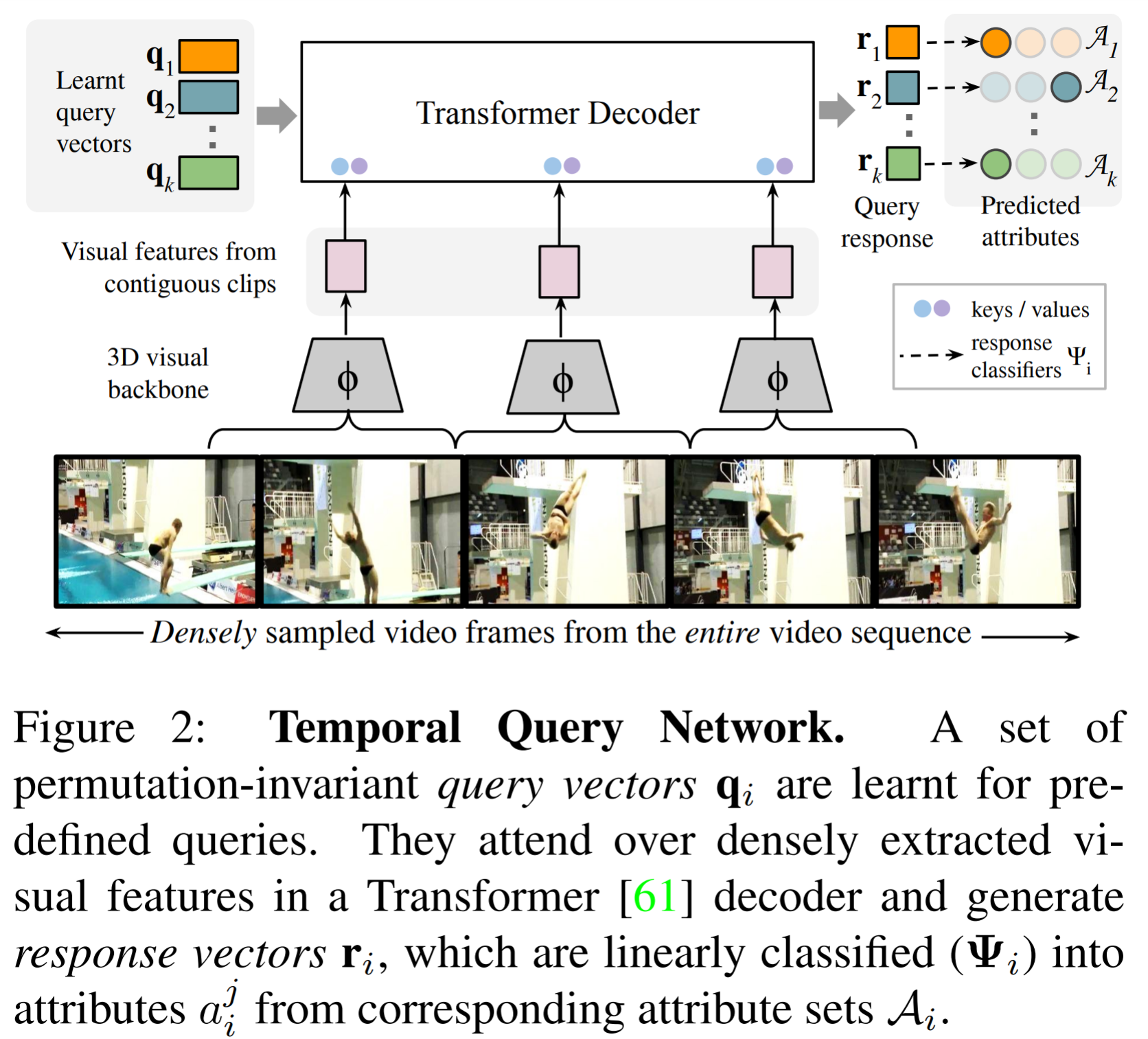

Propose a Transformer-based video network, namely the Temporal Query Network (TQN) for fine-grained action classification, which will take a video and a predefined set of queries as input and output responses for each query, where the response is query dependent.

The queries act as "experts" that are able to pick out from the video the temporal segments required for their response.

- Pick out relevant temporal segments and ignore irrelevant segments.

- Since only relevant segments will help in classification, the excessive temporal aggregation may lose the signal in the noise.

Introduce a stochastically updated feature bank to solve memory constraints.

- features from densely sampled contiguous temporal segments are cached over the course of training,

- only a random subset of these features is computed online and backpropagated through in each training iteration

How

Train with weak supervision, meaning that at training time the temporal location information for the response is not proposed.

TQN

- Identifies rapidly occurring discriminative events in untrimmed videos and can be trained given only weak supervision.

- Achieves by learning a set of permutation-invariant query vectors corresponding to predefined queries about events and their attributes, which are transformed into response vectors using Transformer decoder layers attending to visual features extracted from a 3DCNN backbone.

- Given an untrimmed video, first visual features for contiguous non-overlapping clips of 8 frames are extracted using a 3D ConvNet.

- multiple layers of a parallel non-autoregressive Transformer decoder

- The training loss is a multi-task combination of individual classifier losses, which are Softmax cross-entropy, where the labels are the ground-truth attribute for the label query.

Stochastically updated feature bank

- The memory bank caches the clip-level 3DCNN visual features.

- In each training iteration, a fixed number \(n_{online}\) of randomly samples consecutive clips are forwarded through the visual encoder, while the remaining \(t-n_{online}\) clip features are retrieved from the memory bank.

- The two sets mentioned above are then combined and input into the TQN decoder for final prediction and backpropagation.

- During inference, all features are computed online without the memory bank.

- Advantages: fixed number of clips to reduce the memory price. Also enables to extend temporal context and promotes diversity in each mini-batch as multiple different videos can be included instead of just a single long video.

Factorizing categories into attribute queries

- This factorization unpacks the monolithic category labels into their semantic constituents

- The categories are factorized into multiple queries that with several attributes respectively.

Implementation

- Use S3D as visual backbone, operating on non-overlapping contiguous video clips of 8 frames.

- The decoder consists of 4 standard post-normalization Transformer decoder layers, each with 4 attention heads.

- The visual encoder is pre-trained on Kinetics-400.

Paper 6: Unsupervised disentanglement of linear-encoded facial semantics

Why

- Sampling along the linear-encoded representation vector in latent space will change the associated facial semantics accordingly.

- Current frameworks that maps a particular facial semantics to a latent representation vector relies on training offline classifiers with manually labeled datasets. Therefore they require artificially defined semantics and provide the associated labels for all facial images. If training with labeled facial semantics:

- They demand extra effort on human annotations for each new attributes proposed

- Each semantics is defined artificially

- unable to give any insights on the connections among different semantics

- Previous work

- Synthesizing faces by GAN, which changes the target attribute but keep other information ideally unchanged.

- The comprehensive design of loss functions.

- the involvement of additional attribute features

- the architecture design

- To achieve meaningful representations, one should always introduce either supervision or inductive biases to the disentanglement method

- Inductive bias: rise from the symmetry of natural objects and the 3D graphical information.

- Reconstruct the face images by carefully remodeling the graphics of camera principal, which makes it possible to decompose the facial images into environmental semantics and other facial semantics.

- It's unable to generate realistic faces and perform pixel-level face editing on it.

- Synthesizing faces by GAN, which changes the target attribute but keep other information ideally unchanged.

Goal

- Photo-realistic images synthesizing, minimize the demand for human annotations

- Capture linear-encoded facial semantics.

How

With a given collection of coarsely aligned faces, a GAN is trained to mimic the overall distribution of the data. Then use the faces that the trained GAN generates as training data and trains a 3D deformable face reconstruction method. A mutual reconstruction strategy stabilizes the training significantly. Then they keep a record of the latent code from StyleGAN and apply linear regression to disentangle the target semantics in the latent space.

Decorrelating latent code in StyleGAN

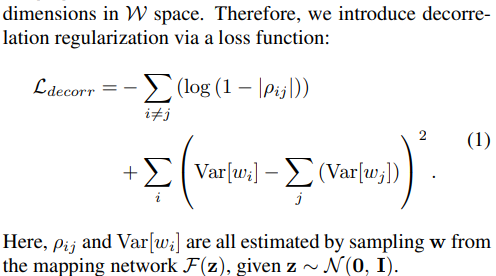

Enhance the disentangled representation by decorrelating latent codes.

In order to maximizes the utilization of all dimensions, they use Pearson correlation coefficient to zero and variance of all dimension.

Introduce decorrelation regularization via a loss function

The mapping network is the only one to update with the new loss.

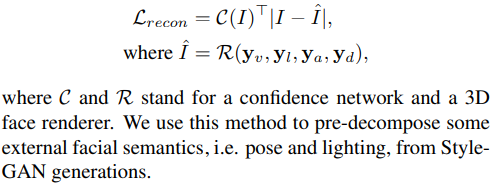

Stabilized training for 3D face reconstruction

Use the decomposed semantics to reconstruct the original input image with the reconstruction loss

- The 3D face reconstruction algorithm struggles to estimate the pose of profile or near-profile faces.

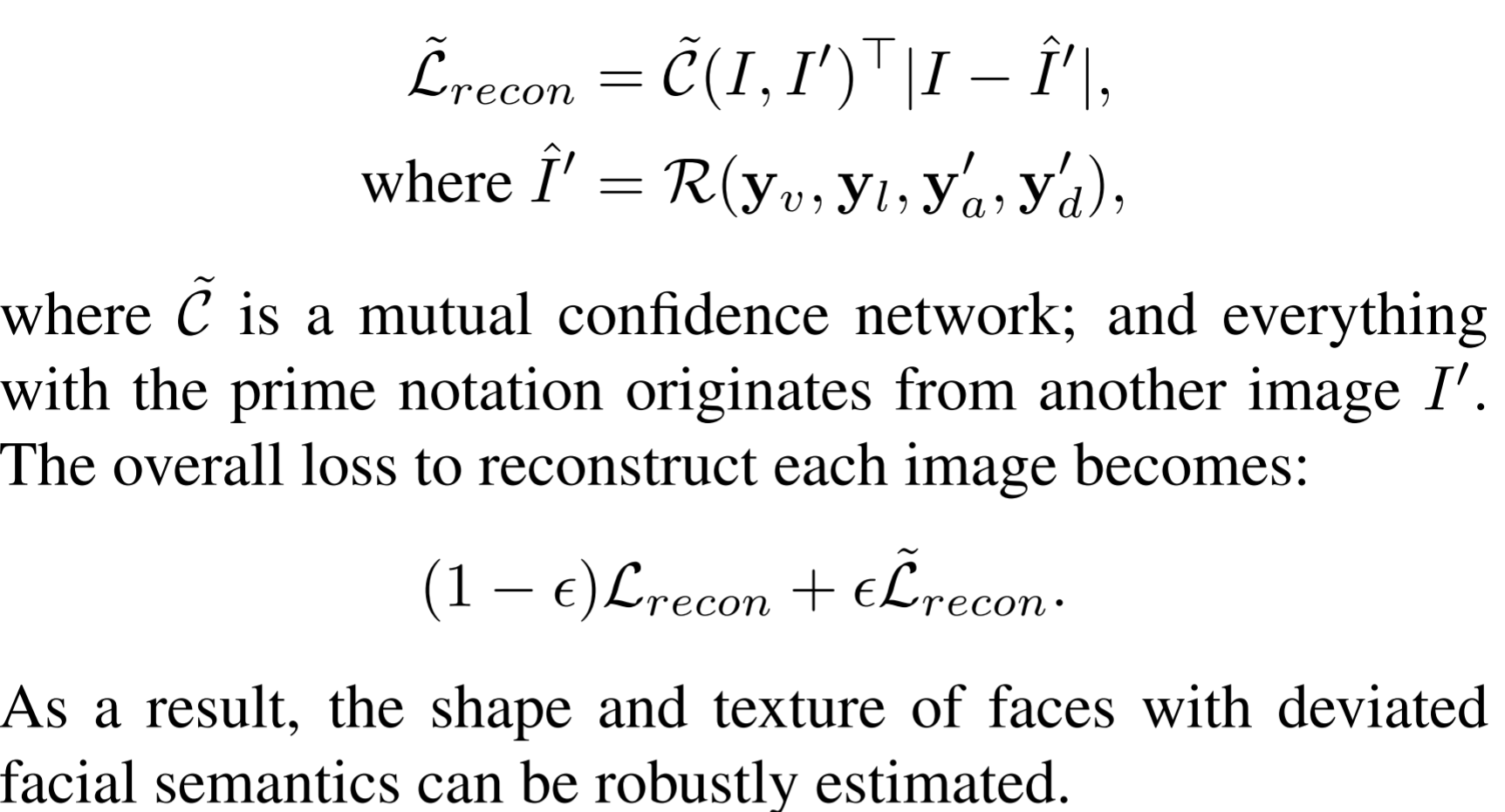

- The algorithm tries to use extreme values to estimate the texture and shape of each face independently, which deviate far away from the actual texture and shape of the face. To solve this, the mutual reconstruction strategy is proposed to prevent the model from using extreme values to fit individual reconstruction, and the model learns to reconstruct faces with a minimum variance of the shape and texture among all samples.

During training, they swap the albedo and depth map between two images with a probability \(\epsilon\) to perform the reconstruction with the alternative loss.

simply concatenate the two images channel-wise as input to the confidence network

Disentangle semantics with linear regression

- The Ultimate goal of disentangling semantics is to find a vector in StyleGAN, such that it only takes control of the target semantics.

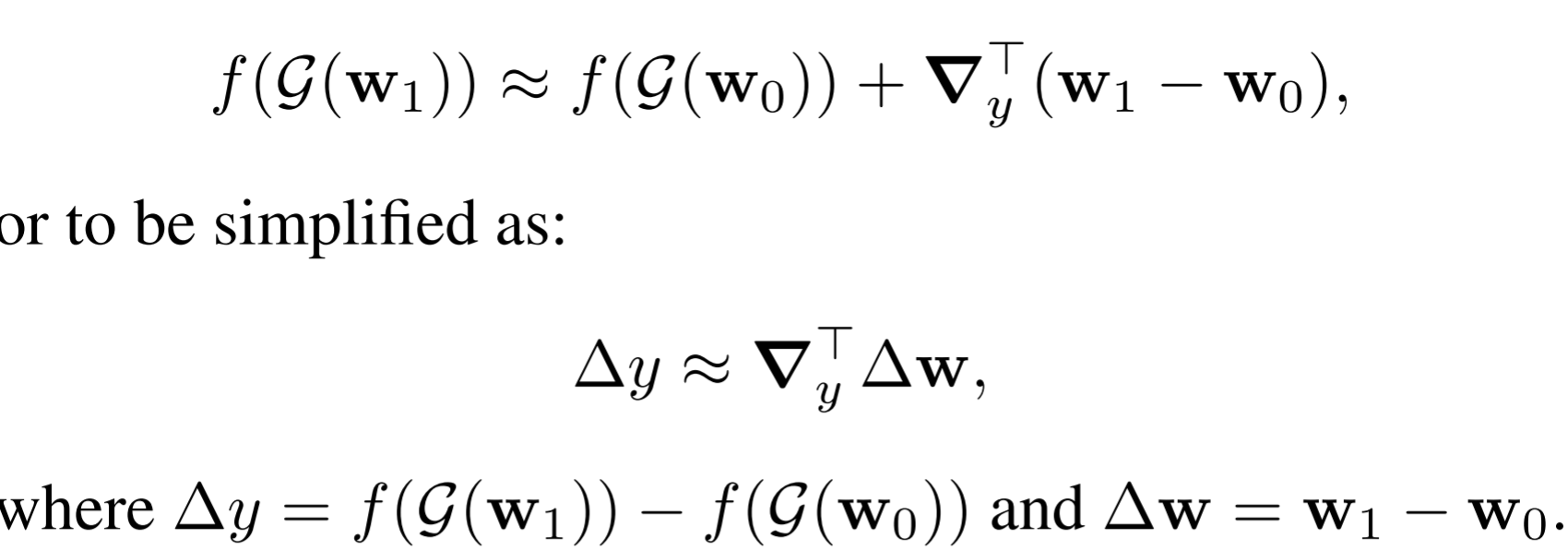

- Semantic gradient estimation

- It's observed that with StyleGAN, many semantics can be linear-encoded. Therefore, the gradient is now independent of the input latent code.

- Semantic linear regression

- In real world scenario, the gradient is hard to estimate directly because back-propagation only captures local gradient, making it less robust to noises.

- Propose a linear regression model to capture global linearity for gradient estimation.

Image manipulation for data augmentation

- One application is to perform data augmentation.

- By extrapolating along \(\mathrm{v}\) beyond its standard deviation, we can get samples with more extreme values for the associated semantics.

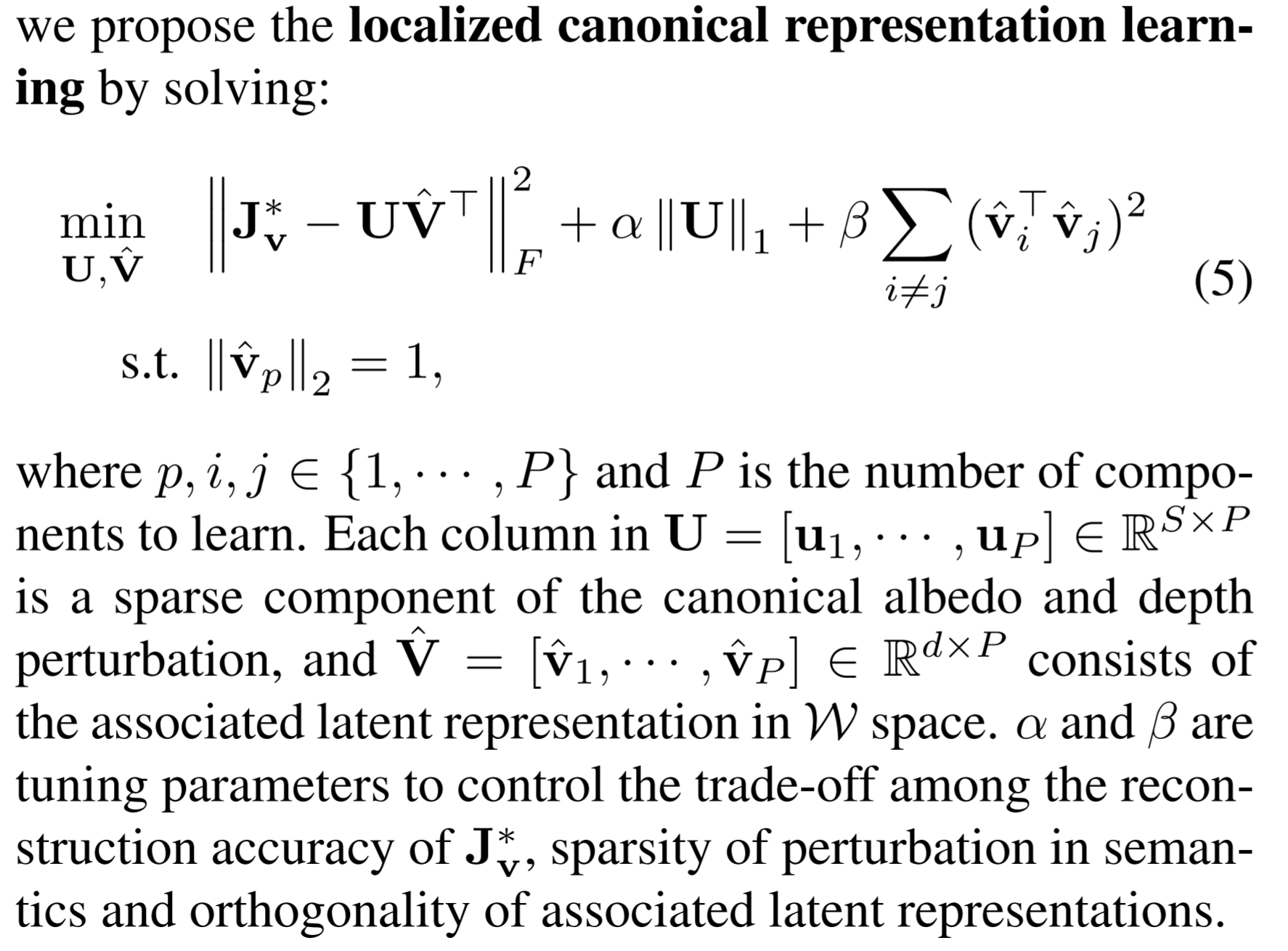

Localized representation learning

- Find the manipulation vectors \(\hat{\mathrm{v}}\) that capture interpretable combinations of pixel value variations.

- Start by defining a Jacobian matrix, which is the concatenation of all canonical pixel-level \(\mathrm{v}\).

- interpolation along \(\hat{\mathrm{v}}\) should result in significant but localized (i.e. sparse) change across the image domain.

Paper 7: Bi-GCN: Binary Graph Convolutional Network

Codes: https://github.com/bywmm/Bi-GCN

Why

- The current success of GNNs is attributed to an implicit assumption that the input of GNNs contains the entire attributed graph, which will collapse or the accuracy will decrease if the entire graph is too large.

- One intuitive solution for the problem is sampling: neighbor sampling or graph sampling. The graph sampling will sample subgraphs and can avoid neighbor explosion. But not like neighbor sampling, it cannot guarantee that each node is sampled.

- Neighbor sampling: GraphSAGE, VRGCN

- Sampling subgraphs: Fast-GCN, ClusterGCN, DropEdge, DropConnection, GraphSAINT for edge sampling.

- Another solution is compressing the size of input graph data the the GNN model: such as pruning, shallow networks, designing compact layers and quantizing the parameters.

- One intuitive solution for the problem is sampling: neighbor sampling or graph sampling. The graph sampling will sample subgraphs and can avoid neighbor explosion. But not like neighbor sampling, it cannot guarantee that each node is sampled.

- The challenges of compressed GNN

- The compression of the loaded data demands more attention

- The original GNN is shallow and therefore the compression will be more difficult to be achieved.

- Require the compressed GNNs to possess sufficient parameters for representations.

Goal

- reduce the redundancies in the node representations while maintain the principle information.

How

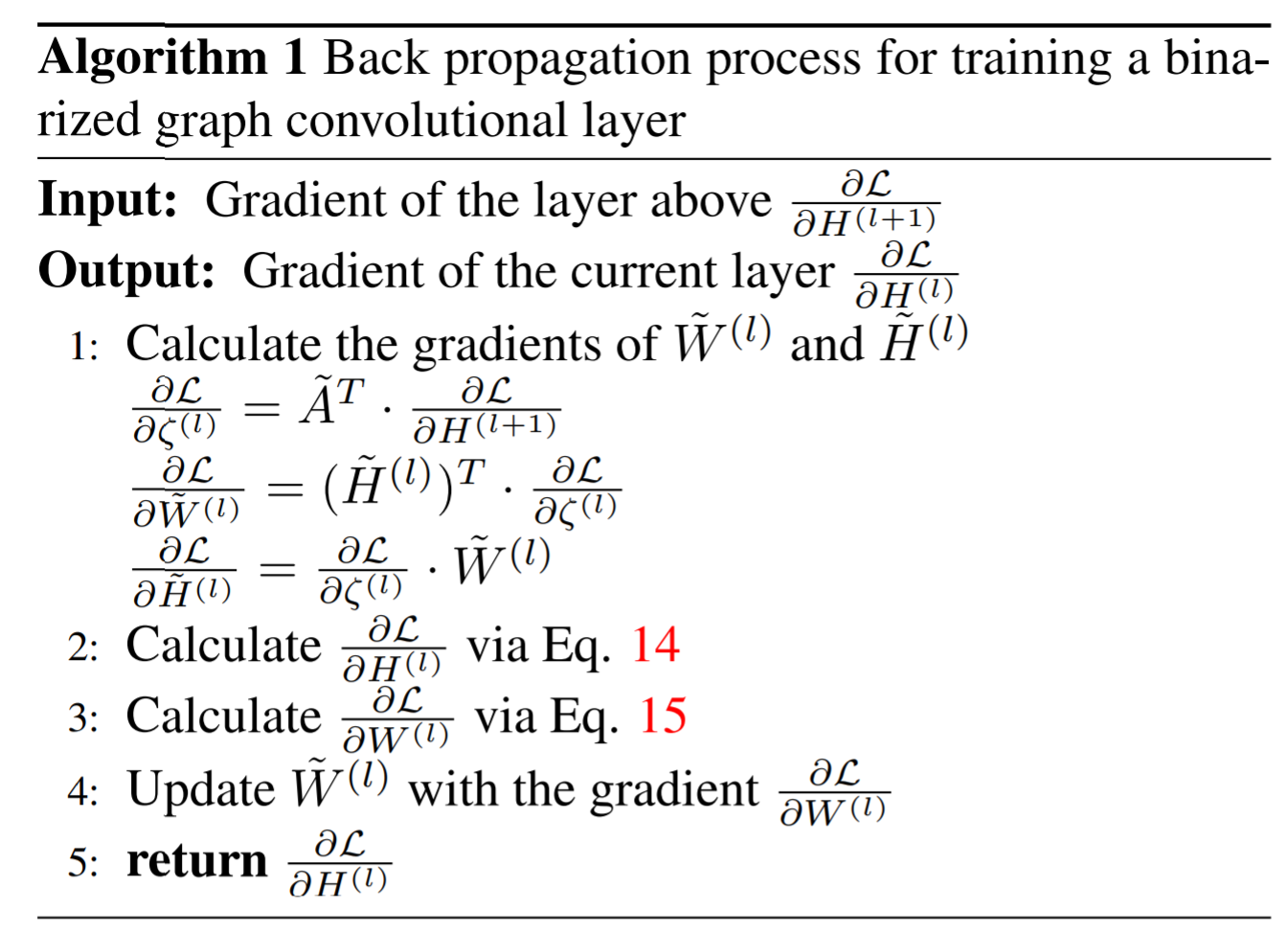

- Binarizes both the network parameters and input node features. The original matrices multiplications are revised to binary operations for accelerations. Design a new gradient approximation based back-propagation to train the proposed Bi-GCN.

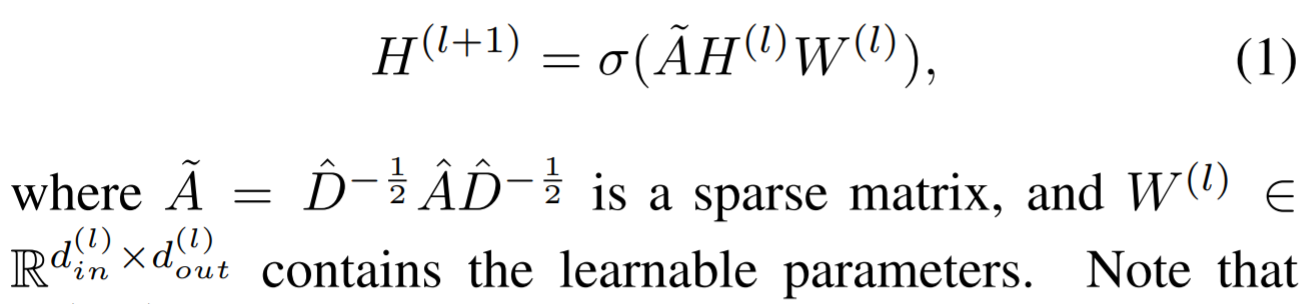

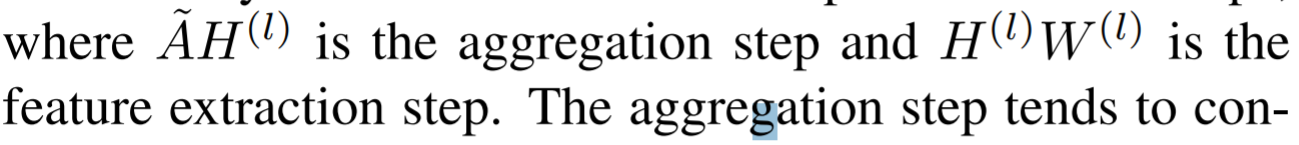

GCN

Use task-dependent loss function, e.g. the cross-entropy.

Bi-GCN

Only focus on binarizing the feature extraction step, because the aggregation step possesses no learnable parameters and it only requires a few calculations. To reduce the computational complexities and accelerates the inference process, the XNOR and bit count operations are utilized.

Binarization of the feature extraction step

Binarization of the parameters

Each column if the parameter matrix is splitted as a bucket, and \(\alpha\) maintain the scalars for each bucket.

Binarization of the node features

Processed by the graph convolutional layers.

- Split the hidden state at layer \(l\) into row buckets based on the constraints of the matrix multiplication.

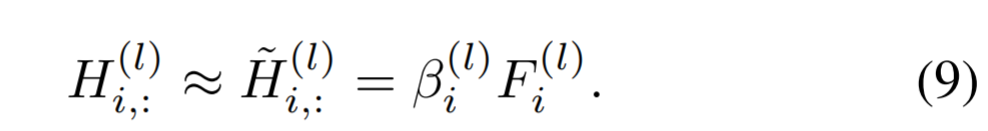

- Let \(F^{(l)}\) be the binarized buckets, the binary approximation of \(H^{(l)}\) can be obtained via

- \(\beta\) can be considered as the node-weights for the features representations. Each element of \(F^{(l)}\) and \(B^{(l)}\) is either -1 or 1.

- This Binarization also possesses the ability of activation, therefore the activation operations can be eliminated.

Binary gradient approximation based back propagation

Paper 8: An Attractor-Guided Neural Networks for Skeleton-Based Human Motion Prediction

Why

- Most existing methods tend to build the relations among joints, where local interactions between joint pairs are well learned. However, the global coordination of all joints, is usually weakened because it is learned from part to whole progressively and asynchronously.

- Most graphs are designed according to the kinematic structure of the human to extract motion features, but hardly do they learn the relations between spatial separated joint pairs directly.

- Except for speed, other dynamic information like accelerated speed are not counted into previous work, which ignores important motion information.

- Previous work

- Human motion prediction

- Many works suffer from discontinuities between the observed poses and the predicted future ones.

- Consider global spatial and temporal features simultaneously, such as transform temporal space to trajectory space to take the global temporal information into account.

- Joint relation modeling

- Focus on skeletal constraints to model correlation between joints.

- adaptive graph: the existed works weak the global coordination of all joints since they are learned from parts.

- Dynamic representation of skeleton sequence

- Many attempts proposed to extract enriching dynamic representation from raw data, but they only extract the dynamics from neighbor frames

- Extract the dynamic features among frames through multiple timescale will extract more motion features.

- Human motion prediction

Goal

To characterize the global motion features and thus can learn both the local and global motion features simultaneously.

Generate predicted poses through proposed framework AGN and the historical 3D skeleton-based poses.

How

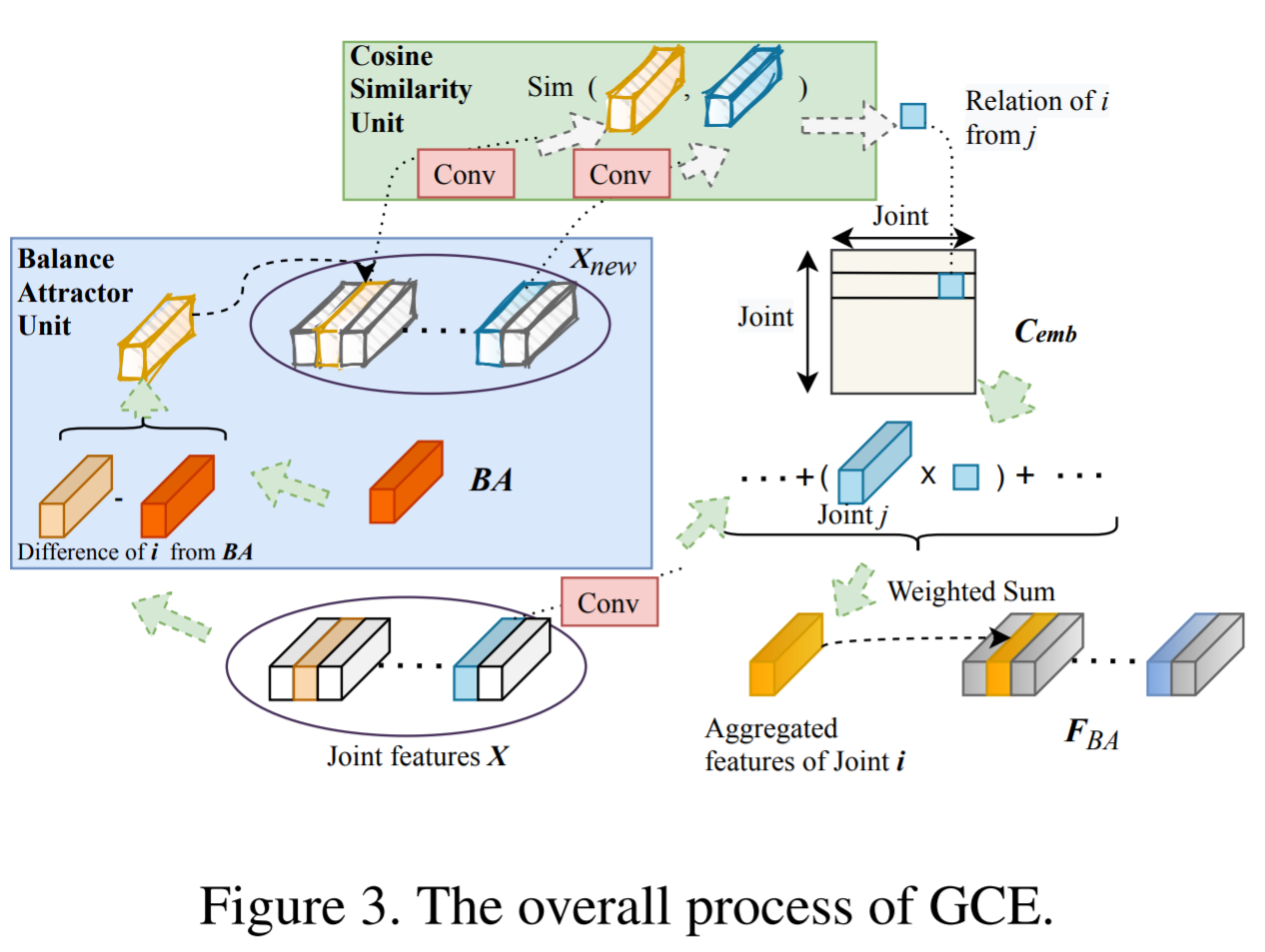

- Pipeline: A BA (balance attractor) is learned by calculating dynamic weighted aggregation of single joint feature. Then the difference between the BA and each joint feature is calculated. Later the resulting new joint features are used to calculate joints similarities to generate final joint relations.

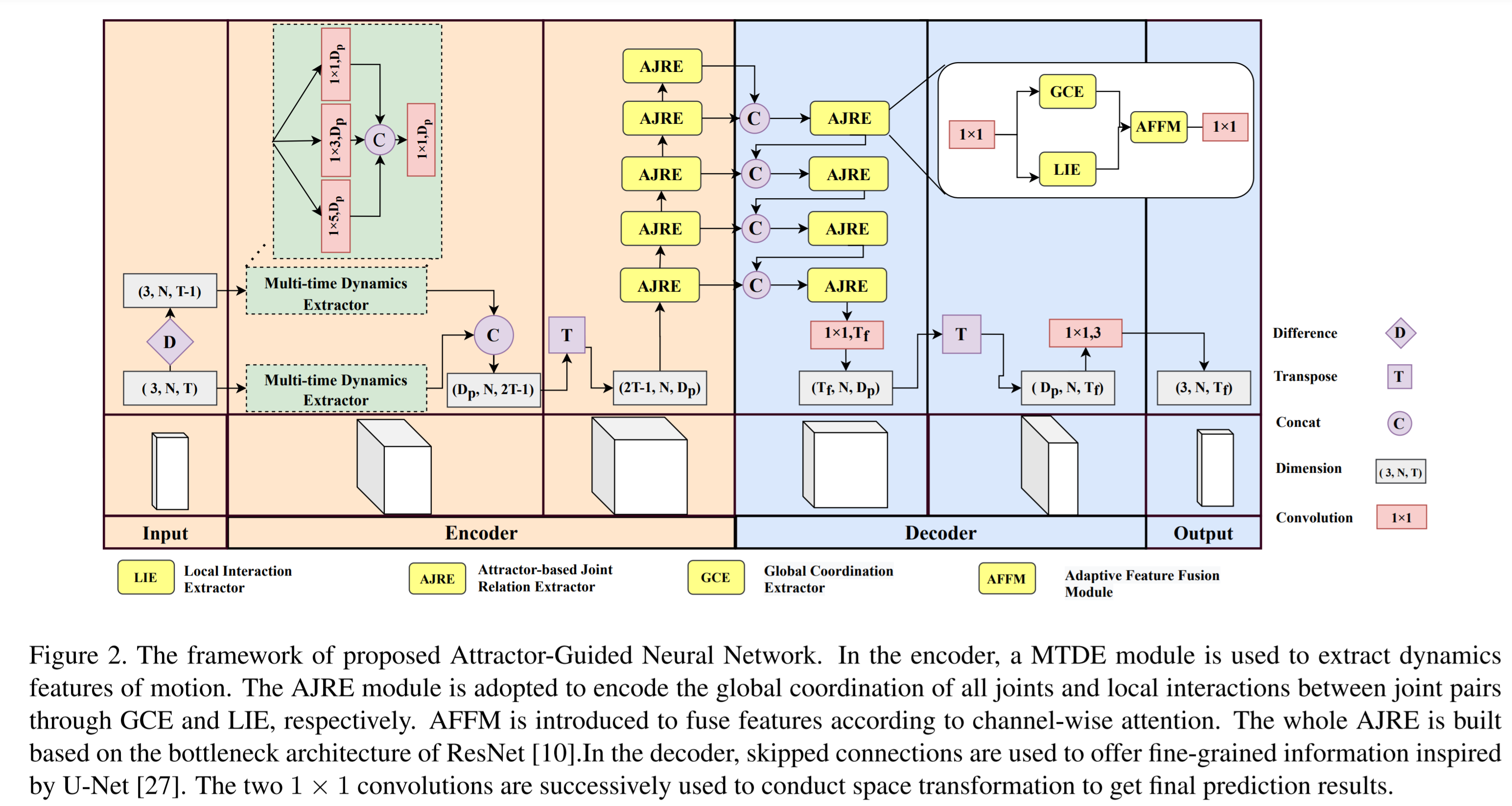

- Framework: Attractor-Guided Neural Network, which first learn an enriching dynamic representation from raw position information adaptively through MTDE (multi-timescale dynamics extractor). Then the AJRE (attractor-based joint relation extractor) is imported , including a LIE (local interaction extractor), a GCE (global coordination extractor) and an adaptive feature fusing module.

- AJRE: a joint relation modeling = GCE+LIE. The GCE models the global coordination of all joints, while LIE mines the local interactions between joint pairs.

- MTDE: extract enriching dynamic information from raw input data for effective prediction.

MTDE

A combination of different time scales motion dynamics

Two stream, one path is the raw input poses, the other is the difference between adjacent frames in raw input. The dynamics of each joint separately is also modeled to avoid the interference of other joints.

The MTDE uses three 1DCNN but in different kernel size (5,3,1) to extract the local (joint-level) dynamics.

AJRE

- Consists of GCE and LIE to separately model global coordination of all joints and local interactions between joint pairs, and also AFFM which is used to fuse features according to channel-wise attention to improve the flexibility of joint relation modeling.

- GCE and LIE work in parallel, and they are followed by AFFM.

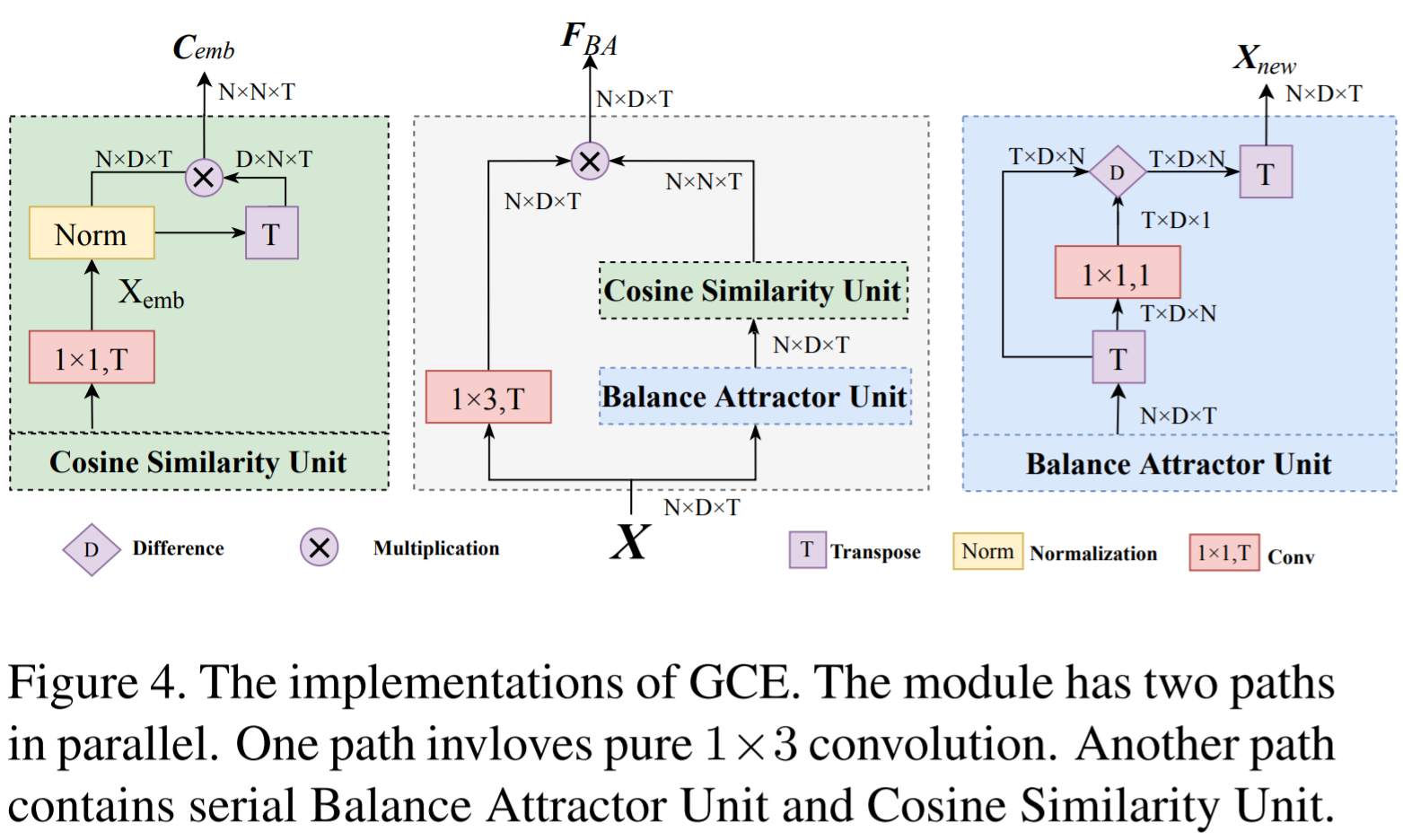

GCE

Global coordination of all joints, so they learn a medium to build new joint relations indirectly.

- BA (balance attractor unit) unit calculates all joints' aggregation to characterize the global motion features. After transpose the input features, then BA unit applies \(1\times 1\) conv to get a dynamic weighted feature aggregation of N joints features. And \(X_{new}\) is the difference between the output features of BA and the original \(X\).

- The new relations of all joints is built by the Cosine similarity unit, which measure between \(X_{new},X\). The cosine similarity between all row vector pairs to illustrate the correlation between joint pairs. The correlation matrix on each channel is calculated since each channel encodes specific spatiotemporal features and should focus on different correlations compared with other channels.

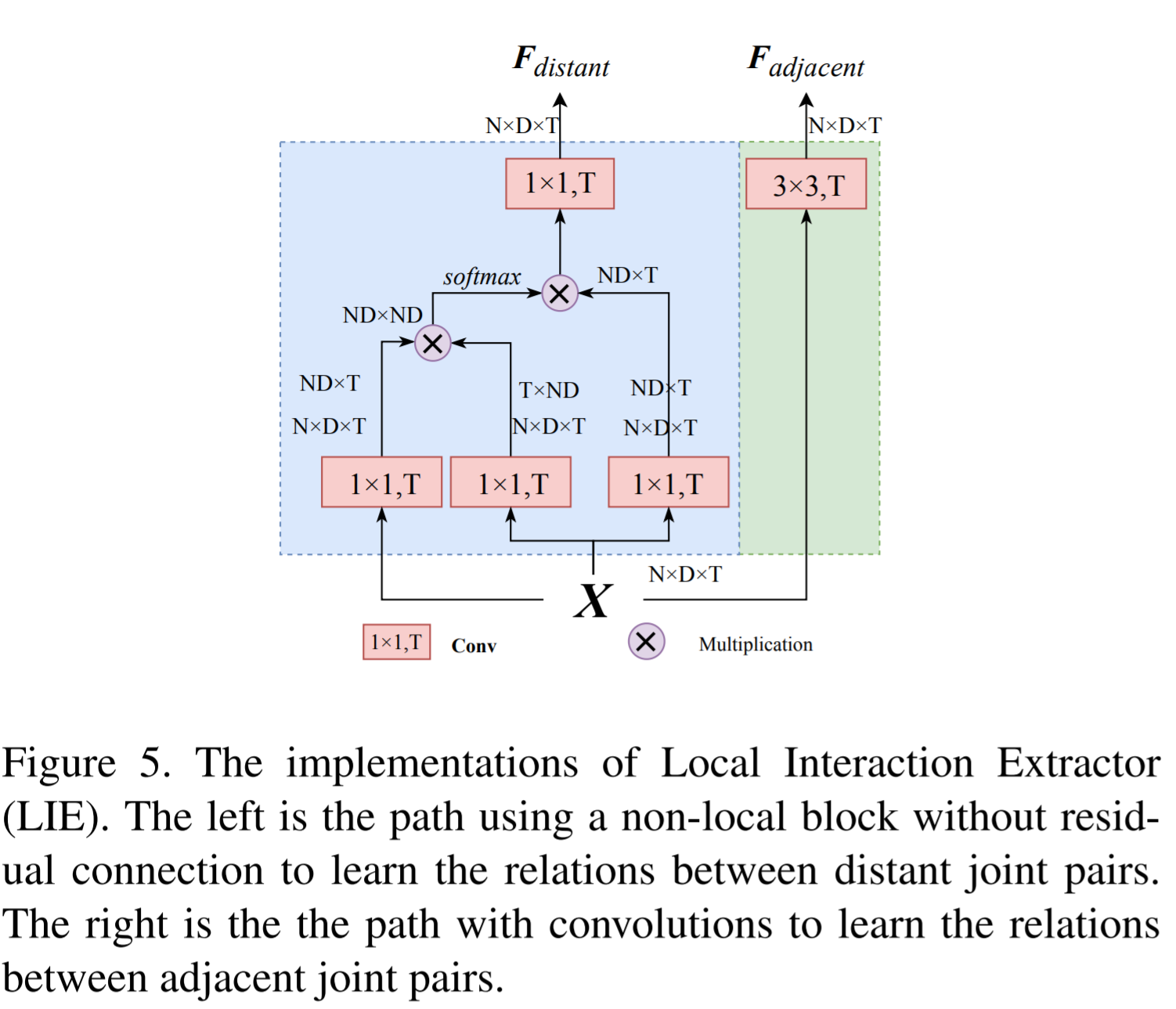

LIE

- It's used to learn local interactions between joint pairs, including adjacent and distant joints.

- To learn the relations between adjacent joint pairs, a pure \(3\times 3\) convolution is adopted. To learn the relations between distant joint pairs, the self-attention is used.

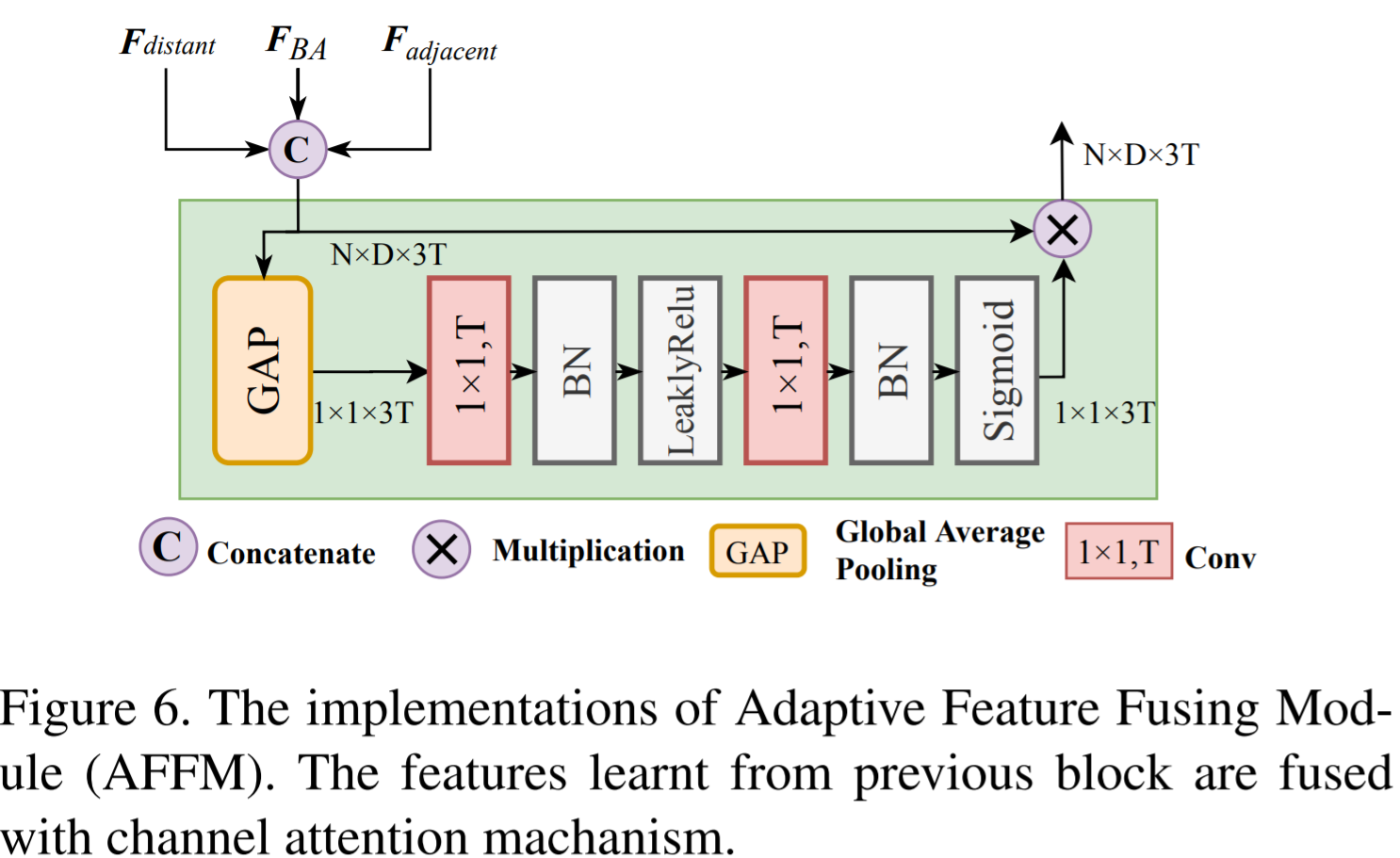

AFFM

- Channel attention to fuse features adaptively and reform more reliable representation.

- After the sigmoid in the AFFM unit, the importance ratio of each channel is obtained. Then the channel-wise multiplication between ratio and raw input is done to reform features.

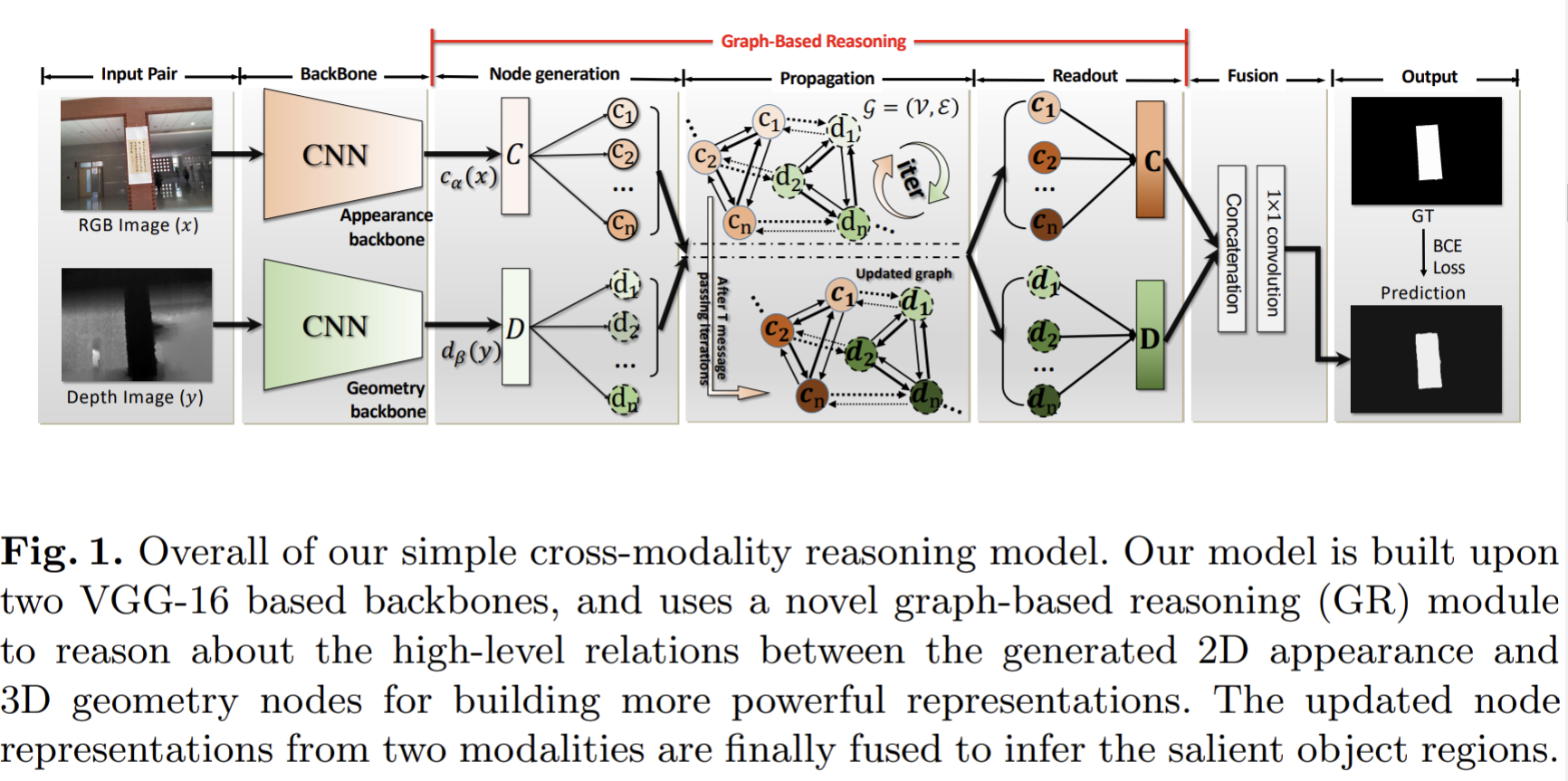

Paper 9: Cascade Graph Neural Networks for RGB-D Salient Object Detection

Codes: https://github.com/LA30/Cas-Gnn

Why

- How to leverage the two complementary data sources: color and depth information

- Current works either simply distill prior knowledge from the corresponding depth map for handling the RGB-image or blindly fuse color and geometric information to generate the coarse depth-aware representations, hindering the performance of RGB-D saliency detectors

- Identify saliency objects of varying shape and appearance, show robustness towards heavy occlusion, various illumination and background.

- Network cascade is an effective scheme for a variety of high-level vision applications. It will ensemble a set of models to handle challenging tasks in a coarse-to-fine or easy-to-hard manner.

Goal

Salient object detection for RGB-D images. To distill and reason the mutual benefits between the color and depth data sources through a set of cascade graphs.

Predict a saliency Map given an input image and its corresponding depth image.

How

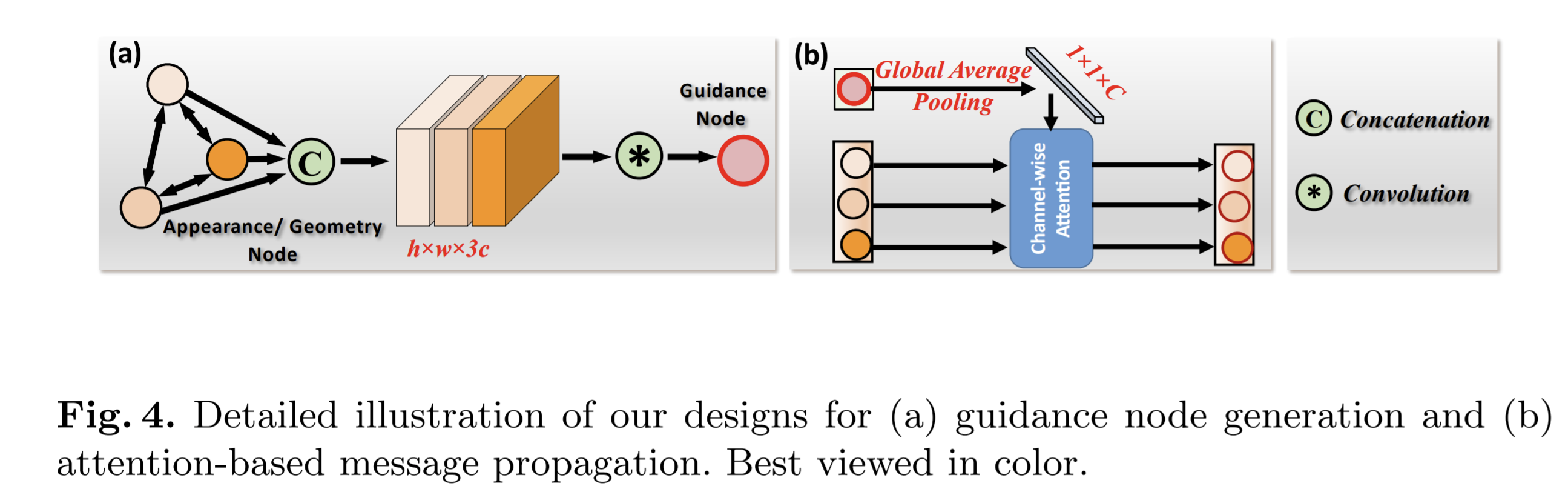

- CGR (cascade graph reasoning ) module to learn dense features, from which the saliency map can be easily inferred. It explicitly reasons about the 2D appearance and 3D geometry information for RGBD SOD.

- Each graph consists of two types of nodes, geometry nodes storing depth features and appearance nodes storing RGB-related features.

- Multiple-level graphs sequentially chained by coarsening the preceding graph into two domain-specific guidance nodes for the following cascade graph.

Cross-modality reasoning with GNNs

- Build a directed graph, where the edges connect i) the nodes from the same modality but different scales and ii) the nodes of the same scale from different modalities.

- The backbone is VGG-16 plus dilated network technique, which will extract 2D appearance representations and 3D geometry representations. They also propose a graph-based reasoning (GR) module to reason about the cross-modality, high-order relations between them.

- GRU module

- Input 2D features and 3D features.

- gated recurrent unit for node state updating

Cascade Graph Neural networks

To overcome the drawbacks of independent multilevel (graph-based) reasoning, propose cascade GNNs.

coarsening the preceding graph into two domain-specific guidance nodes for the following cascade graph to perform the joint reasoning

The guidance nodes only deliver the guidance information, and will stay fixed during the message passing process.

The guidance node is built by firstly concatenation and then the fusion via \(3\times 3\) convolution layer.

Each guidance node propagates the guidance information to other nodes of the same domain in the graph through the attention mechanism.

Multi-level feature fusion: The merge function is either element-wise addition or channel-wise concatenation.

Paper 10: Coarse-Fine Networks for Temporal Activity Detection in Videos

Code: https://github.com/kkahatapitiya/Coarse-Fine-Networks

Why

- One main challenge for video representation learning is capturing long-term motion from a continuous video.

- Use of frame striding or temporal pooling has been a successful strategy to cover a larger time interval without increasing the number of parameters

- Previous work

- Action localization: temporal action localization task, which tends to annotate every frame with multiple ongoing activities. Use of sequential models such as LSTMs have been popular.

- Dynamic sampling: selective processing of information, like spatially, temporally or spatio-temporally sampling.

- Two challenges of the network

- how to abstract the information at a lower temporal resolution meaningfully, and

- how to utilize the fine-grained context information effectively.

Goal

learn better video representations for long-term motion, works in multiple temporal resolutions of the input and selects frames dynamically.

How

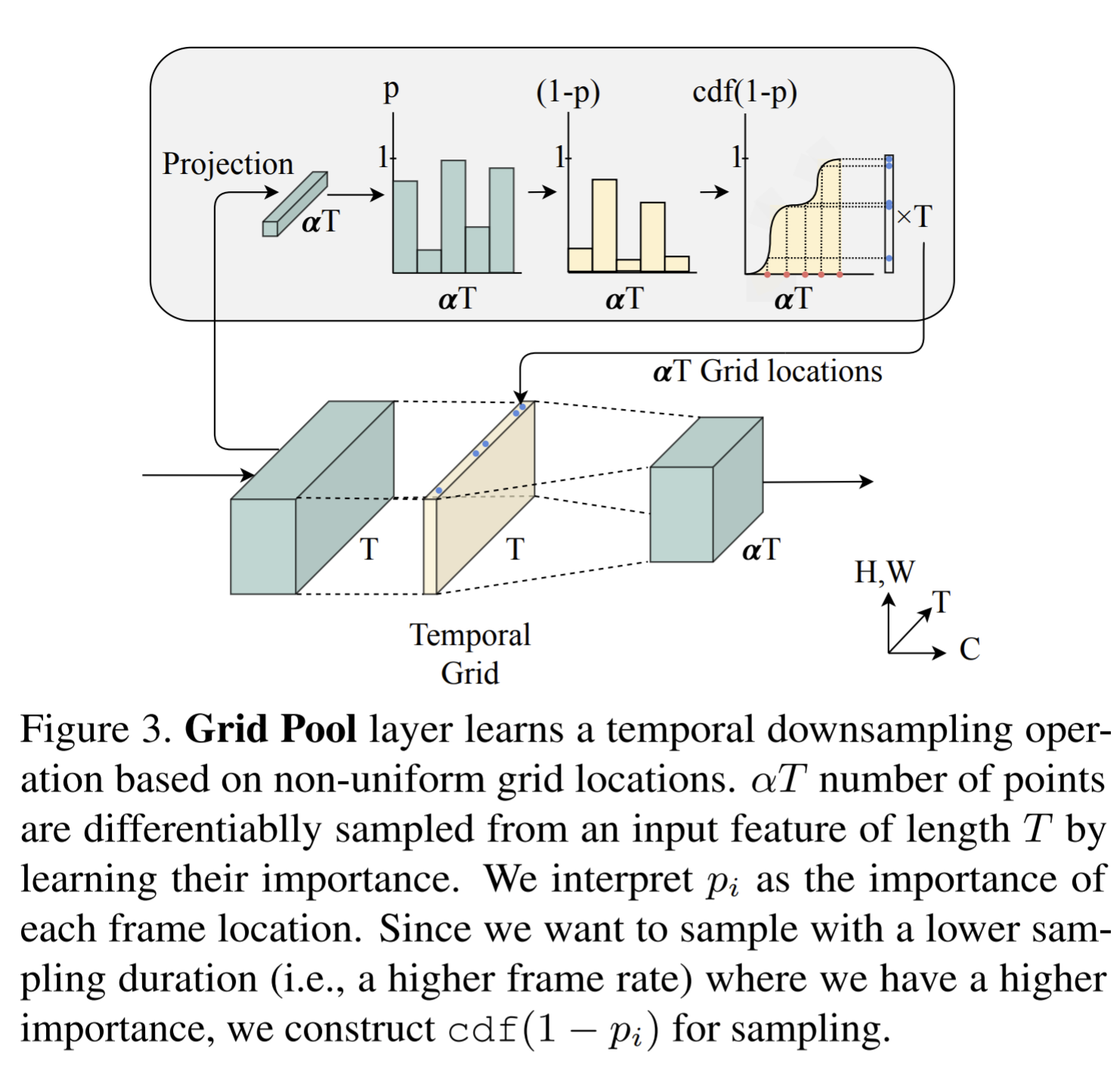

- Grid Pool, a learned temporal downsampling layer to extract coarse features, which adaptively samples the most informative frame locations with a differentiable process.

- Multi-stage Fusion, a spatio-temporal attention mechanism to fuse a fine-grained context with the coarse features.

Grid pool

- Samples by interpolating on a non-uniform grid with learnable grid locations. The intuition comes from that sampling frames at a higher frame rate where the confidence is high and at a lower frame rate where it is low. The stride between the interpolated frame locations should be small and vice-versa.

- The confidence value is modeled as a function of the input representation.

- To get a set of \(\alpha T\) (an integer) grid locations based on confidence values, the CDF is considered.

- When a grid location is non-integer, the corresponding sampled frame is a temporal interpolation between the adjacent frames.

- A grid unpool operation is coupled with the grid locations learned by the Grid pool layer, which simply performs the inverse operation of the Grid pool. In this way, one will resamples with a low frame-rate in the regions where one used a high frame-rate in Grid pool, and vice-versa.

Multi-stage fusion

- Fuse the context from the fine stream and the coarse stream. Aims: filter out what fine-grained information should be passed down to the coarse stream, have a calibration step to align the coarse features and fine features, learn and benefit from multiple abstraction-levels of fine-grained context at each fuse-location in the coarse stream

- filtering fine-grained information: self-attention mask by processing the fine feature through a lightweight head consists of point-wise convolutional layers followed by a sigmoid non-linearity.

- Fine to coarse correspondence: use a set of temporal Gaussian distributions centered at each coarse frame location which abstract a location dependent weighted average of the fine feature.

- Multiple abstraction-levels: allow each fusion connection to look at the features from all abstraction levels by concatenating them channel-wise. The scale and shift features at each fusion location is calculated to finally fuse the features from any abstraction-levels.

Model details

- Backbone: X3D, which follows ResNet structure but designed for efficiency in video models.

- The coarse stream takes in segmented clips of \(T=64\) frames to follow the standard X3D architecture after the Grid pool later during training, while the fine stream always process the entire input clip.

- The main difference between the coarse and the fine stream is the Grid pool layer and the corresponding grid unpool operation.

- The grid pool later is placed after the 1st residual block.

- The peak magnitude of each mask is normalized to 1.

- The standard deviation \(\sigma\) is set to be \(\frac{T'}{8}\), empirically.

Paper 11: CoCoNets: Continuous Contrastive 3D Scene Representations

https://mihirp1998.github.io/project_pages/coconets/

Why

- Combine 3D voxel grids and implicit functions and learn to predict 3D scene and object 3D occupancy from a single view with unlimited spatial resolution

Goal

SSL learning of amodal 3D feature representations from RGB and RGBD posed images and videos, and finally generate the representations to help object detection, object tracks or visual correspondence.

Trained for contrastive view prediction by rendering 3D feature clouds in queried viewpoints and matching against the 3D feature point cloud predicted frim the query view.

Finally, the model forms plausible 3D completions of the scene given a single RGB-D image as input.

How

- 3d feature grids as a 3D-informed neural bottleneck for contrastive view prediction, and implicit functions for handling the resolution limitations of 3D grids.

- Propose CoCoNets (continuous contrastive 3D networks) that learns to map RGB-D images to infinite-resolution 3D scene representations by contrastively predicting views. Specifically, the model is trained to lift 2.5D images to 3D feature function grids of the scene by optimizing for view-contrastive prediction

- There are two branches in CoCoNet, one is to encode RGB-D images into a 3D feature map, and the other is to encode the RGB-D of the target viewpoint into a 3D feature cloud.

- Two branches, one adopts top-down idea which encodes the input RGB-D image and orienting the feature map to the target viewpoint, and predict the features for the target 3D points in target domain by querying, and later output a feature cloud for the target domain. The other branch is the bottom-up one, which simply encodes the target RGB-D image and predict the features for the target 3D position in target domain, obtaining the feature cloud for target domains

- The positive samples are from the target domain that works in bottom-up branch.

Result

- The scene representations learnt by CoCoNets can detect objects in 3D across large frame gaps

- Using the learnt 3D point features as initialization boosts the performance of the SOTA Deep Hough Voting detector.

- The learnt 3D feature representations can infer 6DoF alignment between the same object in different viewpoints, and across different objects of the same category.

- optimize a contrastive view prediction objective but uses a 3D girder of implicit functions as its latent bottleneck.

Paper 12: CutPaste: Self-Supervised Learning for Anomaly Detection and Localization

Codes: https://github.com/Runinho/pytorch-cutpaste

Why

- Difficult to obtain a large amount of anomalous data, and the difference between normal and anomalous patterns are often fine-grained.

- The anomaly score defined as an aggregation of pixel-wise reconstruction error or probability densities lacks to capture a high-level semantic information.

- deep one-class classifier outperforms, but most existing work focus on detecting semantic outliers, which cannot generalize well in detecting fine-grained anomalous patterns as in defet detection.

- Naively applying existed methods such as rotation prediction or contrastive learning, is sub-optimal for detecting local defects.

- Rotation and translation ect. lacks of irregularity.

Goal

- detects unknown anomalous patterns of an image without anomalous data

- Design a pretext task that can identify local irregularity.

- The pretext task is also amenable to combine with existing methods, such as transfer learning from pretrained models for better performance or patch-based models for more accurate localization

How

- Learn representations by classifying normal data from the CutPaste (data augmentation strategy that cuts an image patch at a random location of a large image). First learn SS deep representations and then build a generative one-class classifier.

- transfer learning on pretrained representations on ImageNet.

- designing a novel proxy classification task between normal training data and the ones augmented by the CutPaste. The CutPaste motivated to produce a spatial irregularity to serve as a coarse approximation of real defects.

- A two-stage framework to build an anomaly detector, where in the first stage they learn deep representations from normal data and then construct an one-class classifier using learned representations.

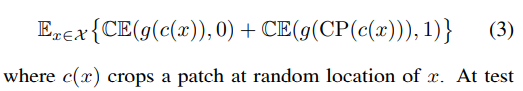

SSL with CutPaste

- CutPaste augmentation as follows:

- Cut a small rectangular area of variable sizes and aspect ratios from a normal training image.

- Optionally, we rotate or jitter pixel values in the patch.

- Paste a patch back to an image at a random location

- In practice , data augmentation or color jitter, are applied before feeding x into g or CP.

CutPaste variants

- CutPaste scar: a long-thin rectangular box filled with an image patch

- Multi-class classification: formulate a finer-grained 3-way classification task among normal, CutPaste and CutPaste-Scar by treating CutPaste variants as two separate classes.

- Similarity between CutPaste and real defects: outliers exposure. CutPaste creates examples preserving more local structures of the normal examples, while is more challenging for the model to learn to find this irregularity.

- CutPaste does look similar to some real defects.

Computing anomaly score

A simple Gaussian density estimator whose log-density is computed as follows

Localization with patch representation

CutPaste prediction is readily applicable to learn a patch representation – all we need to do at training is to crop a patch before applying CutPaste augmentation.

Paper 13: Discriminative Latent Semantic Graph for Video Captioning

https://github.com/baiyang4/D-LSG-Video-Caption

Why

- Key challenge of the video captioning task: no explicit mapping between video frames and captions, and the output sentence should be natural

- GNNs show particular advantages in modeling relationships between objects, but they don't jointly consider the frame-based spatial-temporal contexts in the entire video sequence

- discriminative modeling for caption generation suffers from stability issues and requires pre-trained generators.

- Traditional GNNs for video captioning cannot take adequate information into consideration, while the work of this paper (conditional graph) jointly consider objects, contexts and motion information at both region and frame levels.

Goal

- Video captioning

- Encode-decoder frameworks cannot explicitly explore the object-level interactions and frame-level information from complex spatio-temporal data to generate semantic-rich captions.

- Contributions on three key sub-tasks in video captioning

- Enhanced object proposal: propose a novel conditional graph that can fuse spatio-temporally information into latent object proposal.

- visual knowledge: latent proposal aggregation to dynamically extract visual words

- sentence validation: a novel discriminative language validator

- Propose D-LSG, where the graph model for feature fusion from multiple base models, the latent semantic refers to the higher-level semantic knowledge that can be extracted from the enhanced object proposals. The discriminative module is designed as a plug-in language validator, which uses the Multimodal Low-rank Bi-linear (MLB) pooling as metrics.

How

- a semantic relevance discriminative graph based on Wasserstein gradient penalty.

- Modeled as a sequence to sequence process.

Architecture Design

- Multiple feature extraction: use 2D CNNs for appearance features and 3D CNNs for motion features. Then these two features are concatenated and apply LSTM on them.

- Enhanced object proposal: enhanced by their visual contexts of appearance and motion respectively, which result in enhanced appearance proposals and enhanced motion proposal, together these two form the enhanced object proposals.

- Visual knowledge: latent semantic proposals as \(K\) dynamic visual words, after introducing the dynamic graph built by LPA to summarize the enhanced appearance and motion features.

- Language decoder: language generation decoder will take the visual knowledge extracted by the LPA to generate captions. it consists of an attention LSTM for weighting dynamic visual words and a language LSTM for caption generation.

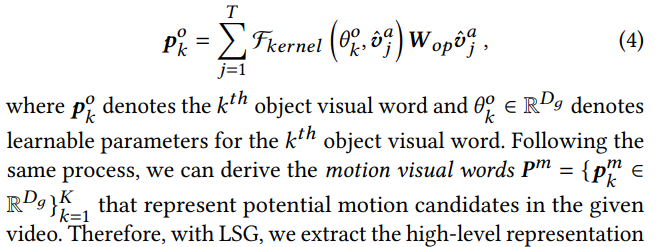

Latent Semantic graph

conditional graph operation : model the complex object-level interactions and relationships, and learn informative object-level features that are in context of frame-based background information.

- To build the graph, each region feature is regarded as a node. During message passing, the enhanced appearance proposal and object-level region features are handled with a kernel function to encode relations between them. The kernel is defined by linear functions followed by Tanh activation function.

LPA

- to further summarize the enhanced object proposals

- propose a latent proposal aggregation method to generate visual words dynamically based on the enhanced features.

- Introduce a set of object visual words, which means potential object candidates in the given video, and then they summarize the enhanced proposals into informative dynamic visual words.

Discriminative language validation

- The module is designed to as a language validation process that encourages the generated captions to contain more informative Semantic concepts via reconstructing the visual words or knowledge based on the input sentences under the condition of corresponding true visual words encoded by LSG.

- Use WGAN-GP

- Extract sentence features from given captions by 1DCNN,

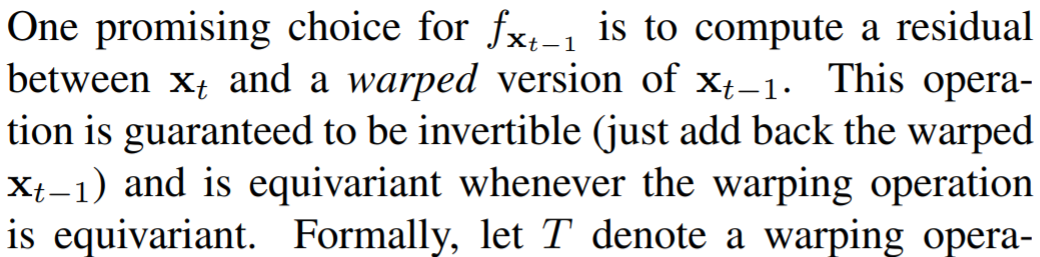

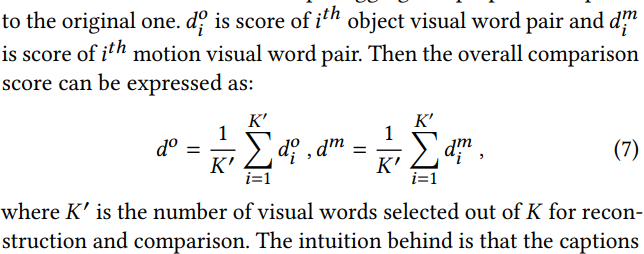

- The output of the discriminative model is weighted since sentences have different proportions of object and motion concepts

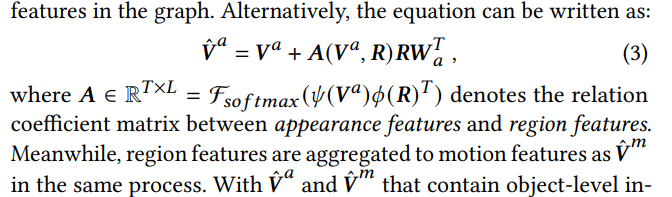

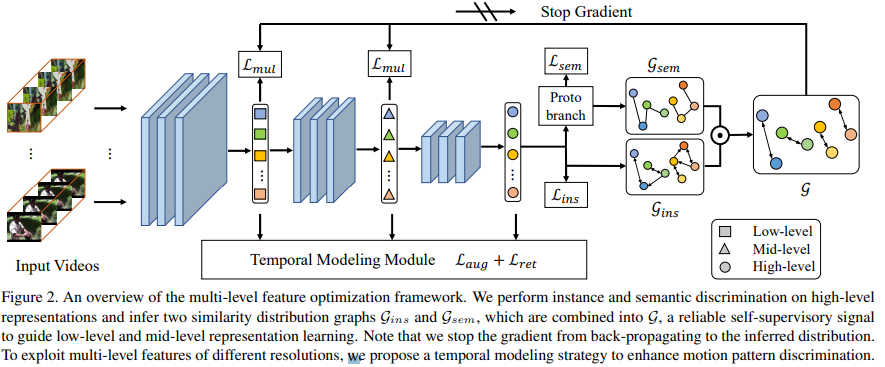

Paper 14: Enhancing Self-supervised Video Representation Learning via Multi-level Feature Optimization

https://github.com/shvdiwnkozbw/Video-Representation-via-Multi-level-Optimization

Why

- most recent works have mainly focused on high-level semantics and neglected lower-level representations and their temporal relationship

- The requirement of developing unsupervised video representation learning without resorting to manual labeling

- Drawbacks

- previous works only explore either instance-wise or semantic-wise distribution, lacking a comprehensive perspective over both sides.

- less effort has been placed on low-level features than high-level representations, while the former is proven critical for knowledge transfer

- Third, directly performing temporal augmentations, e.g., shuffle and reverse, at input level instead of feature level could impair feature learning

- High-level features are more representative towards instances or semantics but less feasible towards cross-task transfer, while low-level features are transfer-friendly but lack structural information over samples.

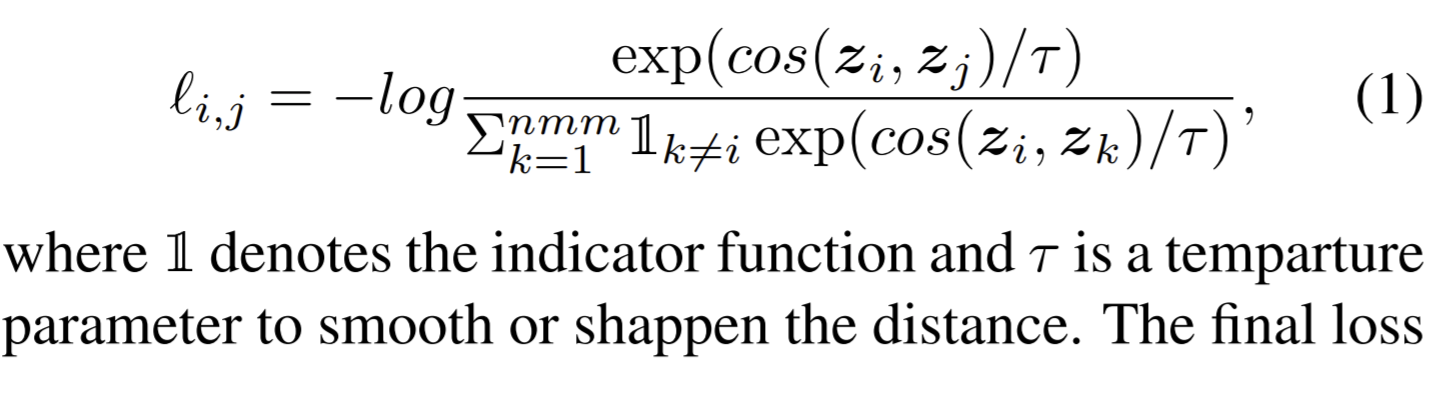

- a line of works expanded contrastive learning pipeline to video domain

- InfoNCE loss for dense future prediction

- the temporal information in videos is not well leveraged

- require a simple yet effective operation to apply temporal augmentations on extracted multi-level features

Goal

- proposes a multi-level feature optimization framework to improve the generalization and temporal modeling ability of learned video representations

- avoids forcing the backbone model to adapt to unnatural sequences which corrupts spatiotemporal statistics.

- Jointly consider the instance and semantic-wise similarity distribution to form a reliable SS signal.

How

- high-level features obtained from naive and prototypical contrastive learning are utilized to build distribution graphs

- devise a simple temporal modeling module from multi-level view features to enhance motion pattern learning.

- For low-level representation, apply temporal augmentation on multi-level features to construct contrastive pairs that have different motion patterns with the objective designed to distinguish the augmented samples and original ones. And one retrieval task is proposed to match the features in short and long time spans based on their semantic consistency.

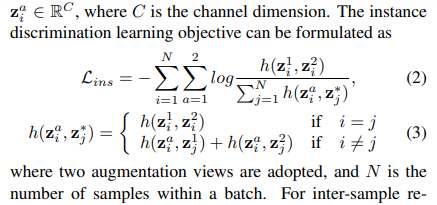

Beyond instance discrimination

- The one-hot labels in InfoNCE loss neglect the relationship between different samples. But there exist some negative samples that may share similar characteristics.

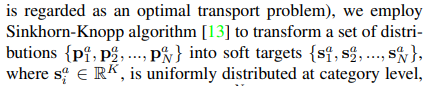

- besides instance-wise discrimination, we explicitly develop another branch on the projected high-level feature vectors for inter-sample relationship modeling.

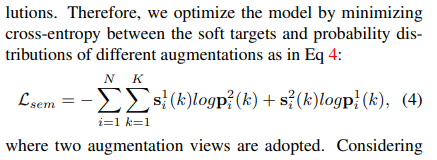

- design a queue to store the semantic-wise distributions from previous batches to ensure equal partition into K prototypes, but using only those from the current batch for gradient back-propagation

- Finally, we jointly leverage \(\mathcal{L}_{ins}\) and \(\mathcal{L}_{sem}\) to form the self-supervisory objective for high-level representations:

Graph constraint for multi-level features

- It is the lower-level features that mainly transfer from the pretrained network to downstream tasks. One can infer instance- and semantic-wise distribution from high-level features.

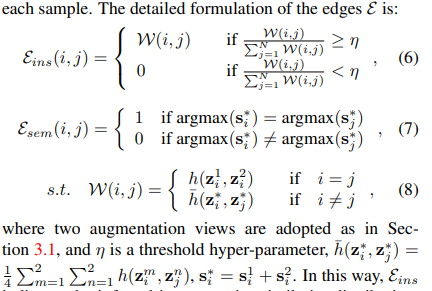

- Denote the instance-wise similarity distribution as a directed graph \(\mathcal{G}_{ins}\), and semantic-wise distribution as an undirected graph \(\mathcal{G}_{sem}\). Each graph contains N nodes representing N different samples within a batch, and edges indicating the relationship between each sample.

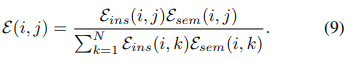

- \(\mathcal{E}_{ins}\) indicates the inferred instance-wise similarity distribution, which respects inter-sample relationship and is more realistic data distribution than one-hot encoding. \(\mathcal{E}_{sem}\) to truncate the edges between nodes of different pseudo categories.

- Jointly leverage \(\mathcal{G}_{ins}\) and \(\mathcal{G}_{sem}\) to form the combined graph \(\mathcal{G}\), whose edge weights serve as the final soft targets:

- The cross-entropy between \(\mathcal{E}\) and inferred similarity distribution to optimize lower-level features.

Temporal modeling

Use the temporal information at diverse time scales to enhance motion pattern modeling since the features at different layers possess different temporal characteristic.

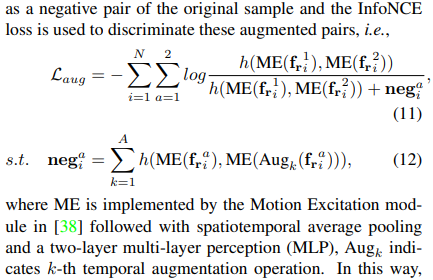

A robust temporal model requires two aspects: semantic discrimination between different motion patters; semantic consistency under different temporal views.==> Two learning objectives

perform temporal augmentation on multi-level features \(\mathrm{f}_r\), and then leverage a lightweight motion excitation module to extract motion enhanced feature representations

Temporal transformations that result in semantically inconsistent motion patterns can be regarded as a negative pair of the original sample

To boost the consistency, they propose to match feature of a specific timestamp from sequences of different lengths. For one short sequence \(v_s\) that is contained in a long sequence \(v_l\), they retrieve the feature at each timestep of \(v_s\) in the feature set of \(v_l\). The feature of corresponding timestamp in \(v_l\) serves as the positive key, while others serve as negatives.

Paper 15: Exploring simple siamese representation learning

codes: https://github.com/facebookresearch/simsiam

Why

- Siamese networks are natural tools for comparing (including but not limited to “contrasting”) entities

- Recent methods define the inputs as two augmentations of one image, and maximize the similarity subject to different conditions

- An undesired trivial solution to Siamese networks is all outputs “collapsing” to a constant.

- Methods like Contrastive learning, e.g., SimCLR etc. work to fix this.

- Clustering is another way of avoiding constant output. While these methods do not define negative exemplars, this cluster centers can play as negative prototypes.

- BYOL relies only on positive pairs but it does not collapse in case a momentum encoder is used. The momentum encoder is important for BYOL to avoid collapsing, and it reports failure results if removing the momentum encoder

- the weight-sharing Siamese networks can model invariance w.r.t. more complicated transformations

Goal

- report that simple Siamese networks can work surprisingly well with none of the above strategies (contrastive learning, clustering or BYOL) for preventing collapsing

- our method ( SimSiam) can be thought of as “BYOL without the momentum encoder”. Directly shares the weights between the two branches, so it can also be thought of as “SimCLR without negative pairs”, and “SwAV without online clustering”.

- SimSiam is related to each method by removing one of its core components.

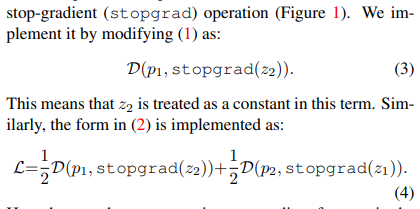

- The importance of stop-gradient suggests that there should be a different underlying optimization problem that is being solved.

How

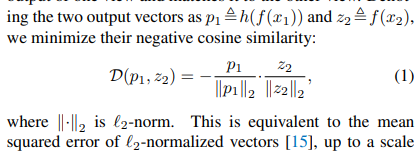

The proposed architecture takes as input two randomly augmented views \(x_1\) and \(x_2\) from an image \(x\). The two views are processed by an encoder network \(f\) consisting of a backbone and a project MLP head. A predict MLP head is denoted as \(h\).

The symmetrized loss is denoted as \(\mathcal{L}=\frac{1}{2}\mathcal{D}(p_1,p_2)+\frac{1}{2}\mathcal{D}(p_2,z_1)\). Its minimum possible value is −1.

the encoder on \(x_2\) receives no gradient from \(z_2\), but gradients from \(p_2\).

Use SGD as optimizer, with a base \(lr=0.05\), the learning rate is \(lr\times \mathrm{BatchSize}/256\).

Use ResNet50 as the default backbone.

Unsupervised pretraining on the 1000-class ImageNet training set without using labels.

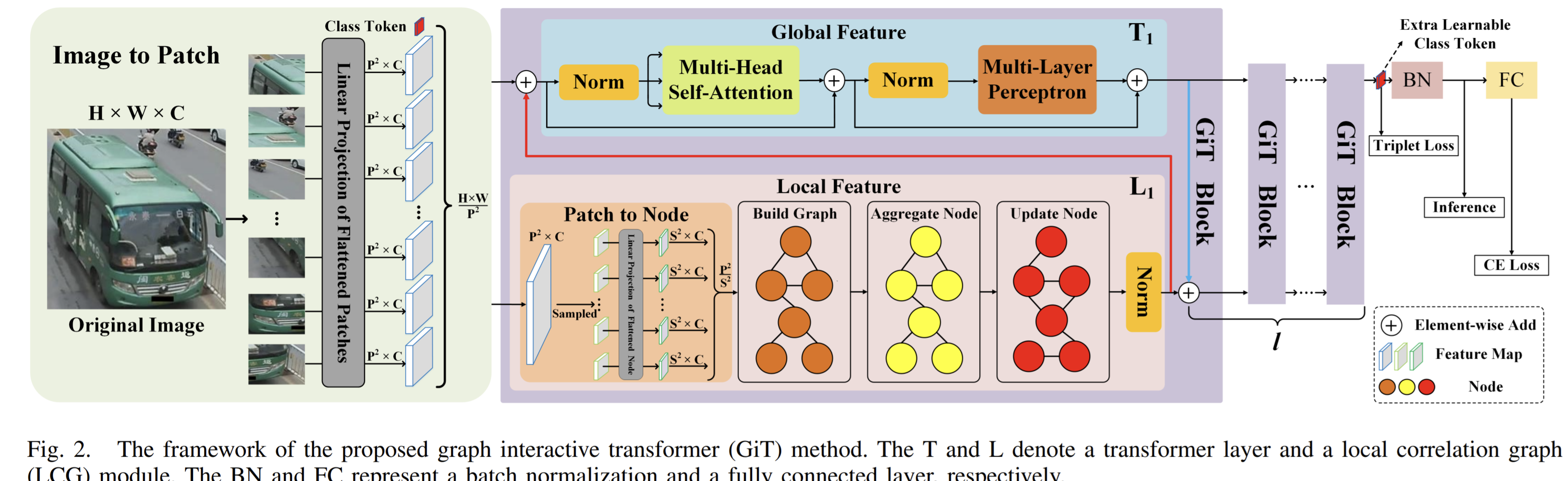

Paper 16: GiT: Graph Interactive Transformer for Vehicle Re-identification

Why

- Vehicle re-identification aiming to retrieve a target vehicle from non-overlapping cameras. But there are challenges

- vehicle images of different identifications usually have similar global appearances and subtle differences in local regions

- The technologies of vehicle re-identification

- Early methods: pure CNNs, fail to catch local information

- Based on CNNs, cooperate part divisions (uniform spatial division suffer from partition misalignment, part detection requires a high cost of extra manual part annotations) to learn global features and local features.

- CNNs cooperate GNNs to learn global and local features: the CNN's downsampling and convolution operations reduce the resolution of feature maps, the CNN and GNN branches are supervised with two independent loss functions and lack interaction.

- This paper: couple global and local features via transformer and local correction graph modules.

- The advantages of transformer

- The transformer can use multi-head attention module to capture global context information to establish long-distance dependence on global features of vehicles.

- The multi-head attention module of transformer does not require convolution and down-sampling operations, which retain more detailed vehicle information.

Goal

Propose a graph interactive transformer (GiT) for vehicle-reidentification. Each GiT block employs a novel local correlation graph (LCG) module to extract discriminative local features within patches.

LCG Modules and transformer layers are in a coupled status.

How

- The transformer later and LCG module interact each other by skip connection when these two components work in sequence.

LCG module

To aggregate and learn discriminative local features within every patch.

flatten \(n\) local features \(d\) dimensions and map to \(d'\) dimensions with a trainable linear projection in every patch

The spatial graph's edges are constructed as \(E_{v_{i,j}}=\frac{\exp (F_{cos}(v_i,v_j))}{\sum\limits_{k=1}^{n}\exp {(F_{cos}(v_i,v_k))}}\), where \(i,j\in[1,2,...,n]\). The score of the cosine distance is denoted as \(F_{cos}=\frac{v_i,v_j}{\|v_i\|\|v_j\|}\).

To aggregate and update nodes, the aggregation node \(U\) of \(i\)-th graph is updated according as follows

\(U=(D^{-\frac{1}{2}}AD^{-\frac{1}{2}}X_i)\cdot W\),

Then \(U\) is processed non-linearly as

\(O=GELU(LN(U))\), where GELU represents the gaussian error linear units and LN denotes the layer normalization.

Transformer layer

- Model the global features between the different patches.

- Patches are the input for multi-head attention layer

- Later, the output from the attention layer is normalized and then processed by MLP.

Graph interactive Transformer

- Each GiT block consists of a LCG module and a Transformer layer.

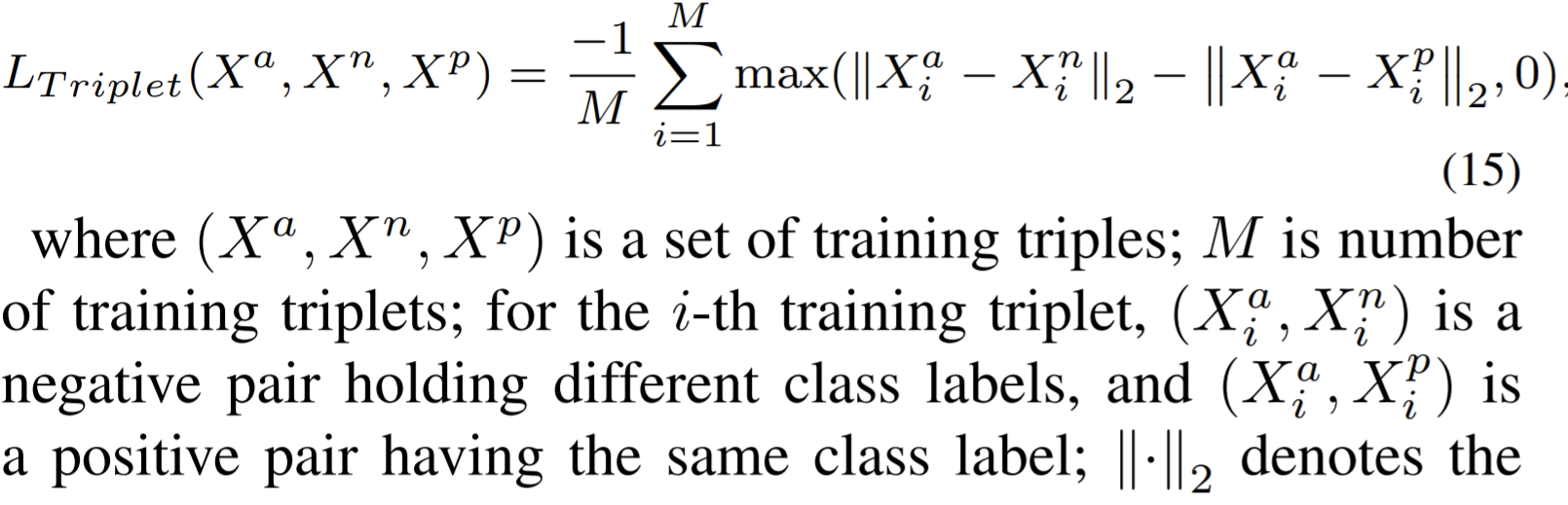

Loss function design

The proposed GiT's total loss function is

\(L_{total}=\alpha L_{CE}+\beta L_{Triplet}\), where \(L_{CE}\) denote cross-entropy loss, and \(L_T\) denotes triplet loss.

The \(L_{CE}\) formulates the cross-entropy of each patch's label

The triplet loss is

Paper 17: Graph-Time Convolutional Neural Networks

Codes: https://github.com/gtcnnpaper/DSLW-Code

Why

- The key for learning on multivariate temporal data is to embed spatiotemporal relations into into its inner-working mechanism.

- Spatiotemporal graph-base models

- Hybrid: combine learning algorithms developed separately for the graph domain and the temporal domain

- Such as a temporal RNN, CNN

- their spatial and temporal blocks are modular and can be implemented efficiently

- unclear how to best interleave these blocks for learning from spatiotemporal relationships

- Fused

- force the graph structure into conventional spatiotemporal solutions and provide a single strategy to jointly capture the spatiotemporal relationships.

- substitute the parameter matrices in these models with graph convolutional filters

- fused models capture naturally these relationships as they have graph-time dependent inner-working mechanisms.

- Hybrid: combine learning algorithms developed separately for the graph domain and the temporal domain

Goal

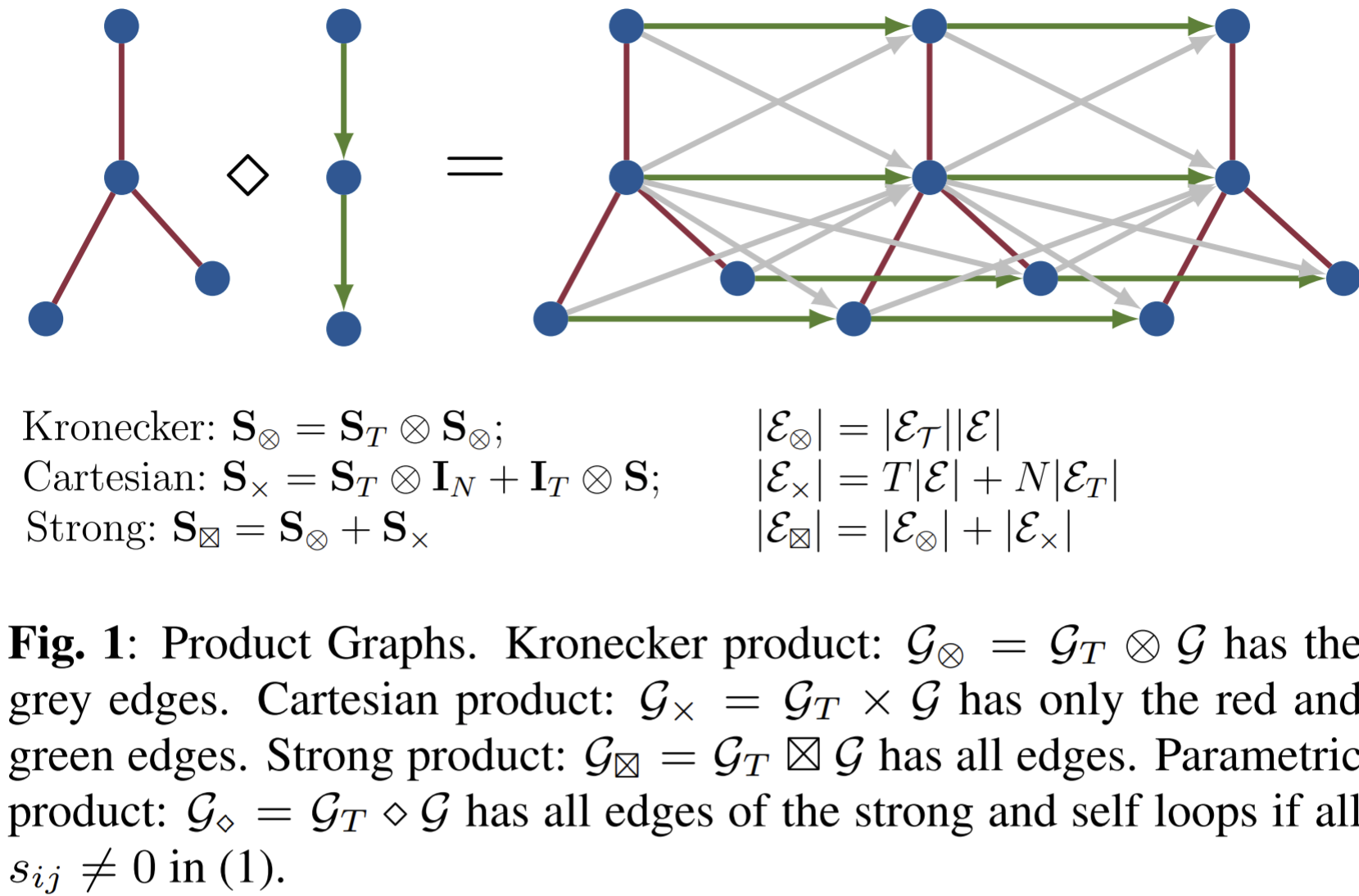

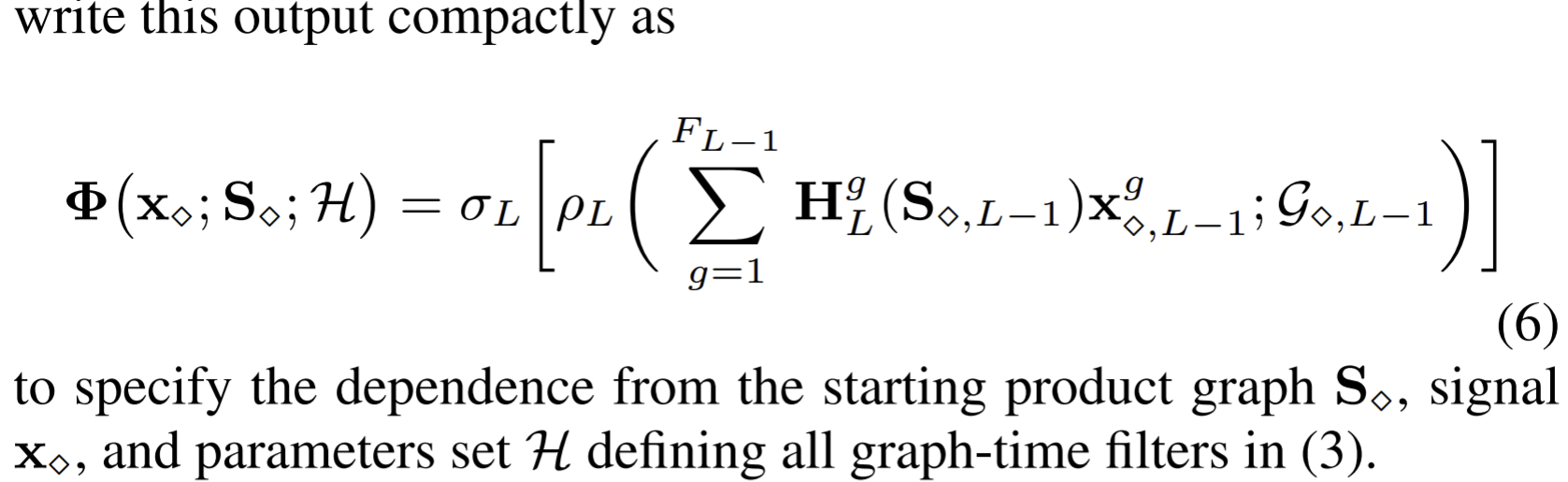

- Represent spatiotemporal relations through product graphs and develop a first principle graph-time convolutional neural network (GTCNN).

- For multivariate temporal data such as sensor or social networks

How

- Each layer consists of a graph-time convolutional module, a graphtime pooling module, and a nonlinearity.

- The product graph itself is parametric to learn the spatiotemporal coupling

- The zero-pad pooling preserves the spatial graph while reducing the number of active noes and parameters

Signals over product graphs

Two graphs:

- Spatial graph \(\mathcal{G}\), consider the original sensor network, each node has a time sequence

- Temporal graph \(\mathcal{G}_T\): for each node, there is a line graph that take each timestep as a node.

Given graphs \(\mathcal{G}\) and \(\mathcal{G}_T\) , we can capture the spatiotemporal relations in \(\mathrm{X}\) through the product graph \(\mathcal{G}_\diamond = \mathcal{G}_T\times \mathcal{G}=(\mathcal{V}_\diamond,\mathcal{E}_\diamond)\) , where the vertex set \(\mathcal{V}_\diamond = \mathcal{V}_T \times \mathcal{V}\) is the Kronecker product between \(\mathcal{V}_T\) and \(\mathcal{V}\).

Product graphs

- The Kronecher product preserves the relations between different nodes along temporal dimension. The 1st node at the 1st timestep have influences for the 2nd node at the 2nd timestep. And the influence is bidirectional.

- The cartesian product preserves the original spatial infromation and the temporal evolution on each node's temporal dimension.

Goal: to learn spatiotemporal representations in a form akin to temporal or graph CNNs.

Graph-time CNNs

- A compositional architecture of \(L\) layers each having a graph-time convolutional module, a graph-time pooling module and a nonlinearity.

- at convolutions allow for effective parameter sharing, inductive learning, and efficient implementation, while zero-pad pooling and pointwise nonlinearities make the architecture independent from graph-reduction techniques or other modules.

- Graph-time convolutional filtering

- The graph-time convolutional filter aggregates at the space-time location \((i, t)\) information from space-time neighbors that are up to \(K\) hops away over the product graph \(\mathcal{G}_\diamond\).

- Implement it recursively, and expand all polynomials of order \(k\). Then the computational cost is liner in the product graph dimensions.

- Graph-time pooling: The pooling approach has three steps: i) summarization; ii) slicing; iii) downsampling

- Summarization: up to \(\alpha_l\) hops away for each node . Use mean or max function.

- Summarization is an implicit low-pass operation and the type of product graph has an impact on its severit

- Slicing: reduces the dimensionality across the temporal dimension.

- Downsampling: reduces the number of active nodes across the spatial dimension from \(N_{l-1}\) to \(N_l\) without modifying the underlying spatial graph.

- Summarization: up to \(\alpha_l\) hops away for each node . Use mean or max function.

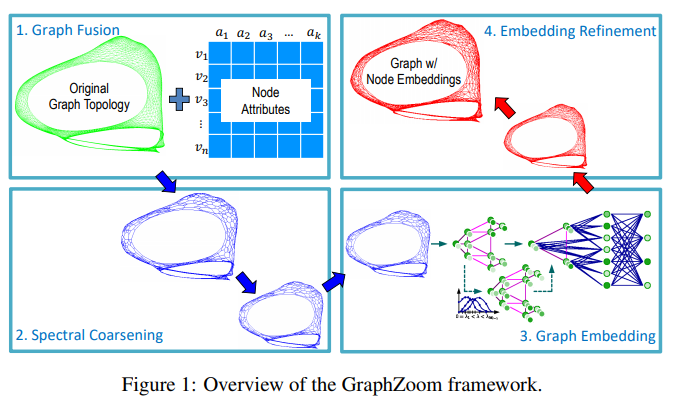

Paper 18: Graphzoom: A multi-level spectral approach for accurate and scalable graph embedding

Codes: https://github.com/cornell-zhang/GraphZoom

Why

- Challenges

- existing graph embedding models either fail to incorporate node attribute information during training or suffer from node attribute noise, which compromises the accuracy

- few of them scale to large graphs due to their high computational complexity and memory usage

- Graph embedding techniques

- Random-walk-based embedding algorithms

- embed a graph based on its topology without incorporating node attribute information==> limits the embedding power

- GCN with the basic notion that node embeddings should be smoothed over the entire graph and so can leverage both topology and node attribute information.==> But may suffer from high-frenquency noise in the inital node features.

- Random-walk-based embedding algorithms

- Only one of the solution for increasing the accuracy or improving the scalability of graph embedding methods is well-handeled. Not all of them

- Previous work

- Multi-level graph embedding: GraphZoom is motivated by theoretical results in spectral graph embedding.

- Graph filtering: GCN model implicitly exploits graph filter to remove high-frequency noise from the node feature matrix. In GraphZoom we adopt graph filter to properly smooth the intermediate embedding results during the iterative refinement step.

Goal

- Propose GraphZoom, a multi-level framework for improving both accuracy and scalability of unsupervised graph embedding algorithms.

How

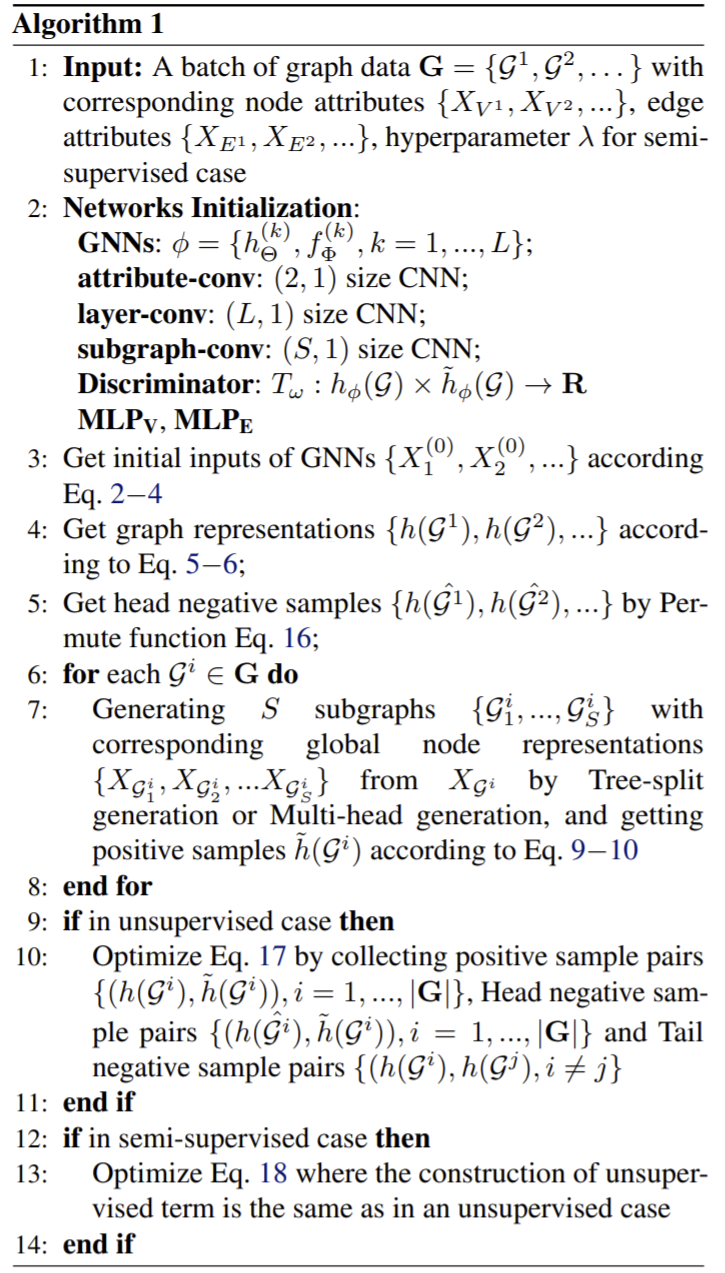

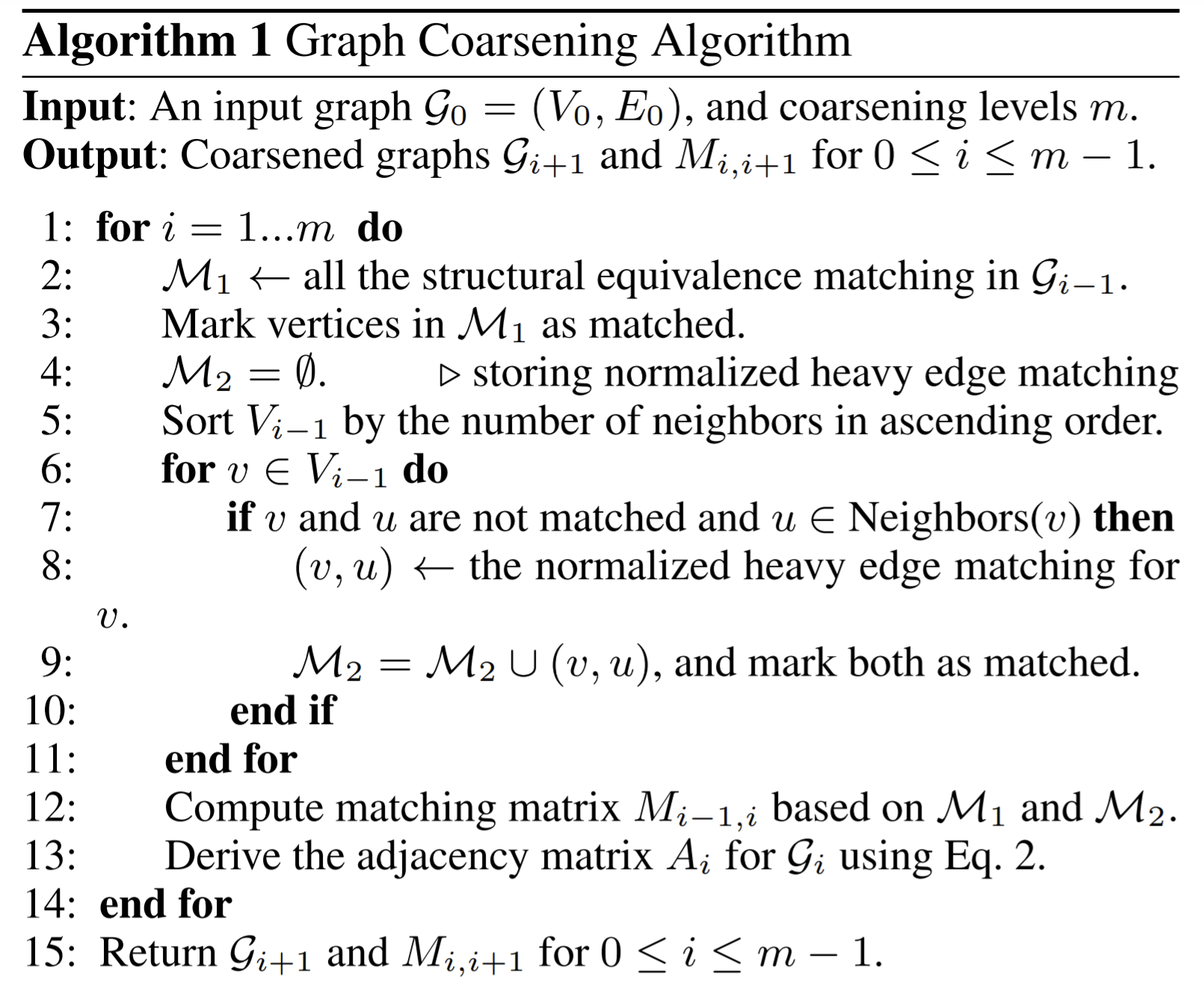

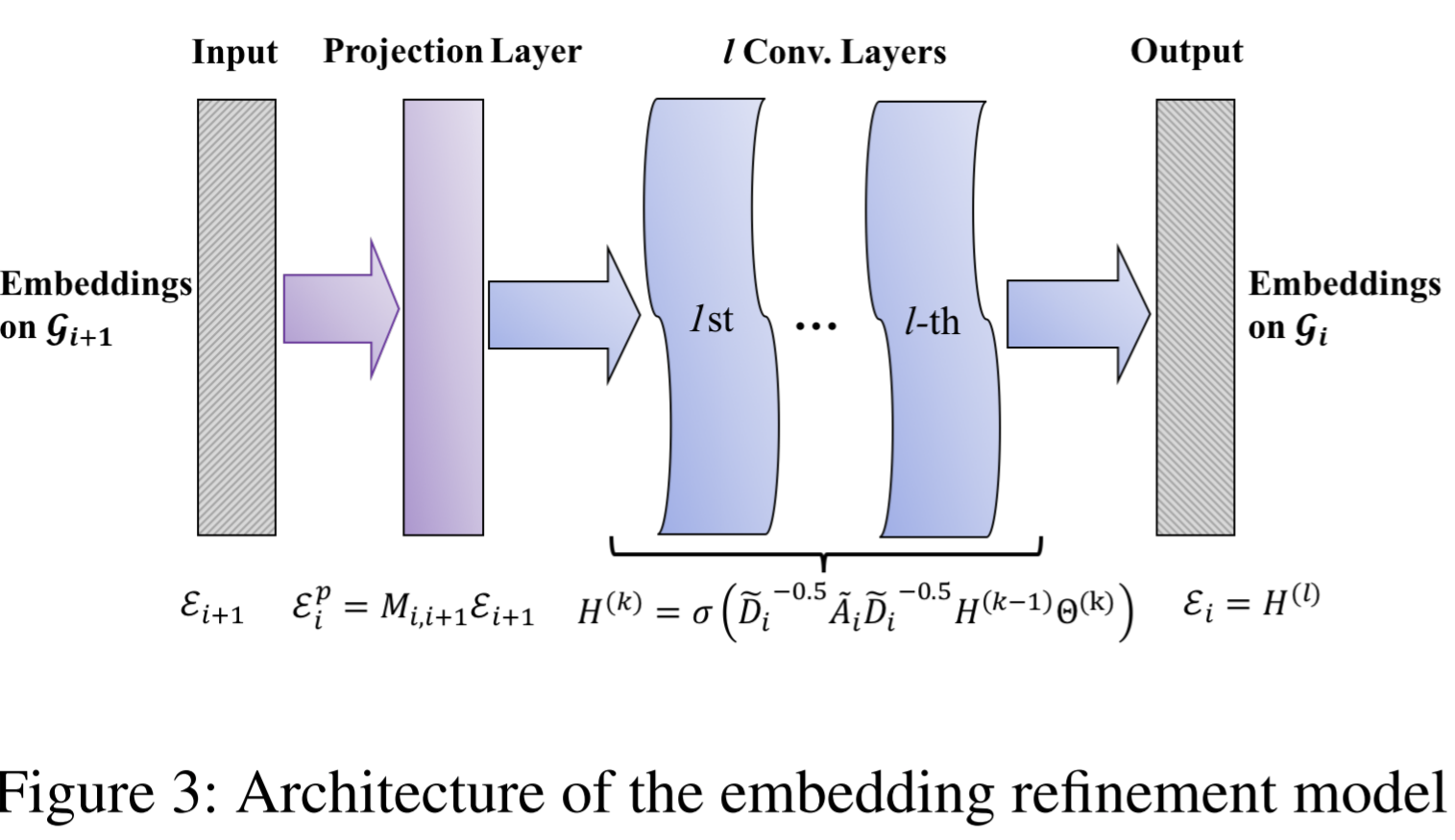

- GraphZoom consists of four major kernels: (1) graph fusion, (2) spectral graph coarsening, (3) graph embedding, and (4) embedding refinement.

- The graph fusion kernel first converts the node feature matrix into a feature graph and then fuses it with the original topology graph.

- Spectral graph coarsening produces a series of successively coarsened graphs by merging nodes based on their spectral similarities.

- During the graph embedding step, any of the existing unsupervised graph embedding techniques can be applied to obtain node embeddings for the graph at the coarsest level.

- Embedding refinement is then employed to refine the embeddings back to the original graph by applying a proper graph filter to ensure embeddings are smoothed over the graph.

Graph Fusion

- To construct a weighted graph that has the same number of nodes as the original graph but potentially different set of edges (weights) that encapsulate the original graph topology as well as node attribute information

- Firstly generate a KNN graph based on the \(\ell^2-norm\) distance between the attribute vectors of each node pair so to convert initial attribute matrix \(X\) into a weighted node attribute graph.

- To implement in a linear cost, they start with coarsening the original graph \(\mathcal{G}\) to obtain a substantially reduced graph that has much fewer nodes with an \(\mathcal{O}(|\mathcal{E}|)\) , similar as to spectral graph clustering to group nodes into clusters of high conductance. After the attribute graph is formed, we assign a weight to each edge based on the cosine similarity between the attribute vectors of the two incident nodes. Finally, we can construct the fused graph by combining the topology graph and the attribute graph using a weighted sum: \(\mathrm{A}_{fusion}=\mathrm{A}_{topo}+\beta \mathrm{A}_{feat}\).

Spectral coarsening

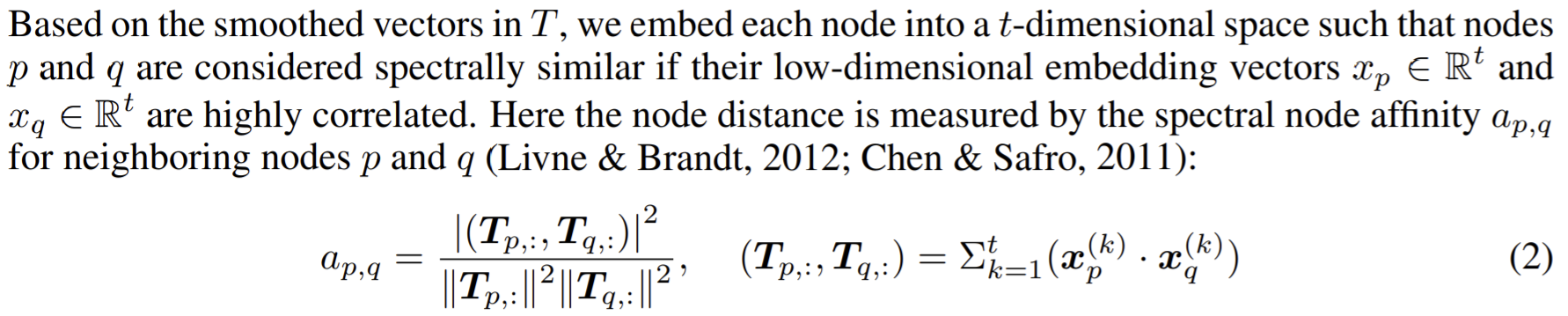

- For graph coarsening via global spectral embedding: calculating eigenvectors of the original gragh Laplacian is very costly. To eliminate the cost, they propose a method for graph coarsening via local spectral embedding.

- The analogies between the traditional signal processing (Fourier analysis) and graph signal processing.

- The signals at different time points in classical Fourier analysis correspond to the signals at different nodes in an undirected graph;

- The more slowly oscillating functions in time domain correspond to the graph Laplacian eigenvectors associated with lower eigenvalues or the more slowly varying (smoother) components across the graph.

- apply the simple smoothing (low-pass graph filtering) function to \(k\) random vectors to obtain smoothed vectors for \(k\)-dimensional graph embedding, which can be achieved in linear time.

- The analogies between the traditional signal processing (Fourier analysis) and graph signal processing.

- We adopt low-pass graph filters to quickly filter out the high-frequency components of the random graph signal or the eigenvectors corresponding to high eigenvalues of the graph Laplacian, and then get the smoothed vectors in \(T\) (initial random vectors).

- The aggregation scheme:

- Once the aggregation scheme is defined, the coarsening in multilevel is formed as a set of graph mapping matrices.

Graph embedding

Just obtain embedding according to the previously defined formulas.

Embedding refinement

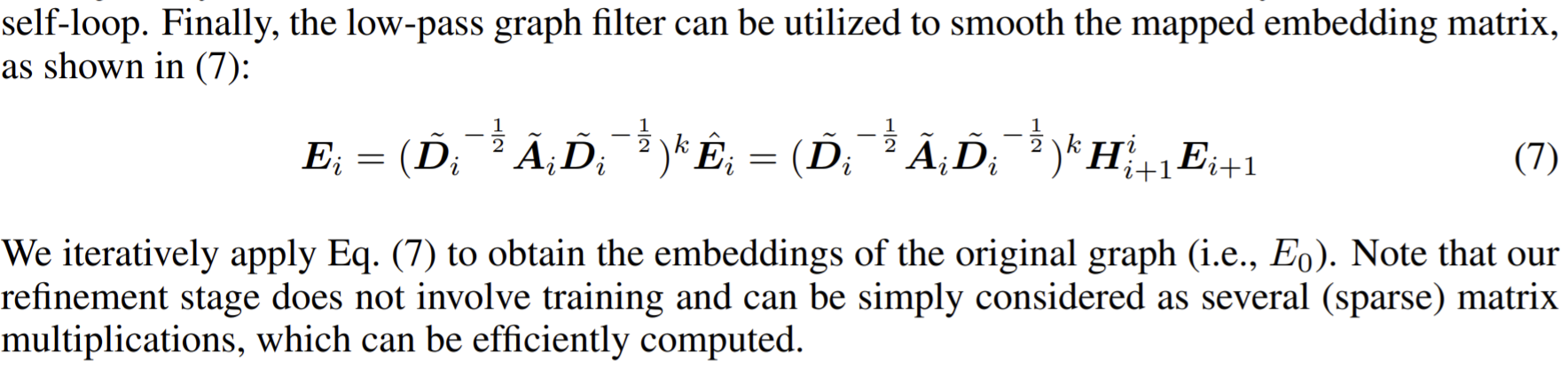

- To get the embedding vectors for the original graph eventually. It's kind of like remapping the graph from the coarsest level to the finest level.

- The refinement process is motivated by Tikhonov regularization to smooth the node embedding over the graph by minimizing \(\min\limits_{E_i}\{\|E_i-\hat{E}_i\|^2_2+tr(E_i^\top L_iE_i)\}\), where \(L_i\) and \(E_i\) are the normalized Laplacian matrix and mapped embedding matrix of the graph at the \(i\)-th coarsening level, respectively. The solving the equation, the refined embedding matrix of edges are solved.

- To solve the equation efficiently , they work in spectral domain, and approximate the graph filter by it first-order Taylor expansion.

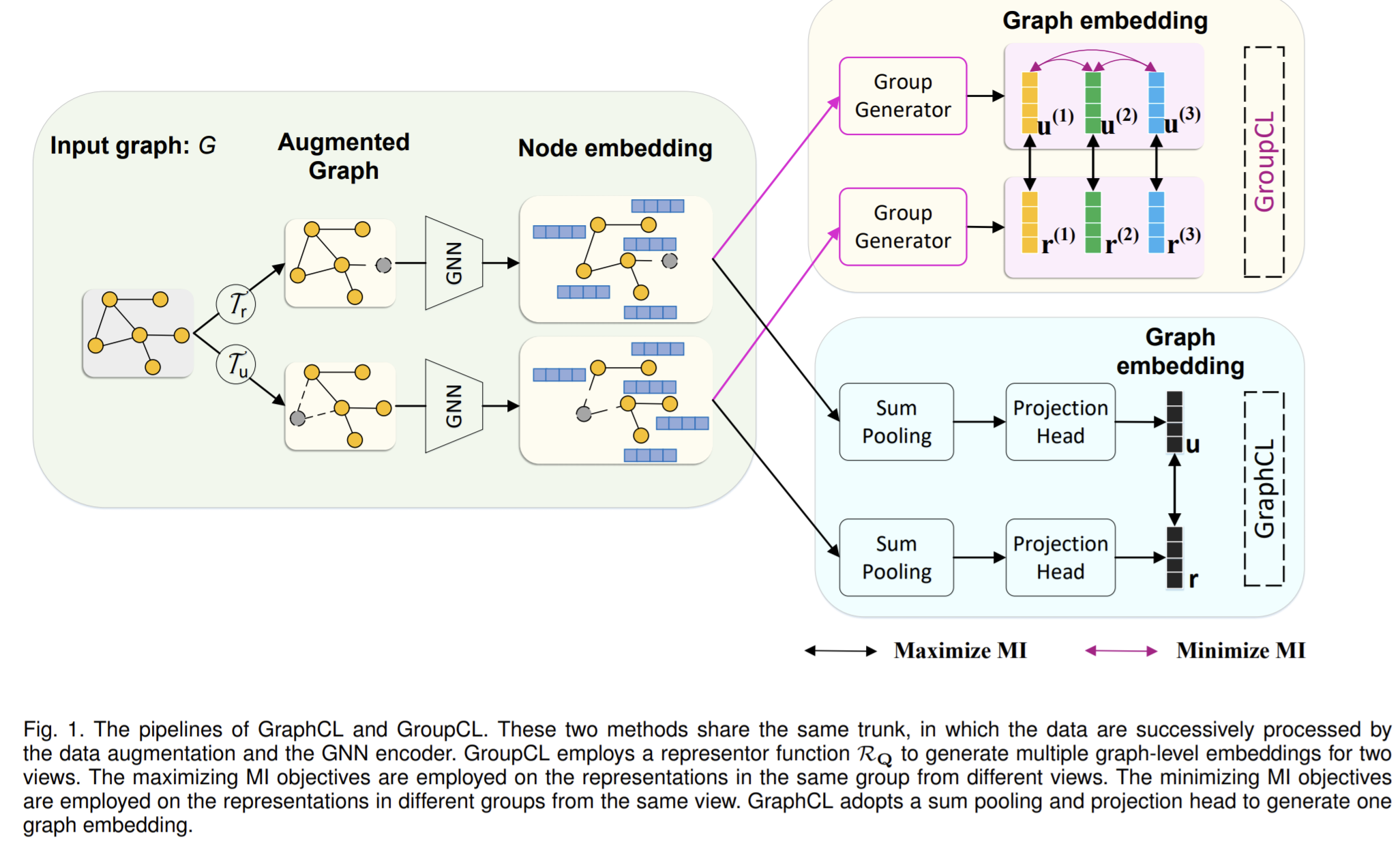

Paper 19: Group Contrastive Self-Supervised Learning on Graphs

Why

- Capability : Contrasting graphs in multiple subspaces enables graph encoders to capture more abundant characteristics.

- CL methods train models on pretext tasks that encode the agreement between two views of representations. These two views can be global-local pairs or differently transformed graph data. The learning goal is to make these two-view representations similar if they are from the same graph and dissimilar if they are from different graphs.

- The idea of using groups has been shown to be effective in the image domain.

Goal

- Study SSL on graphs by contrastive learning.

- Propose a group contrastive learning framework. Embed the given graph into multiple subspaces, of which each representation is prompted to encode specific characteristics of graphs.

- Further develop an attention-based representor function to compute representations. Develop principled objectives that enable us to capture the relations among both intra-space and inter-space representations in groups.

How

- refer to a group as a set of representations of different graph views within the same subspace

- propose to maximize the MI between two views of representations in the same group while minimizing the MI between the representations of one view across different groups.

- a graph-level encoder usually consists of a node encoder which computes the node embedding matrix and a readout function summarize the mdoe embeddigs into the desired graph-level embedding.

The proposed group contrastive learning framework

- To build two views and their corresponding multiple graph-level representations. The used GNN encoder can be the same, or one can just duplicate the calculated representation for \(p\) times. Then for view \(u,r\), they can be combined into \(p\) pairs which are cross-view pairs and two groups that each group from one specific view.

- So for two views: the main view \(u\), and auxiliary view \(r\), there are in total two encoders which are parameterized by \(\theta, \phi\) respectively.

Intra-space objective function

- For the intra-space objective, they seek to maximize the mutual information between representations of two views within each group. The optimization is done based on paramters \(\theta,\phi\).

- To implement, maximizes the MI's lower-bound. Precisely, they adopt the Jensen-Shannon estimator of MI, and the disciriminator is the dot product between two representations.

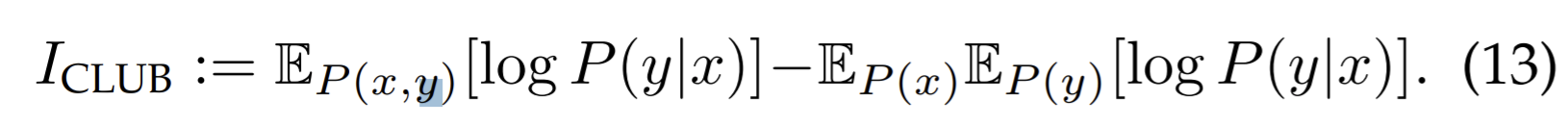

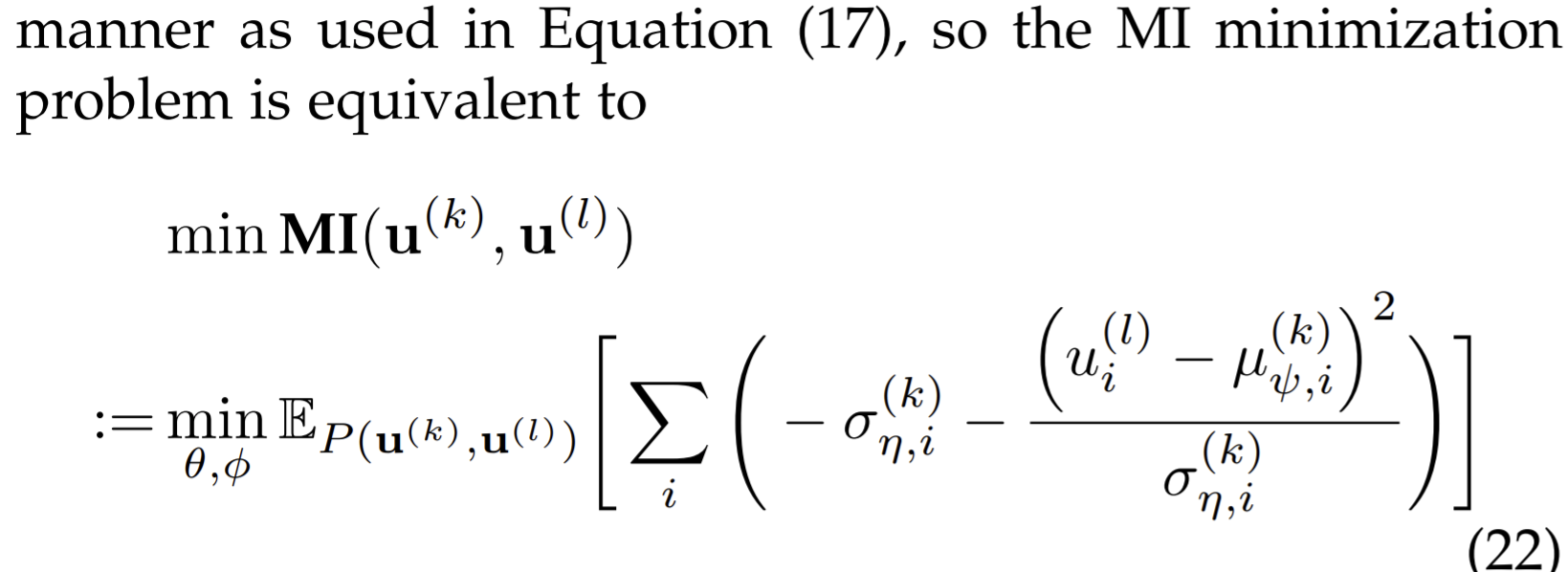

Inter-space objective function

- Constraint the pairwise relation across different groups of the same view to enforce the diversity of inter-space. They employ an inter-space optimization objective based on mutual information minimization.

- To minimize the MI, we introduce an upper bound of MI as an efficient estimation, based on the contrastive log-ratio upper bound:

. To solve the key challenge of modeling \(P(y|x)\), there are two approaches based on whether the same dimensions of \(x\) and \(y\) correspond to each other across different representations. Suppose the distribution \(y\) conditional on \(x\) is subject to a Gaussian distribution.

. To solve the key challenge of modeling \(P(y|x)\), there are two approaches based on whether the same dimensions of \(x\) and \(y\) correspond to each other across different representations. Suppose the distribution \(y\) conditional on \(x\) is subject to a Gaussian distribution.

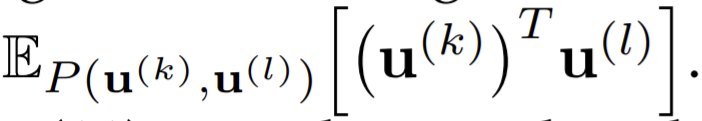

- Non-parameterized estimation

- Assume \(\mathbb{E}[y|x]=x\), and the variance \(\Sigma\) is a diagonal matrix with the same values on its diagonal. Then after deduction, the goal minimizing CLUB is equivalent to minimize

- This goal enlarges the agreement between \(\mathrm{u}^{(k)},\mathrm{u}^{(l)}\), under the joint distribution so to keep the diversity among one group.

- Assume \(\mathbb{E}[y|x]=x\), and the variance \(\Sigma\) is a diagonal matrix with the same values on its diagonal. Then after deduction, the goal minimizing CLUB is equivalent to minimize

- Parameterized estimation

- When there is no correspondence between dimensions of \(x,y\).

- Via a parameterized variational distribution. Concretely, they use two independent MLP to generate the mean and variance respectively.

- Non-parameterized estimation

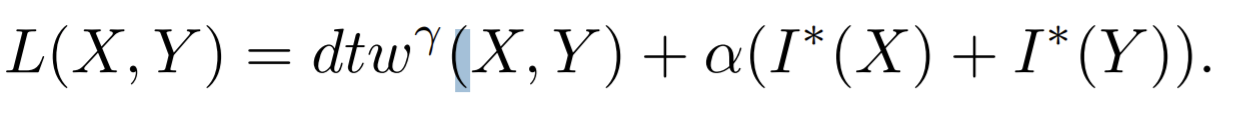

The overall objective function

- Combine the intra-space and the inter-space objectives together.

- Either optimize based on non-parameterized way or parameterized way.

GroupCL: GraphCL with Group Contrast

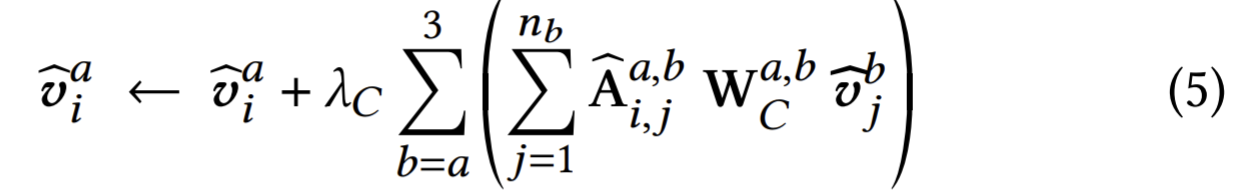

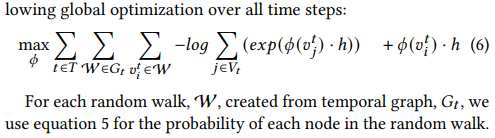

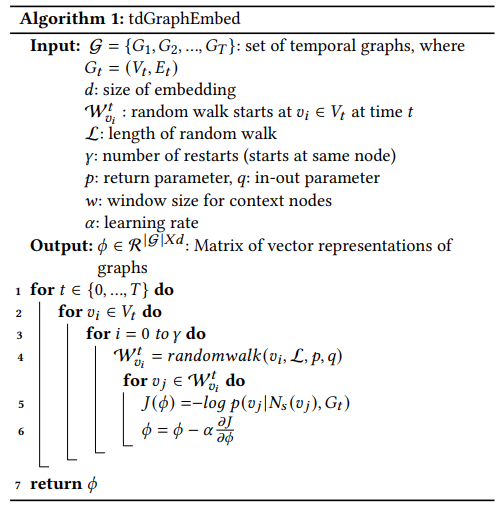

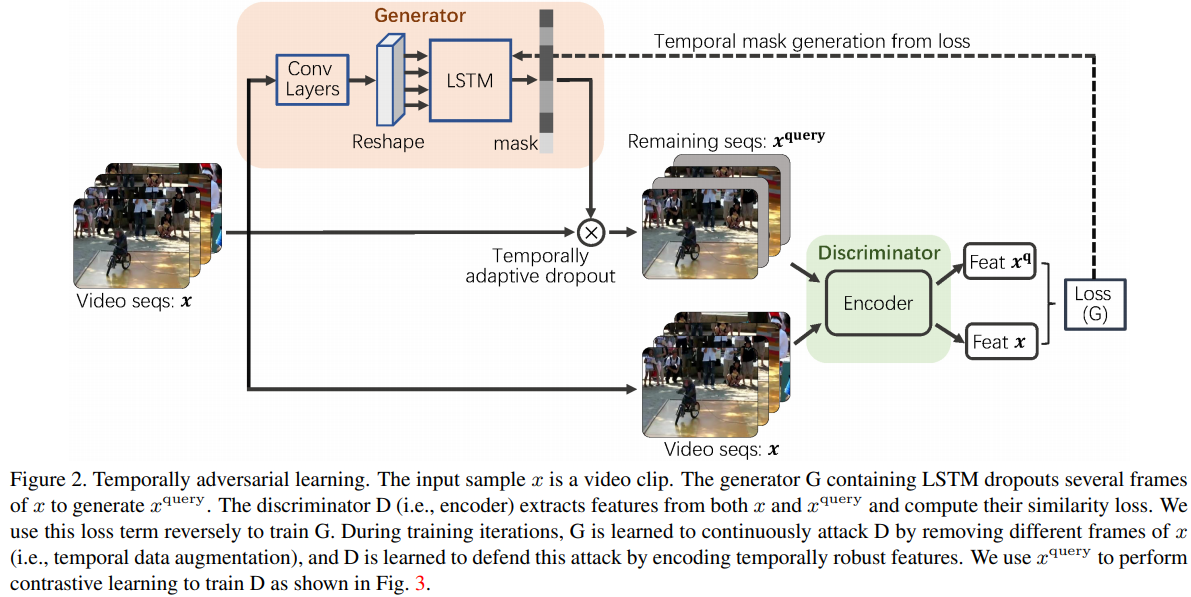

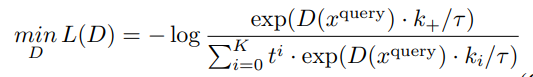

- the generation of multiple representations shares the same node encoder and envolves a parameterized representor function that computes multiple graph representations from node embeddings of a given graph.