Paper 1: Vincent et al (2008) Stacked Denoising Autoencoders: Learning Useful Representations in a Deep Network with a Local Denoising Criterion. JMLR 2008

Analysis of SSL methods

- Paper 2: Kolesnikov, Zhai and Beyer (2019) Revisiting Self-Supervised Visual Representation Learning. CVPR 2019

- Paper 3: Zhai et al (2019) A Large-scale Study of Representation Learning with the Visual Task Adaptation Benchmark (A GLUE-like benchmark for images) ArXiv 2019

- Paper 4: Asano et al (2019) A critical analysis of self-supervision, or what we can learn from a single image ICLR 2020

Contrastive methods

Paper 5: van den Oord et al. (2018) Representation Learning with Contrastive Predictive Coding (CPC), ArXiv 2018

Paper 6: Hjelm et al. (2019) Learning deep representations by mutual information estimation and maximization (DIM) ICLR 2019

Paper 7: Tian et al. (2019) Contrastive Multiview Coding (CMC) ArXiv 2019

Paper 8: Hénaff et al. (2019) Data-Efficient Image Recognition with Contrastive Predictive Coding (CPC v2: Improved CPC evaluated on limited labelled data) ArXiv 2019

Paper 9: He et al (2020) Momentum Contrast for Unsupervised Visual Representation Learning (MoCo, see also MoCo v2). CVPR 2020

Paper 10: Chen T et al (2020) A Simple Framework for Contrastive Learning of Visual Representations (SimCLR). ICML 2020

Paper 11: Chen T et al (2020) Big Self-Supervised Models are Strong Semi-Supervised Learners (SimCLRv2) ArXiv 2020

Paper 12: Caron et al (2020) Unsupervised Learning of Visual Features by Contrasting Cluster Assignments (SwAV) ArXiv 2020

Paper 13: Xiao et al (2020) What Should Not Be Contrastive in Contrastive Learning ArXiv 2020

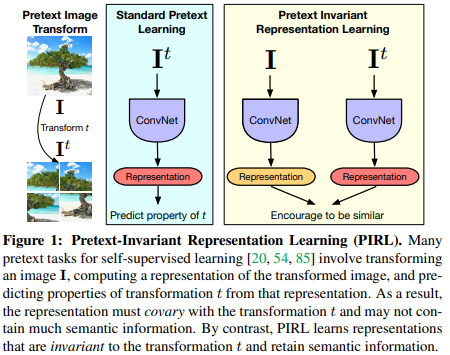

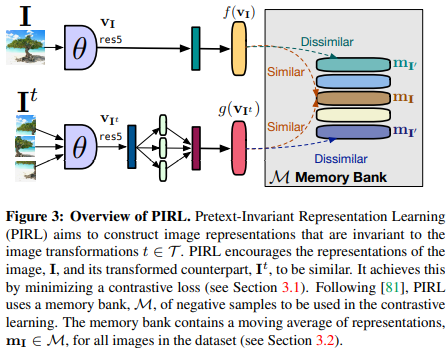

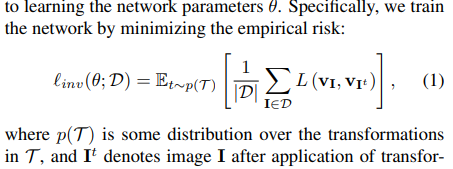

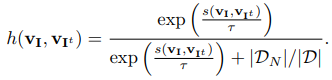

Paper 14: Misra and van der Maaten (2020) Self-Supervised Learning of Pretext-Invariant Representations. CVPR 2020

Generative methods

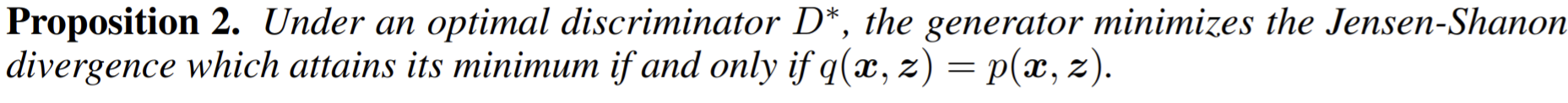

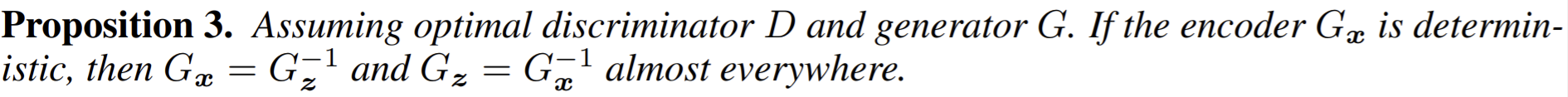

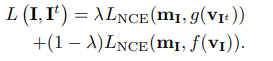

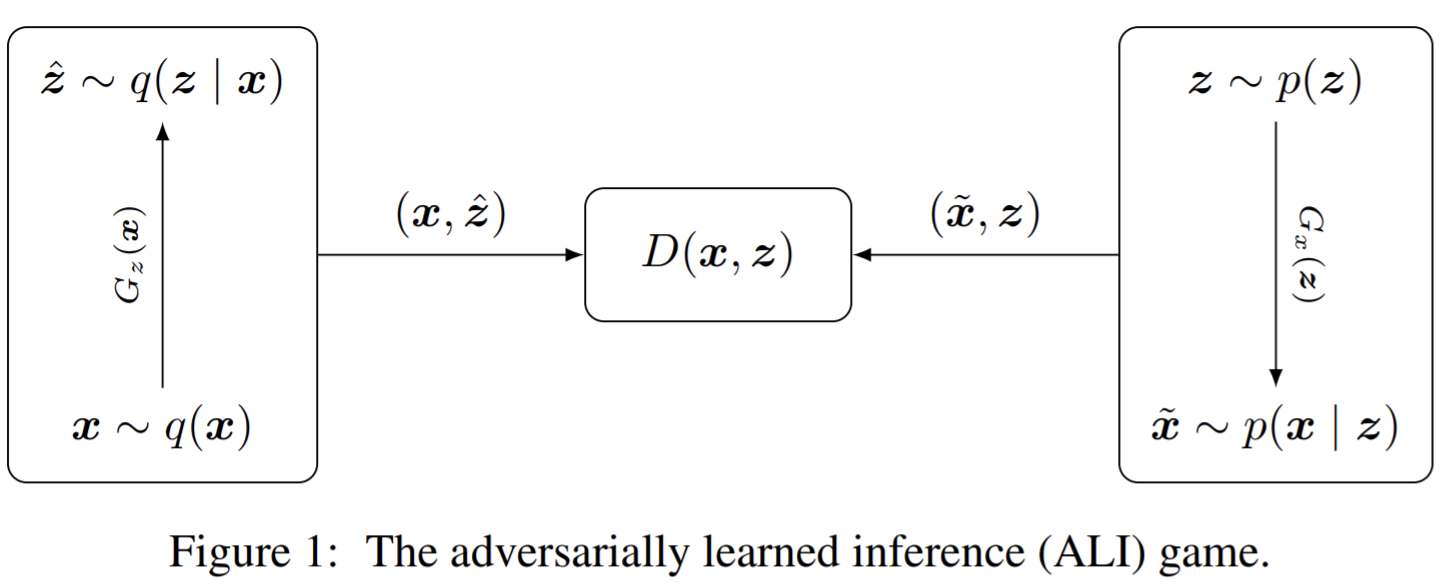

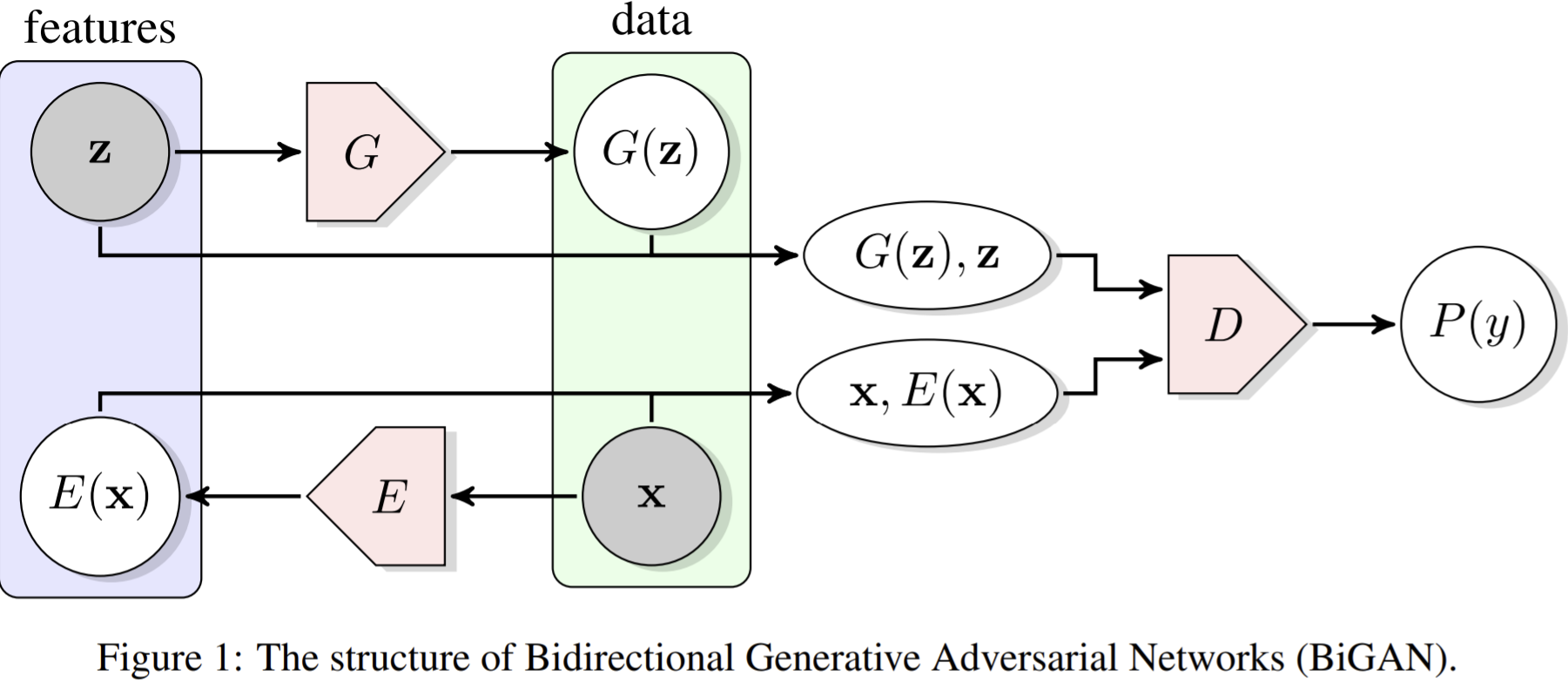

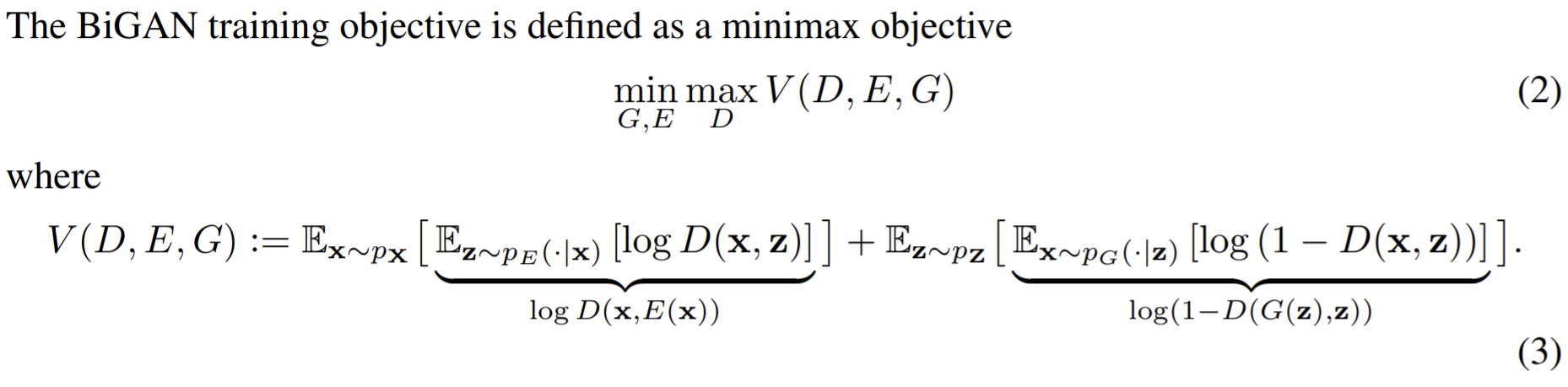

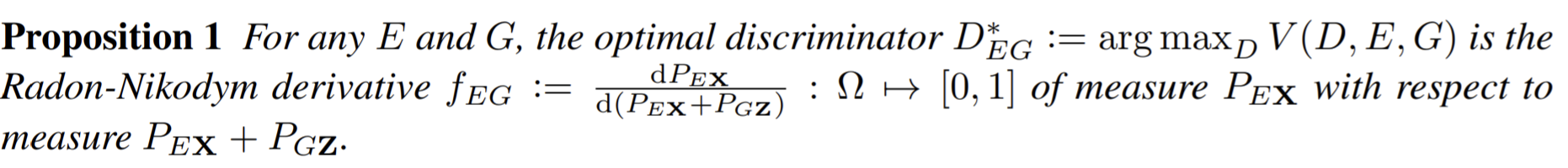

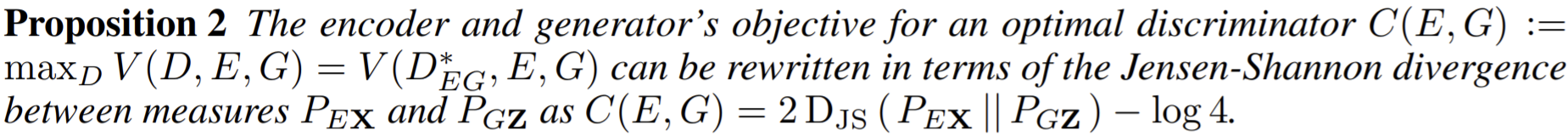

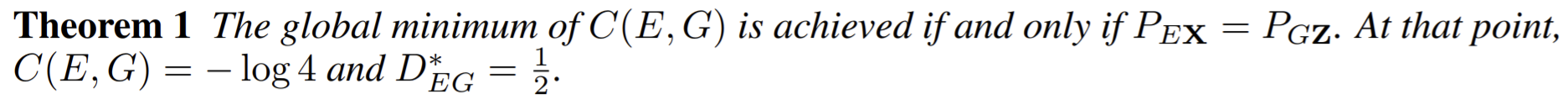

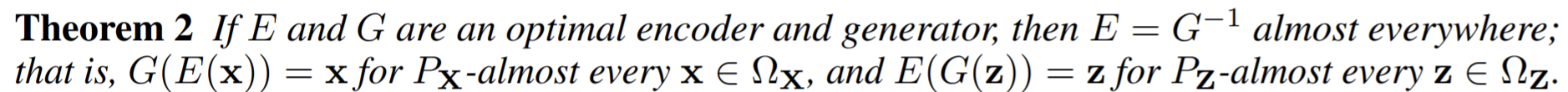

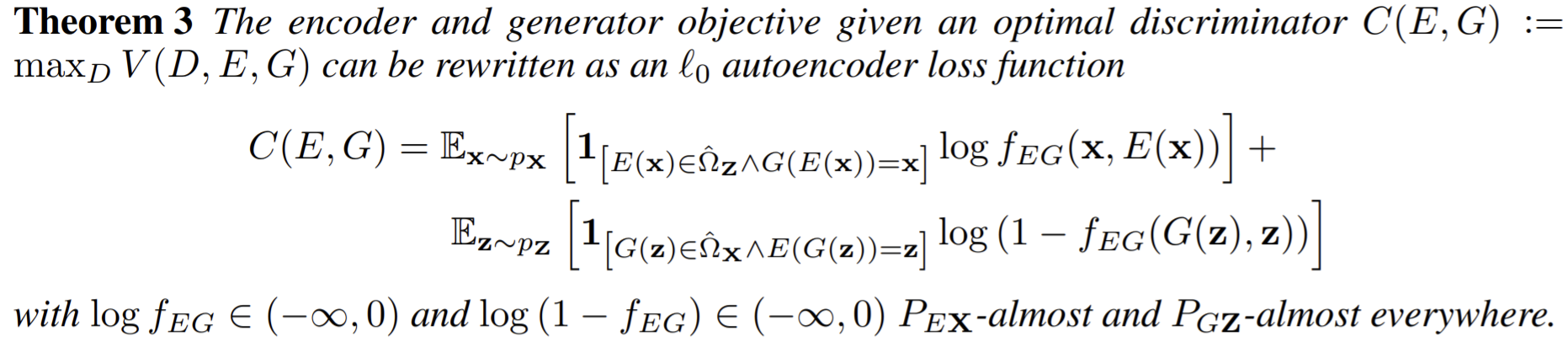

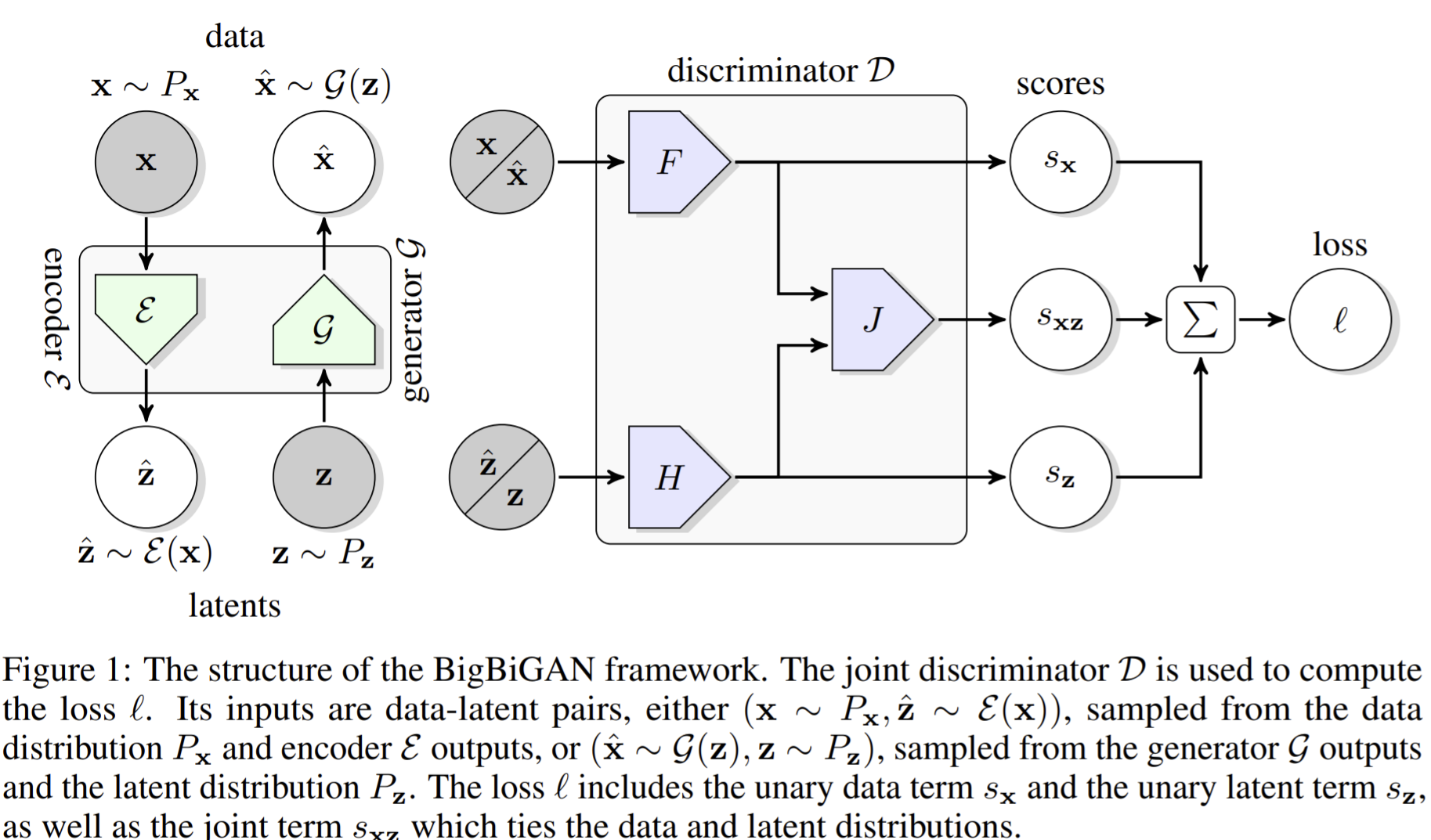

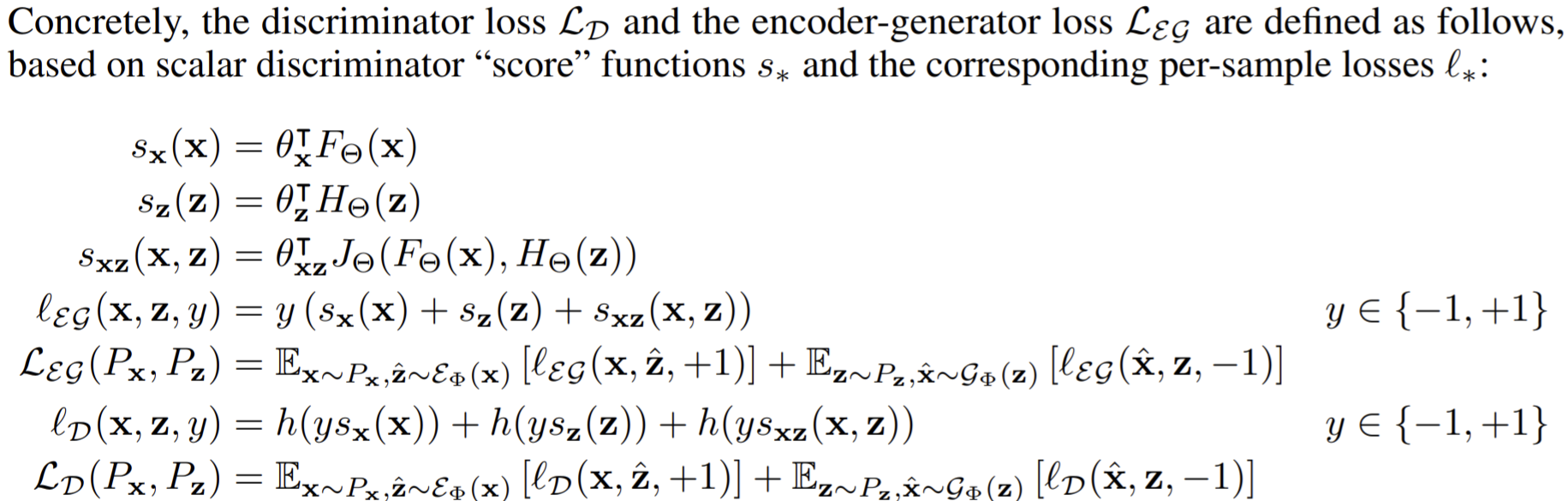

- Paper 15: Dumoulin et al (2017) Adversarially Learned Inference (ALI) ICLR 2017

- Paper 16: Donahue, Krähenbühl and Darrell Adversarial Feature Learning (BiGAN, concurrent and similar to ALI) ICLR 2017

- Paper 17: Donahue and Simonyan (2019) Large Scale Adversarial Representation Learning (Big BiGAN) ArXiv 2019

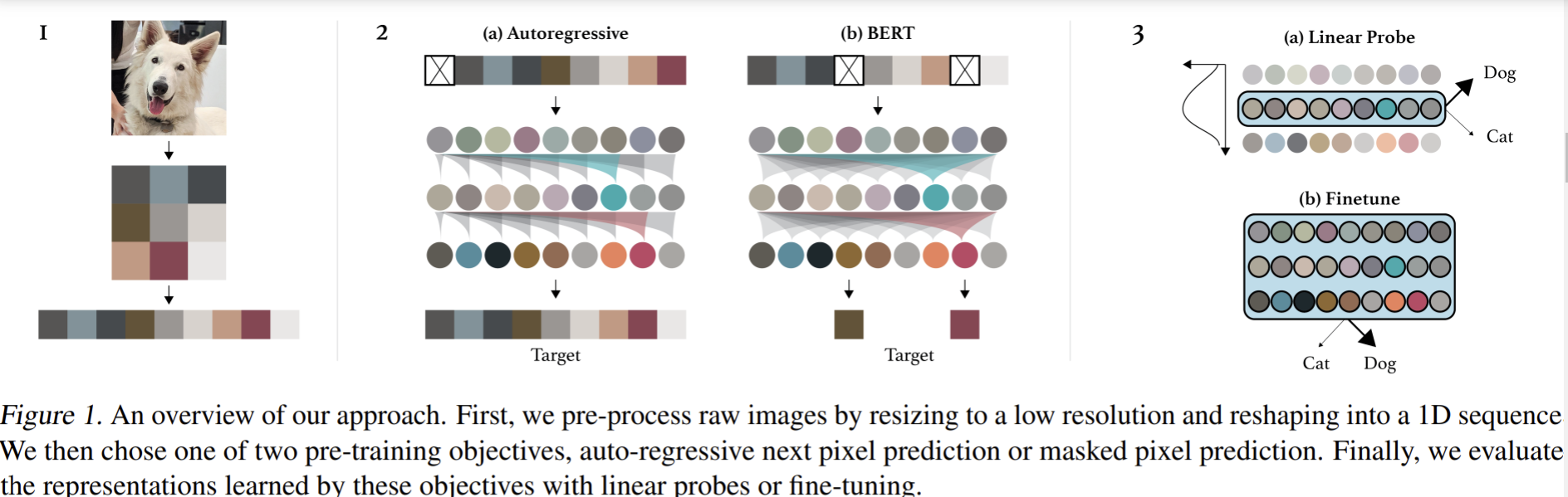

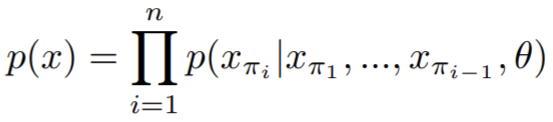

- Paper 18: Chen et al (2020) Generative Pretraining from Pixels (iGPT) ICML 2020

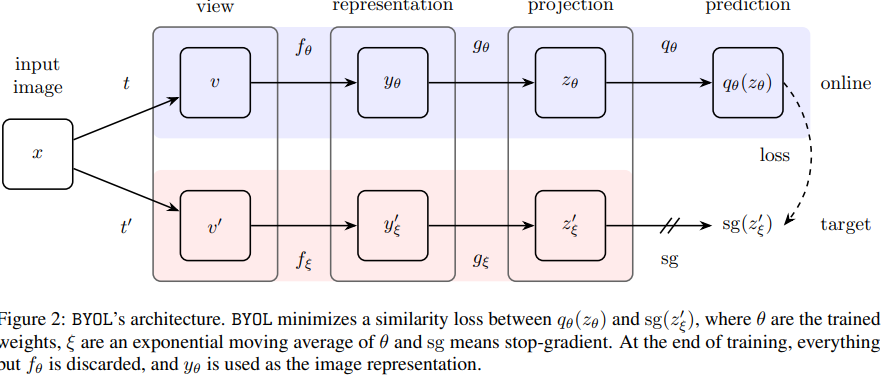

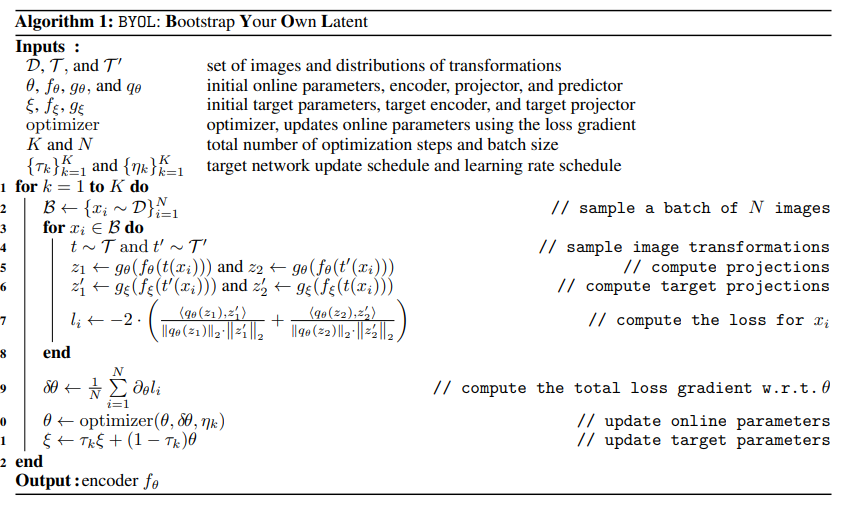

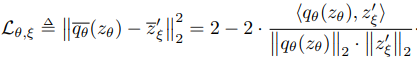

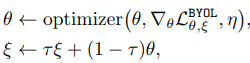

BYoL: boostrap your own latents

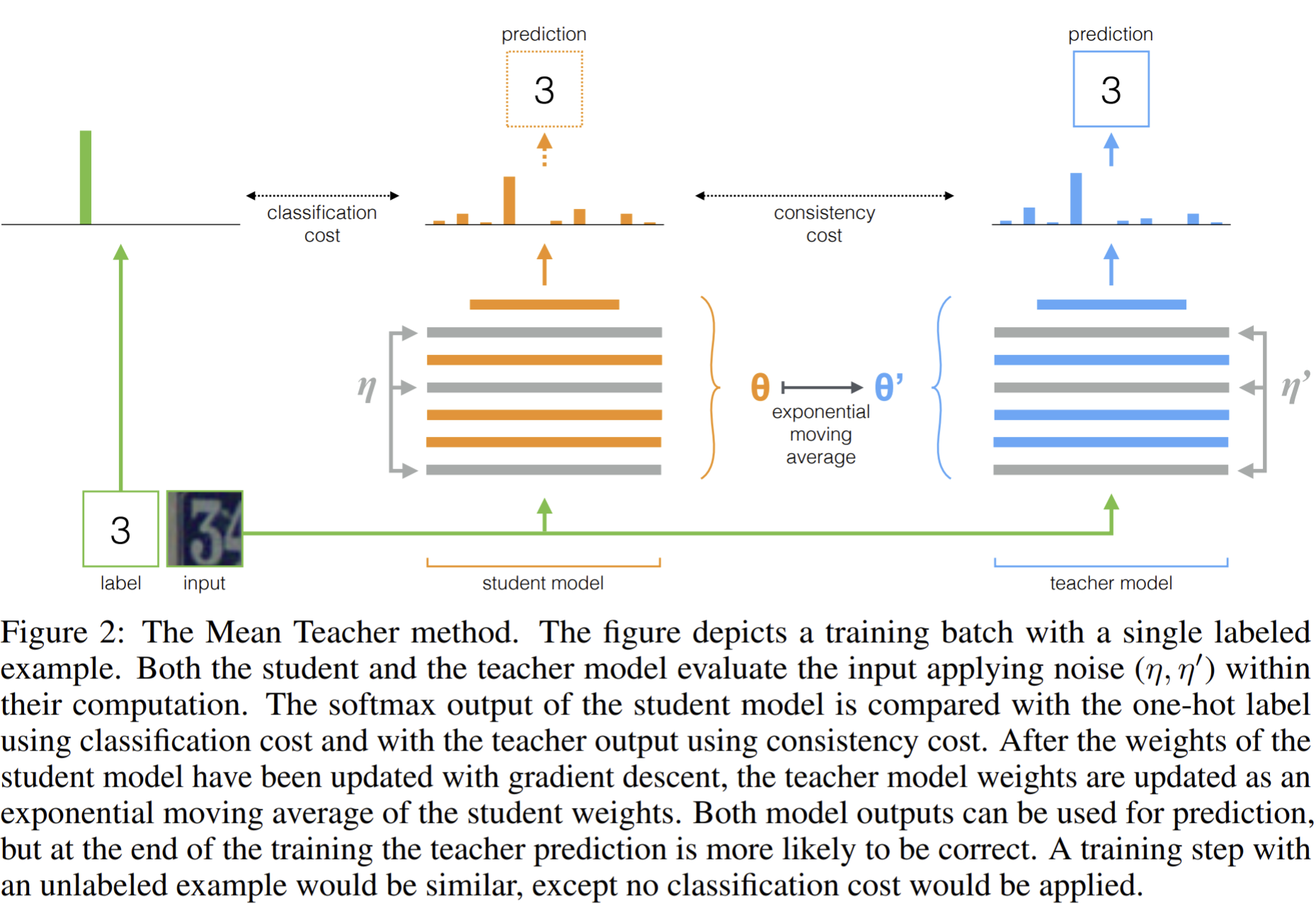

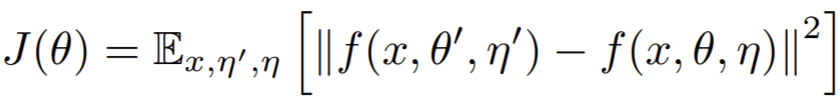

- Paper 19: Tarvainen and Valpola (2017) Mean teachers are better role models: Weight-averaged consistency targets improve semi-supervised deep learning results. NeurIPS 2017

- Paper 20: Grill et al (2020) Bootstrap Your Own Latent: A New Approach to Self-Supervised Learning (BYoL). ArXiv 2020

- Paper 21: Abe Fetterman, Josh Albrecht, (2020) Understanding self-supervised and contrastive learning with "Bootstrap Your Own Latent" (BYOL) Blog post

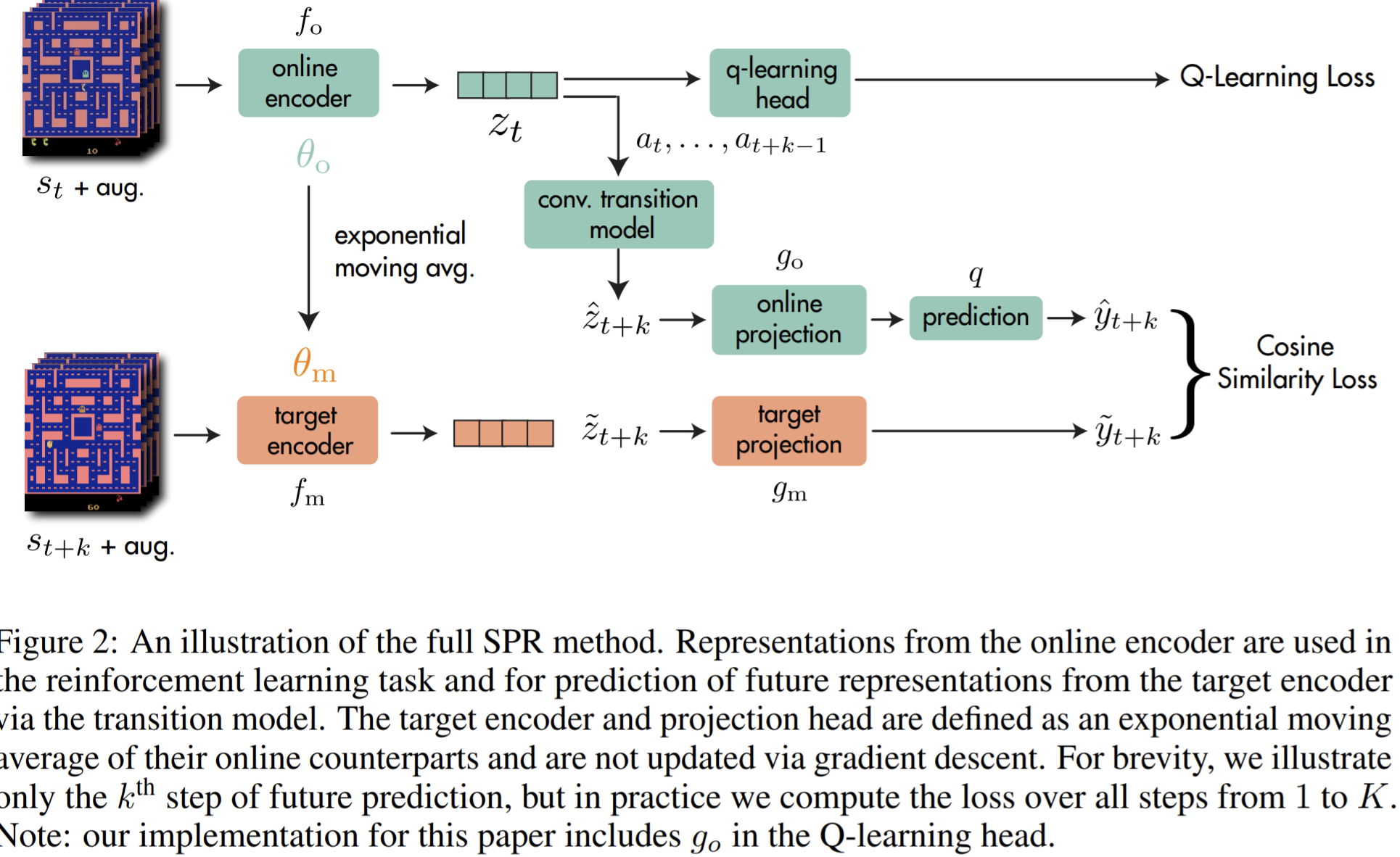

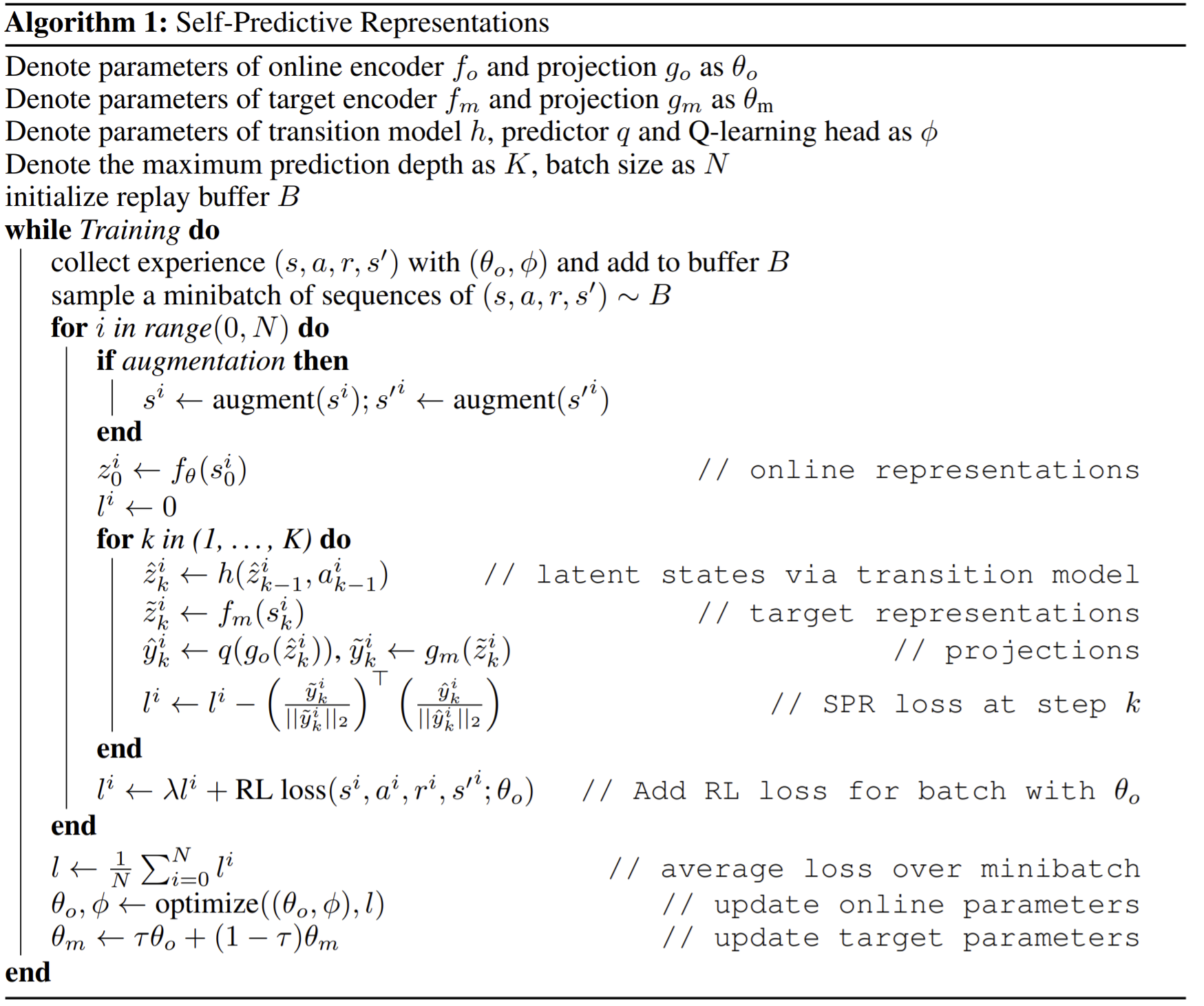

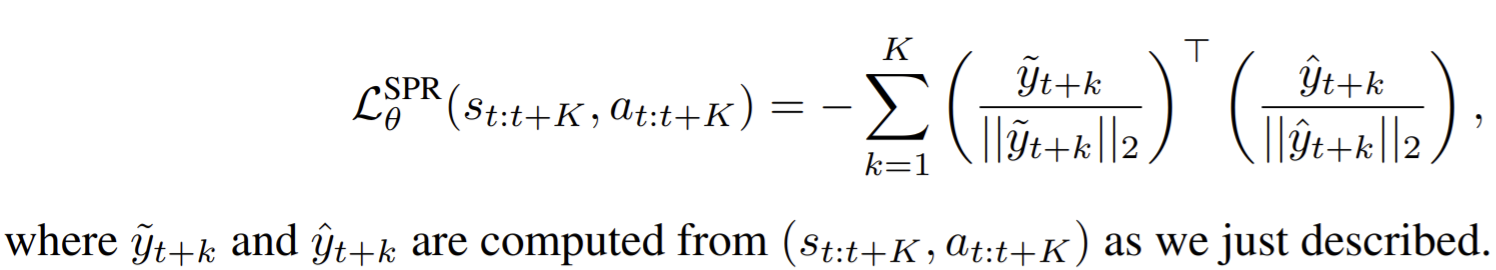

- Paper 22: Schwarzer and Anand et al. (2020) Data-Efficient Reinforcement Learning with Momentum Predictive Representations. ArXiv 2020

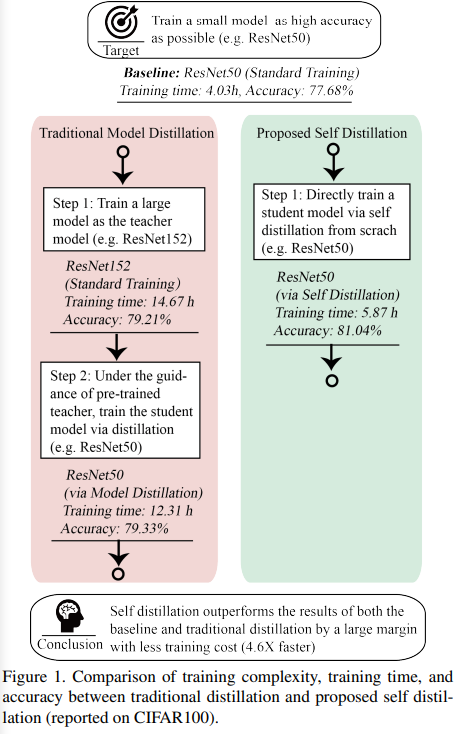

self-distillation methods

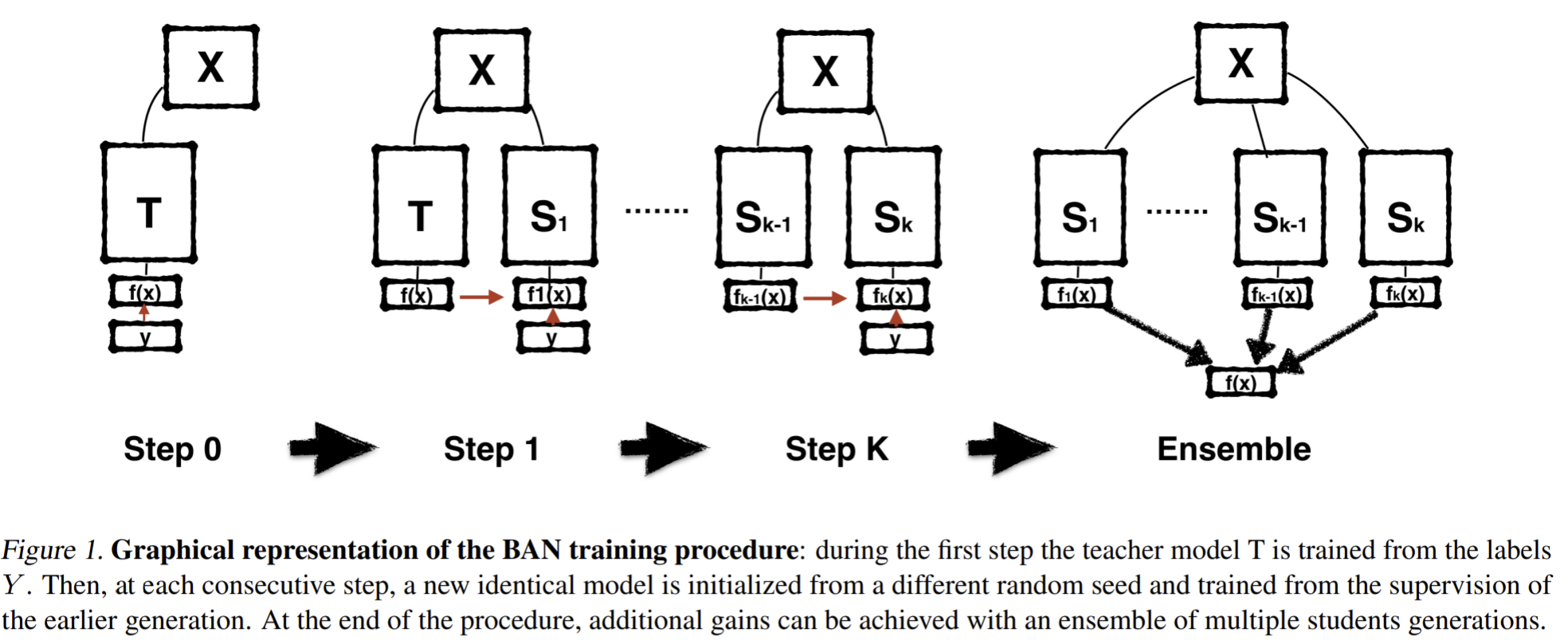

- Paper 23: Furlanello et al (2017) Born Again Neural Networks. NeurIPS 2017

- Paper 24: Yang et al. (2019) Training Deep Neural Networks in Generations: A More Tolerant Teacher Educates Better Students. AAAI 2019

- Paper 25: Ahn et al (2019) Variational information distillation for knowledge transfer. CVPR 2019

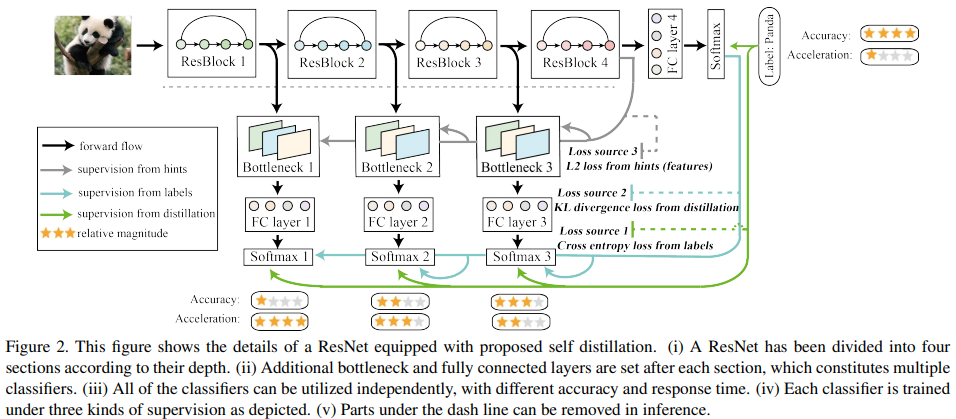

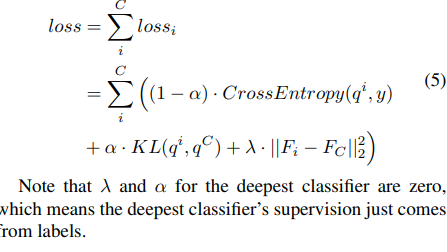

- Paper 26: Zhang et al (2019) Be Your Own Teacher: Improve the Performance of Convolutional Neural Networks via Self Distillation ICCV 2019

- Paper 27: Müller et al (2019) When Does Label Smoothing Help? NeurIPS 2019

- Paper 28: Yuan et al. (2020) Revisiting Knowledge Distillation via Label Smoothing Regularization. CVPR 2020

- Paper 29: Zhang and Sabuncu (2020) Self-Distillation as Instance-Specific Label Smoothing ArXiv 2020

- Paper 30: Mobahi et al. (2020) Self-Distillation Amplifies Regularization in Hilbert Space. ArXiv 2020

self-training / pseudo-labeling methods

- Paper 31: Xie et al (2020) Self-training with Noisy Student improves ImageNet classification. CVPR 2020

- Paper 32: Sohn and Berthelot et al. (2020) FixMatch: Simplifying Semi-Supervised Learning with Consistency and Confidence. ArXiv 2020

- Paper 33: Chen et al. (2020) Self-training Avoids Using Spurious Features Under Domain Shift. ArXiv 2020

Iterated learning/emergence of compositional structure

- Paper 34: Ren et al. (2020) Compositional languages emerge in a neural iterated learning model. ICLR 2020

- Paper 35: Guo, S. et al (2019) The emergence of compositional languages for numeric concepts through iterated learning in neural agents. ArXiv 2020

- Paper 36: Cogswell et al. (2020) Emergence of Compositional Language with Deep Generational Transmission ArXiv 2020

- Paper 37: Kharitonov and Baroni (2020) Emergent Language Generalization and Acquisition Speed are not tied to Compositionality ArXiv 2020

NLP

- Paper 38: Peters et al (2018) Deep contextualized word representations (ELMO), NAACL 2018

- Paper 39: Devlin et al (2019) BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. (BERT) NAACL 2019

- Paper 40: Brown et al (2020) Language Models are Few-Shot Learners (GPT-3, see also GPT-1and 2for more context) ArXiv 2020

- Paper 41: Clark et al (2020) ELECTRA: Pre-training Text Encoders as Discriminators Rather Than Generators ICLR 2020

- Paper 42: He and Gu et al. (2020) REVISITING SELF-TRAINING FOR NEURAL SEQUENCE GENERATION (Unsupervised NMT) ICLR 2020

video/multi-modal data

- Paper 43: Wang and Gupta (2015) Unsupervised Learning of Visual Representations using Videos ICCV 2015

- Paper 44: Misra, Zitnick and Hebert (2016) Shuffle and Learn: Unsupervised Learning using Temporal Order Verification ECCV 2016

- Paper 45: Lu et al (2019) ViLBERT: Pretraining Task-Agnostic Visiolinguistic Representations for Vision-and-Language Tasks, NeurIPS 2019

- Paper 46: Hjelm and Bachman (2020) Representation Learning with Video Deep InfoMax. (VDIM) Arxiv 2020

the role of noise in representation learning

- Paper 47: Bachman, Alsharif and Precup (2014) Learning with Pseudo-Ensembles NeurIPS 2014

- Paper 48: Bojanowski and Joulin (2017 ) Unsupervised Learning by Predicting Noise. ICML 2017

SSL for RL, control and planning

- Paper 49: Pathak et al. (2017) Curiosity-driven Exploration by Self-supervised Prediction (see also a large-scale follow-up) ICML 2017

- Paper 50: Aytar et al. (2018) Playing hard exploration games by watching YouTube (TDC) NeurIPS 2018

- Paper 51: Anand et al. (2019) Unsupervised State Representation Learning in Atari (ST-DIM) NeurIPS 2019

- Paper 52: Sekar and Rybkin et al. (2020) Planning to Explore via Self-Supervised World Models. ICML 2020

- Paper 53: Schwarzer and Anand et al. (2020) Data-Efficient Reinforcement Learning with Momentum Predictive Representations. ArXiv 2020

SSL theory

- Paper 54: Arora et al (2019) A Theoretical Analysis of Contrastive Unsupervised Representation Learning. ICML 2019

- Paper 55: Lee et al (2020) Predicting What You Already Know Helps: Provable Self-Supervised Learning ArXiv 2020

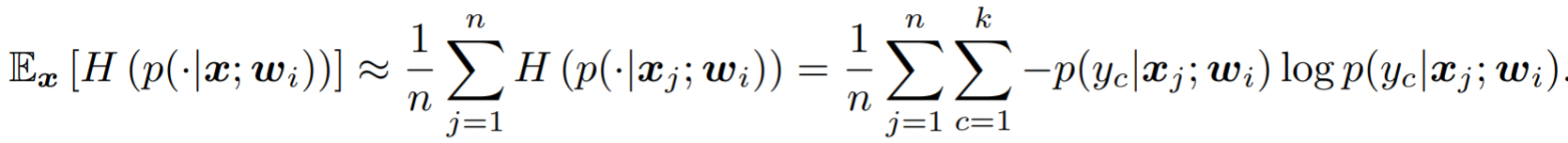

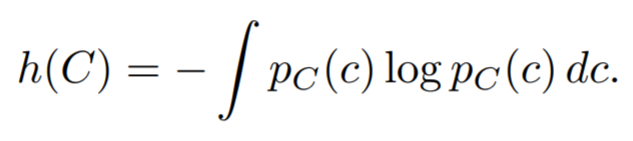

- Paper 56: Tschannen, et al (2019) On mutual information maximization for representation learning. ArXiv 2019.

Unsupervised domain adaption

- Paper 57: Shu et al (2018) A DIRT-T APPROACH TO UNSUPERVISED DOMAIN ADAPTATION. ICLR 2018

- Paper 58: Wilson and Cook (2019) A Survey of Unsupervised Deep Domain Adaptation. ACM Transactions on Intelligent Systems and Technology 2020.

- Paper 59: Mao et al. (2019) Virtual Mixup Training for Unsupervised Domain Adaptation. CVPR 2019

- Paper 60: Vu et al. (2018) ADVENT: Adversarial Entropy Minimization for Domain Adaptation in Semantic Segmentation CVPR 2019

Scaling

- Paper 61: Kaplan et al (2020) Scaling Laws for Neural Language Models. ArXiv 2020

Paper 1: Stacked Denoising Autoencoders: Learning Useful Representations in a Deep Network with a Local Denoising Criterion

Codes:

Previous

- What works much better is to initially use a local unsupervised criterion to (pre)train each layer in turn, with the goal of learning to produce a useful higher-level representation from the lower-level representation output by the previous layer.

- Initializing a deep network by stacking autoencoders yields almost as good a classification performance as when stacking RBMs. But why is it almost as good?

- looking for unsupervised learning principles likely to lead to the learning of feature detectors that detect important structure in the input patterns.

Traditional classifiers training with noisy inputs

- Training with noise is equivalent to applying generalized Tikhonov regularization (Bishop, 1995), which means L2 weight decay penalty but on linear regression with additive noise. For non-linear case, the regularization is more complex. Authors of this paper even show that different result when using DAEmon and when using regular autoencoders with a L2 weight decay.

Pseudo-Likelihood and Dependency Networks

- Pseudo-likelihood , dependency network paradigms etc.

Ideas

stacking layers of denoising autoencoders which are trained locally to denoise corrupted versions of their inputs. Find that denoising autoencoders are able to learn Gabor-like edge detectors from natural image patches and larger stroke detectors from digit images. our results clearly establish the value of using a denoising criterion as an unsupervised objective to guide the learning of useful higher level representations.

Reasoning: what makes good representations

- A good representation is those that retain a significant amount of information about the input, can be expressed in information-theoretic terms as maximizing the mutual information

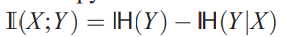

- Mutual information can be decomposed into an entropy and a conditional entropy term in two different ways

- ICA:

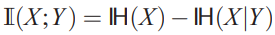

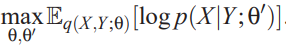

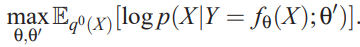

- consider a parameterized distribution $p(X|Y;') $ that parameterized by \(\mathrm{\theta}'\), then

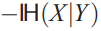

leads to maximizing a lower bound on

leads to maximizing a lower bound on and thus on the mutual information.

and thus on the mutual information. - ICA is when \(Y=f_\theta(X)\).

- As \(q(X)\) is unknown, but with samples, the empirical average over the training samples can be used instead as an unbiased estimate (i.e., replacing \(\mathbb{E}_{q(X)}\) by $_{q^0(X)} $:

- consider a parameterized distribution $p(X|Y;') $ that parameterized by \(\mathrm{\theta}'\), then

- ICA:

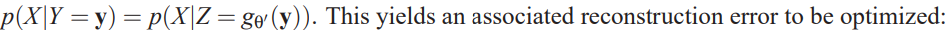

- The equation above corresponds to the reconstruction error criterion used to train autoencoders

:

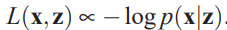

:  To choose \(p(\mathrm{x|z}),L(\mathrm{x|z})\)

To choose \(p(\mathrm{x|z}),L(\mathrm{x|z})\)

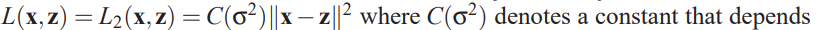

- For real-valued $ $ squared error:

on \(\sigma^2\), the variance of \(X\). Since gaussian, not to use a squashing nonlinearity in the decoder.

on \(\sigma^2\), the variance of \(X\). Since gaussian, not to use a squashing nonlinearity in the decoder. - Binary $ $: finally become cross-entropy loss, which can be used when $ $ is not strictly binary but rather $^d $

- For real-valued $ $ squared error:

- training an autoencoder to minimize reconstruction error amounts to maximizing a lower bound on the mutual information between input X and learnt representation Y

- Merely Retaining Information is Not Enough

- non-zero reconstruction error to separate useful information from noise: traditional AE uses bottleneck to produce an under-complete representation where \(d'<d\), and result in a lossy compressed representation of \(X\). When using affine encoder and decoder without any nonlinearity an a squared error loss, the AE performs PCA actually. But not in cross-entropy loss.

- using over-complete (i.e., higher dimensional than the input) but sparse representations is popular now, which is a special case of imposing on \(Y\) different constraints than that of a lower dimensionality. A sparse over-complete representations can be viewed as an alternative “compressed” representation.

How?

DAE: denoising autoencoder

Procedures

- first denoising: corrupt the initial input $ $ into \(\tilde{\mathrm{x}}\) by a stochastic mapping. This will lead to force the learning of a mapping that extracts features useful for denoising.

- Then map \(\tilde{\mathrm{x}}\) to \(\mathrm{y}\). And reonstruct \(\mathrm{z}\) to be close to the $ $ but a function of \(\mathrm{y}\).

Geometric Interpretation

- Thus stochastic operator \(p(X|\tilde{X})\) learns a map that tends to go from lower probability points \(\tilde{X}\) to nearby high probability points \(X\), on or near the manifold.

- Successful denoising implies that the operator maps even far away points to a small region close to the manifold

- Think of \(Y=f(X)\) as a representation of \(X\) which is well suited to capture the main variations in the data, that is, those along the manifold.

Types of corruption considered

- additive: natural

- masking noise: natural for input domains which are interpretable as binary or near binary such as black an white images or the representation produced at the hidden layer after a signoid squashing function

- salt and pepper noise: same as masking noise, only earse a changing subsets of the inputs components while leaving others untouched

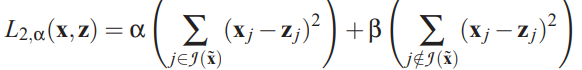

Emphasize corrupted dimensions

only put an emphasis on the corrupted dimensions. Like use \(\alpha\) for the Reconstruction error on corrupted components and \(\beta\) for untouched components

Sqaured loss

Cross-entropy loss

If \(\alpha=1, \beta=0\), then this is full emphasis that only consider the error on the prediction of corrupted elements

Stacking DAE for deep architecture

Experiments

Single DAE

- feature detectors from natural image patches

- The under-complete autoencoder appears to learn rather uninteresting local blob detectors. Filters obtained in the overcomplete case have no recognizable structure, looking entirely random

- training with sufficiently large noise yields a qualitatively very different outcome than training with a weight decay regularization and this proves that the two are not equivalent for a non-linear autoencoder.

- Salt-and-pepper noise yielded Gabor-like edge detectors, whereas masking noise yielded a mixture of edge detectors and grating filters. They all yield some potentially useful edge detectors.

- feature detectors from handwrite digits

- With increased noise levels, a much larger proportion of interesting (visibly non random and with a clear structure) feature detectors are learnt. These include local oriented stroke detectors and detectors of digit parts such as loops.

- But denoising a more corrupted input requires detecting bigger, less local structures.

- feature detectors from natural image patches

Stacked DAE (SDAE): compare with SAE and DBN.

- Classification problem and experimental methodology: As increasing the noise level, denoising training forces the filters to differentiate more, and capture more distinctive features. Higher noise levels tend to induce less local filters, as expected.

- Compared with other strategies: denoising pretraining with a non-zero noise level is a better strategy than pretraining with regular autoencoders

- Influence of Number of Layers, Hidden Units per Layer, and Noise Level

- Depth: denoising pretraining being better than autoencoder pretraining being better than no pretraining. The advantage appears to increase with the number of layers and with the number of hidden units

- noise levels: SDAE appears to perform better than SAE (0 noise) for a rather wide range of noise levels, regardless of the number of hidden layers.

Denoising pretraining v.s. training with noisy input

- Note: SDAE uses a denoising criterion to learn good initial feature extractors at each layer that will be used as initialization for a noiseless supervised training; which is different from training with noisy inputs that amounts to training with a virtually expanded data set.

- Denoising pretraining with SDAE, for a large range of noise levels, yields significantly improved performance, whereas training with noisy inputs sometimes degrades the performance, and sometimes improves it slightly but is clearly less beneficial than SDAE.

Variations on the DAE, alternate corruption types and emphasizing

- An emphasized SDAE with salt-and-pepper noise appears to be the winning SDAE variant.

- A judicious choice of noise type and added emphasis may often buy us a better performance.

Are Features Learnt in an Unsupervised Fashion by SDAE Useful for SVMs?

- SVM performance can benefit significantly from using the higher level representation learnt by SDAE

- linear SVMs can benefit from having the original input processed non-linearly

Generating Samples from Stacked Denoising Autoencoder Networks

- Top-Down Generation of a Visible Sample Given a Top-Layer Representation: it is thus possible to generate samples at one layer from the representation of the layer above in the exact same way as in a DBN.

- Bottom-up (infer representation of the top layer based on representation on the bottom layer) is similar as approximate inference of a factorial Bernoulli top-layer distribution given the low level input. The top-layer representation is to be understood as the parameters (the mean) of a factorial Bernoulli distribution for the actual binary units.

- SDAE and DBN are able to resynthesize a variety of similarly good quality digits, whereas the SAE trained model regenerates patterns with much visible degradation in quality, this also prove that evidence of the qualitative difference resulting from optimizing a denoising criterion instead of mere reconstruction criterion.

- Contrary to SAE, the regenerated patterns from SDAE or DBN look like they could be samples from the same unknown input distribution that yielded the training set.

Paper 2: Revisiting Self-Supervised Visual Representation Learning

https://github.com/google/revisiting-self-supervised

Previous

- Compared with pretext task, the choice of CNN has not received equal attention

- patch-based self-supervised visual representation learning methods: predicting the relative location of image patches; “jigsaw puzzle” created from the full image etc.

- image-level classification tasks: randomly rotate an image by one of four possible angles and let the model predict that rotation; use clustering of the images

- tasks with dense spatial outputs: image inpainting, image colorization, its improved variant split-brain and motion segmentation prediction.

- equivariance relation to match the sum of multiple tiled representations to a single scaled representation; predict future patches in via autoregressive predictive coding.

- many works have tried to combine multiple pretext tasks in one way or another

What?

- Standard architecture design recipes do not necessarily translate from the fully-supervised to the self-supervised setting. Architecture choices which negligibly affect performance in the fully labeled setting, may significantly affect performance in the self-supervised setting.

- The quality of learned representations in CNN architectures with skip-connections does not degrade towards the end of the model.

- Increasing the number of filters in a CNN model and, consequently, the size of the representation significantly and consistently increases the quality of the learned visual representations.

- The evaluation procedure, where a linear model is trained on a fixed visual representation using stochastic gradient descent, is sensitive to the learning rate schedule and may take many epochs to converge.

How

- revisit a prominent subset of the previously proposed pretext tasks and perform a large-scale empirical study using various architectures as base models.

- The CNN candidates

- ResNet:

- RevNet: stronger invertibility guarantees while being structurally similar to ResNets. Set it to have the same depth and number of channels as the original Resnet50 model.

- VGG: no skip, has BN

- The pretext tasks candidates

- Rotation: 0,90,180,270

- Exemplar: heavy random data augmentation such as translation, scaling, rotation, and contrast and color shifts

- Jigsaw: recover relative spatial position of 9 randomly sampled image patches after a random permutation of these patches. They extract representations by averaging the representations of nine uniformly sampled, colorful, and normalized patches of an image.

- Relative patch location: 8 possible relative spatial relations between two patches need to be predicted. use the same patch prepossessing as in the Jigsaw model and also extract final image representations by averaging representations of 9 cropped patches.

- Dataset candidates

- ImageNet

- Places205

- Evaluation protocol

- measures representation quality as the accuracy of a linear regression model trained and evaluated on the ImageNet dataset

- the pre-logits of the trained self-supervised networks as representation.

Experiments

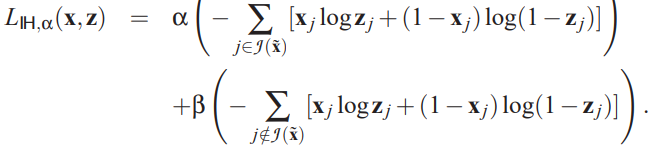

similar models often result in visual representations that have significantly different performance. Importantly, neither is the ranking of architectures consistent across different methods, nor is the ranking of methods consistent across architectures

increasing the number of channels in CNN models improves performance of self-supervised models.

ranking of models evaluated on Places205 is consistent with that of models evaluated on ImageNet, indicating that our findings generalize to new datasets.

self-supervised learning architecture choice matters as much as choice of a pretext task

MLP provides only marginal improvement over the linear evaluation and the relative performance of various settings is mostly unchanged. We thus conclude that the linear model is adequate for evaluation purposes.

Better performance on the pretext task does not always translate to better representations. For residual architectures, the pre-logits are always best.

Skip-connections prevent degradation of representation quality towards the end of CNNs. We hypothesize that this is a result of ResNet’s residual units being invertible under some conditions. RevNet, boosts performance by more than 5 % on the Rotation task, albeit it does not result in improvements across other tasks.

Model width and representation size strongly influence the representation quality: disentangle the network width from the representation size by adding an additional linear layer to control the size of the pre-logits layer. self-supervised learning techniques are likely to benefit from using CNNs with increased number of channels across wide range of scenarios.

SGD optimization hyperparameters play an important role and need to be reported. very long training (≈ 500 epochs) results in higher accuracy. They decay lr at 480 epochs.

Paper 3: Zhai et al (2019) A Large-scale Study of Representation Learning with the Visual Task Adaptation Benchmark (A GLUE-like benchmark for images)

https://github.com/google-research/task_adaptation

Previous

- the absence of a unified evaluation for general visual representations hinders progress. Each sub-domain has its own evaluation protocol, and the lack of a common benchmark impedes progress.

- Popular protocols are often too constrained (linear classification), limited in diversity (ImageNet, CIFAR, Pascal-VOC), or only weakly related to representation quality (ELBO, reconstruction error)

What?

- present the Visual Task Adaptation Benchmark (VTAB), which defines good representations as those that adapt to diverse, unseen tasks with few examples. (i) minimal constraints to encourage creativity, (ii) a focus on practical considerations, and (iii) make it challenging.

- Conduct a large-scale study of many popular publicly-available representation learning algorithms on VTAB.

- They found:

- Supervised ImageNet pretraining yields excellent representations for natural image classification tasks

- Self-supervised is less effective than supervised learning overall, but surprisingly, can improve structured understanding

- Combining supervision and self-supervision is effective, and to a large extent, self-supervision can replace, or compliment labels.

- Discriminative representations appear more effective than those trained as part of a generative model, with the exception of adversarially trained encoders.

- GANs perform relatively better on data similar to their pre-training source (here, ImageNet), but worse on other tasks.

- Evaluation using a linear classifier leads to poorer transfer and different conclusions.

How?

- To design, must ensure that the algorithms are not pre-exposed to specific evaluation samples.

- VTAB benchmark

- practical benchmark

- Define task distribution as Tasks that a human can solve, from visual input alone.”

- For each evaluation sample a new task.

- mitigating meta-overfitting : treat the evaluation tasks unseen, and thus algorithms use pretraining must not pre-train on any of the evaluation tasks.

- Unified implementation: algorithms must have no prior knowledge of the downstream tasks, and hyperparameter searches need to work well across the benchmark.

- All tasks are classification in this paper, such as the detection task is mapped to the classification of the \((x,y,z)\) coordinates. The diverse set of visual features are learnt from object identification, scene classification, pathology detection, counting, localization and 3D geometry.

- Tasks

- NATURAL: classical vision problems, group includes Caltech101, CIFAR100, DTD, Flowers102, Pets, Sun397, and SVHN

- SPECIALIZED: images are captured through specialist equipment, and human can recognize the structures. One is remote sensing, the other is medical

- STRUCTURED: assesses comprehension of the structure of a scene, for example, object counting, or 3D depth prediction.

- pretraining on pretext tasks and then fine tune

- practical benchmark

- The methods are divided into five groups: Generative, training from-scratch, all methods using 10% labels (Semi Supervised), and all methods using 100% labels (Supervised),

Experiments

- Upstream, control the data and architecture (pretrained on ImageNet). They find bigger architectures perform better on VTAB, and use resnet or resnet-similar nets for all models as the encoder.

- Downstream, run VTAB in two modes: the light weight mode sweepstakes 2 initial learning rates and 2 learning rate schedules but fix other parameters while the heavy weights perform a large random search over learning rate, schedule, optimizers, batch size, train preprocessing functions, evaluation preprocessing and weight decay. The main study is done in lightweight.

- Evaluate with top-1 accuracy. To aggregate scores across tasks, take the mean accuracy.

- Lightweight

- Generative models perform worst, GANs fit more strongly to ImageNet’s domain (natural images), than self-supervised alternatives.

- All self-supervised representations outperform from-scratch training. Methods applied to the entire image outperform patch-based method, and these tasks require sensitivity to local textures. self supervised is worst than supervised on natural tasks, similar on specialized tasks and slightly better than structured tasks.

- Supervised models perform the best. additional self-supervision even improves on top of 100% labelled ImageNet, particularly on STRUCTURED tasks

- Heavyweight

- across all task groups, pre-trained representations are better than a tuned from-scratch model.

- a combination supervision and self-supervision (SUP-EXEMPLAR-100%) getting the best performance.

- Frozen feature extractors

- linear evaluation significantly lowers performance, even when downstream data is limited to 1000 examples. Linear transfer would not by used in practice unless infrastructural constraints required it. These self-supervised methods extract useful representations, just without linear separability. Linear evaluation results are sensitive to additional factors that we do not vary, such as ResNet version or pre-training regularization parameters.

- Vision benchmarks

- The methods ranked according to VTAB are more likely to transfer to new tasks, than those ranked according to the Visual Decathlon

- VTAB is more flexible than Facebook AI SSL challenge, such as the diversity of domain, the evaluation form.

- Meta-dataset: designed for few-shot learning rather than 1000 examples which may entail different solutions.

- Some details

- while training from scratch , inception crop, horizontal flip preprocessing, 1e-3 in weight decay, 0.1~1 lr in SGD mostly give good results.

- While doing SSL by ImageNet and then finetuning, Inception crop, non-horizontal flip give good results.

Discussion

- how effective are supervised ImageNet representations? ImageNet labels are indeed effective for natural tasks.

- how do representations trained via generative and discriminative models compare? The generative losses seem less promising as means towards learning how to represent data. BigBiGAN is notable.

- To what extent can self-supervision replace labels?

- self-supervision can almost (but not quite) replace 90% of ImageNet labels; the gap between pre-training on 10% labels with self-supervison, and 100% labels, is small

- self-supervision adds value on top of ImageNet labels on the same data.

- simply adding more data on the SPECIALIZED and STRUCTURED tasks is better than the pre-training strategies we evaluated

- Varying other factors to improve VTAB is valuable future research. The only approach that is out-of-bounds is to condition the algorithm explicitly on the VTAB tasks

Paper 4: A critical analysis of self-supervision, or what we can learn from a single image

https://github.com/yukimasano/linear-probes

Previous

- For a given model complexity, pre-training by using an off-the-shelf annotated image datasets such as ImageNet remains much more efficient.

- Methods often modify information in the images and require the network to recover them. However, features are learned on modified images which potentially harms the generalization to unmodified ones.

- Learning from a single sample

- Object tracking: max margin correlation filters learn robust tracking templates from a single sample of the patch.

- learn and interpolate multi-scale textures with a GAN framework

- semi-parametric exemplar SVM model

- we do not use a large collection of negative images to train our model. Instead we restrict ourselves to a single or a few images with a systematic augmentation strategy.

- Classical learned and hand-crafted low-level feature extractors: insufficient to clarify the power and limitation of self-supervision in deep networks.

What

- Aim to investigate the effectiveness of current self-supervised approaches by characterizing how much information they can extract from a given dataset of images. Then try to answer whether a large dataset is beneficial to unsupervised learning, especially for learning early convolutional features

- Three different and representative methods, BiGAN, RotNet and DeepCluster, can learn the first few layers of a convolutional network from a single image as well as using millions of images and manual labels, provided that strong data augmentation is used.

- For deeper layers the gap with manual supervision cannot be closed even if millions of unlabelled images are used for training.

- Conclusion

- the weights of the early layers of deep networks contain limited information about the statistics of natural images

- such low-level statistics can be learned through self-supervision just as well as through strong supervision, and that

- the low-level statistics can be captured via synthetic transformations instead of using a large image dataset. (training these layers with self-supervision and a single image already achieves as much as two thirds of the performance that can be achieved by using a million different images.)

How

- Data: use DAA augmentation to replace some source images.

- Augmentations: involving cropping, scaling, rotation, contrast changes, and adding noise. Augmentation can be seen as imposing a prior on how we expect the manifold of natural images to look like

- limit the size of cropped patch: the smallest size of the crops is limited to be at least βWH and at most the whole image. Additionally, changes to the aspect ratio are limited by γ. In practice we use β = 10e−3 and γ = 3/4 .

- before cropping, rotate in \((-35,35)\) degrees. And also flip images in 50% possibility

- linear transformation in RGB space, color jitter with additive brightness, contrast and saturation

- Real samples: give some drawn samples which are not real captured but has plenty of textures and in small size, and a real picture in large size but with large areas no objects.

- Augmentations: involving cropping, scaling, rotation, contrast changes, and adding noise. Augmentation can be seen as imposing a prior on how we expect the manifold of natural images to look like

- Representation learning methods

- BiGAN with leaky ReLU nonlinearities in discriminators

- Rotation: do it on horizontal flips and non-scaled random crops to 224 × 224

- clustering:

Experiments

- ImageNet and CIFAR-10/100 using linear probes

- Base encoder: AlexNet. They insert the probes right after the ReLU layer in each block

- Learning lasts for 36 epochs and the learning rate schedule starts from 0.01 and is divided by five at epochs 5, 15 and 25

- extracting 10 crops for each validation image (four at the corners and one at the center along with their horizontal flips) and averaging the prediction scores before the accuracy is computed.

- Effect of augmentations: Random rescaling adds at least ten points at every depth (see Table 1 (f,h,i)) and is the most important single augmentation. Color jittering and rotation slightly improve the performance of all probes by 1- 2% points

- Benchmark evaluation

- Mono is enough: Mono means train with one source image and its augmented images.

- Image contents:

- RotNet cannot extract photographic bias from a single image. the method can extract rotation from low level image features such as patches which is at first counter intuitive=> lighting and shadows even in small patches can indeed give important cues on the up direction which can be learned even from a single (real) image.

- the augmentations can even compensate for large untextured areas and the exact choice of image is not critical. A trivial image without any image gradient (e.g. picture of a white wall) would not provide enough signal for any method.

- More than one image

- for conv1 and conv2, a single image is enough

- In deeper layers, DeepCluster seems to require large amounts of source images to yield the reported results as the deka- and kilo- variants start improving over the single image case

- Generalization

- GAN trained on the smaller Image B outperforms all other methods including the fully-supervised trained one for the first convolutional layer

- our method allows learning very generalizable early features that are not domain dependent.

- the neural network is only extracting patterns and not semantic information because we do not find any neurons particularly specialized to certain objects even in higher levels as for example dog faces or similar which can be fund in supervised networks

Paper 5: Representation Learning with Contrastive Predictive Coding (CPC), ArXiv 2018

Previous

- It is not always clear what the ideal representation is and if it is possible that one can learn such a representation without additional supervision or specialization to a particular data modality.

- One of the most common strategies for unsupervised learning has been to predict future, missing or contextual information

- Recently in unsupervised learning some learn word representations by predicting neighboring words.

- For images, predicting color from grey-scale or the relative position of image patches

- predicting high-dimensional data

- unimodal losses such as mean-squared error and cross-entropy are not very useful

- powerful conditional generative models which need to reconstruct every detail in the data are usually required.

What

- Main contributions

- Compress features into a latent embedding space in which conditional predictions are easier to model.

- predict the future in latent space by autoregressive models

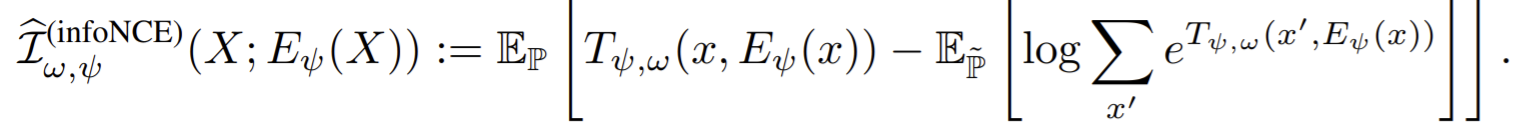

- Use NCE loss

- Intuition

- learn the representations that encode the underlying shared information between parts of the signal

- meanwhile discard low-level information and noise that is more local.

How

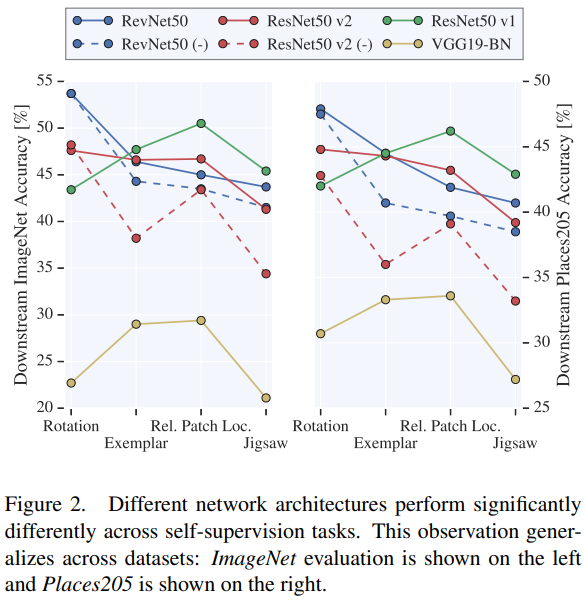

- use a NCE which induces the latent space to capture information that is maximally useful to predict future samples

- Model a density ratio which preserves the mutual information between \(x_{t+k},c_t\) as \(f_k(x_{t+k},c_t)\propto \frac{p(x_{t+k}|c_t)}{p(x_{t+k})}\), they choose \(f_k(x_{t+k},c_t)=\exp(z_{t+k}^TW_kc_t)\), the log-bilinear model.

Experiments

For every domain we train CPC models and probe what the representations contain with either a linear classification task or qualitative evaluations.

- Audio

- use a 100-hour subset of the publicly available LibriSpeech dataset

- use a GRU for the autoregressive part of the model

- We found that not all the information encoded is linearly accessible.

- CPCs capture both speaker identity and speech contents

- Vision

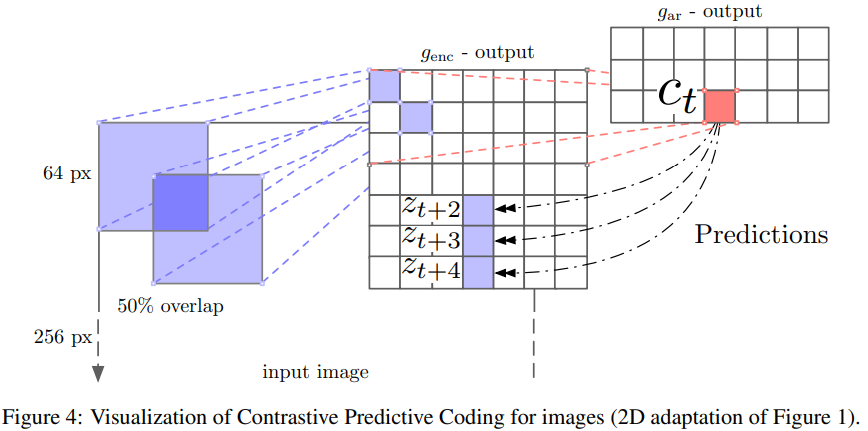

- Use ImageNet and ResNet v2 101, no BN. use the outputs from the third residual block, and spatially mean-pool to get a single 1024-d vector per 64x64 patch. This results in a 7x7x1024 tensor. Next, we use a PixelCNN-style autoregressive model to make predictions about the latent activations in following rows top-to-bottom.

- CPCs improve upon state-of-the-art by 9% absolute in top-1 accuracy, and 4% absolute in top-5 accuracy.

- Natural language

- a linear mapping is constructed between word2vec and the word embeddings learned by the model. A L2 regularization weight was chosen via cross-validation (therefore nested cross-validation for the first 4 datasets)

- found that more advanced sentence encoders did not significantly improve the results, which may be due to the simplicity of the transfer tasks, and the fact that bag-of-words models usually perform well on many NLP tasks.

- The performance of our method is very similar to the skip-thought vector model, with the advantage that it does not require a powerful LSTM as word-level decoder, therefore much faster to train

- Reinforcement learning

- take the standard batched A2C agent as base model and add CPC as an auxiliary loss

- The unroll length for the A2C is 100 steps and we predict up to 30 steps in the future to derive the contrastive loss

- 4 out of the 5 games performance of the agent improves significantly with the contrastive loss after training on 1 billion frames.

Paper 6: Learning deep representations by mutual information estimation and maximization (DIM Deep InfoMax ) ICLR 2019

https://github.com/rdevon/DIM

Previous

- in typical settings, models with reconstruction-type objectives provide some guarantees on the amount of information encoded in their intermediate representations.

- MI estimation

- MINE: strongly consistent, can be used to learn better implicit bidirectional generative models

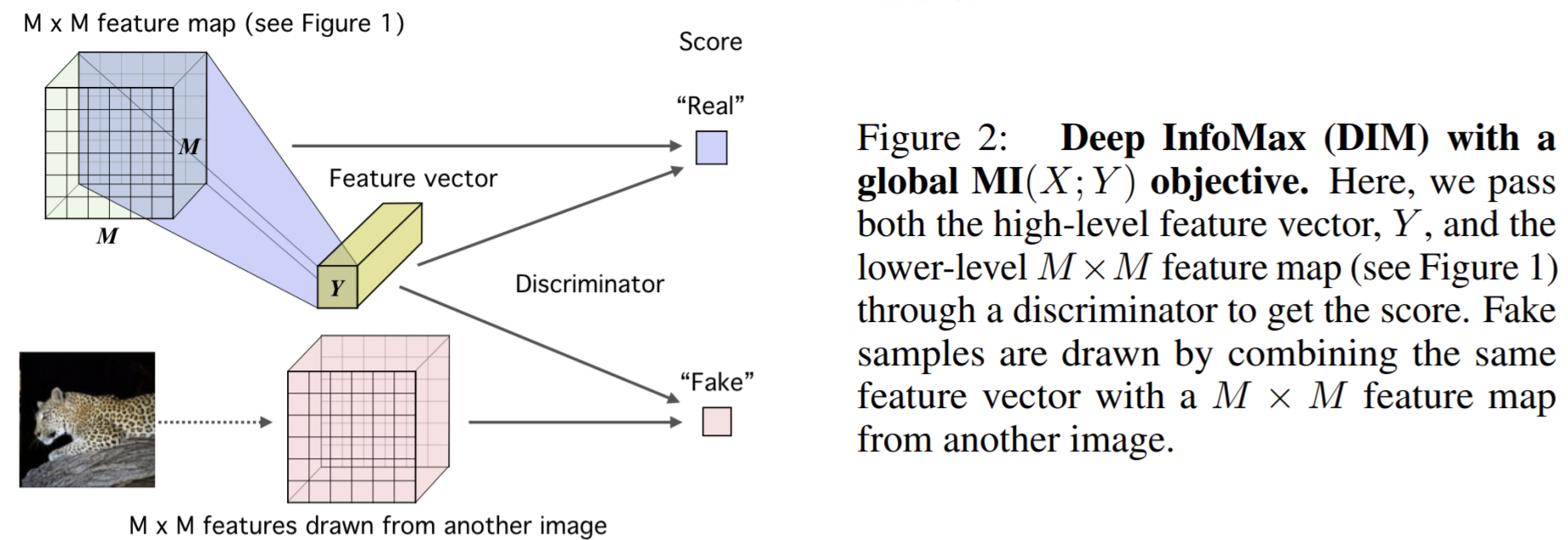

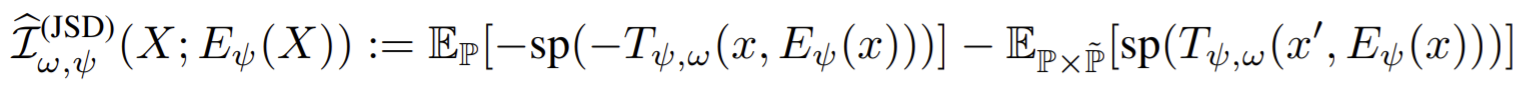

- DIM: follow MINE, but they find the generator is unnecessary. And no necessary for the exact KL-divergence, alternatively the JSD is more stable and provides better results.

- CPC and DIM

- CPC: make predictions about specific local features in the “future” of each summary feature. This equates to ordered autoregression over the local features, and requires training separate estimators for each temporal offset at which one would like to predict the future.

- DIM uses a single summary feature that is a function of all local features, and this “global” feature predicts all local features simultaneously in a single step using a single estimator.

What

- structure matters: maximizing the average MI between the representation and local regions of the input can improve performance while maximizing MI between the complete input and the encoder output not always do this.

- JSD helps MI estimation. JSD and DVD all maximize the expected log-ratio of the joint over the product of marginals.

How

Mutual information estimation and maximization

Basic MI maximization framework

share layers between encoder and mutual information

The different losses of DIM with different estimator

- With non-KL divergences such as JSD:

- With NCE,

- For DIM, a key difference between the DV, JSD, and infoNCE formulations is whether an expectation over \(\mathrm{\mathbb{P/ \tilde{P}}}\) appears inside or outside of a \(\log\). DIM sets the noise distribution to the product of marginals over \(X/Y\) , and the data distribution to the true joint.

- With non-KL divergences such as JSD:

infoNCE often outperforms JSD on downstream tasks, though this effect diminishes with more challenging data and also requires more negative samples compare with the JSD version.

DIM with the JSD loss is insensitive to the number of negative samples, and in fact outperforms infoNCE as the number of negative samples becomes smaller.

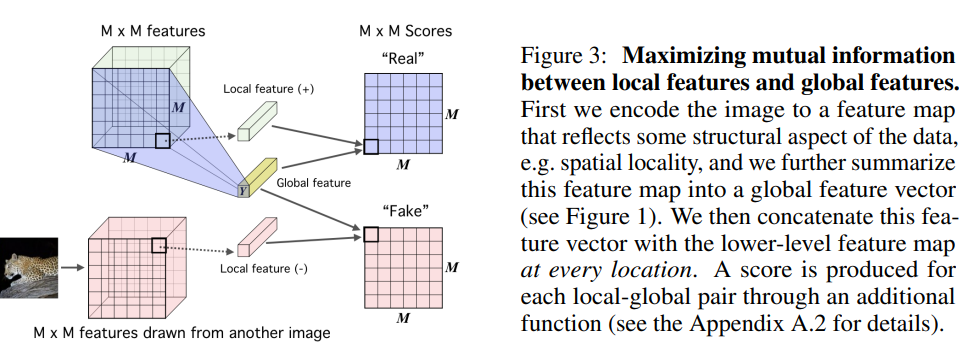

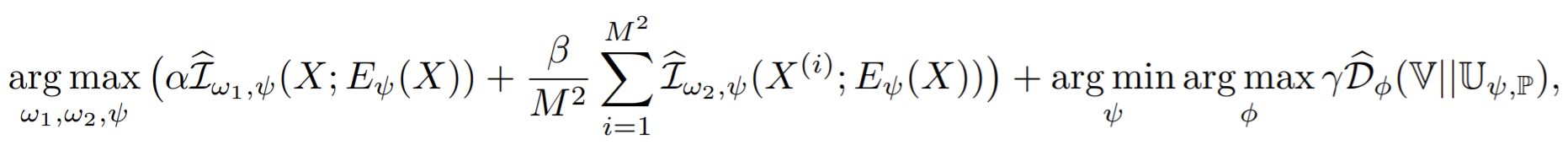

Local mutual information maximization

- To obtain a representation more suitable for classification, one can maximize the average MI between the high-level representation and local patches of the image.

- summarize this local feature map into a global feature

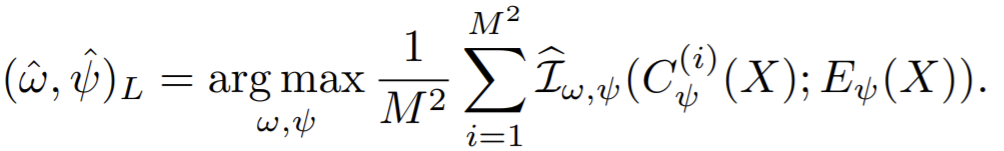

- then the MI estimator on global/local pairs, maximizing the average estimated MI:

Matching representation to a prior distribution

A good representation can be compact, independent, disentangled or independently controllable.

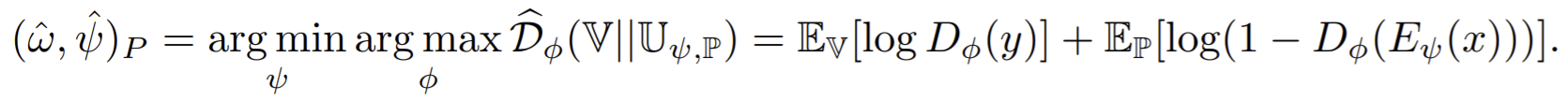

DIM imposes statistical constraints onto learned representations by implicitly training the encoder so that the push-forward distribution, \(\mathrm{\mathbb{U}}_{\psi,\mathrm{\mathbb{P}}}\), matches a prior, \(\mathrm{\mathbb{V}}\).

This is done by training a discriminator \(D_\phi: \mathcal{Y}\rightarrow \mathrm{\mathbb{R}}\) to estimate the divergence, \(\mathcal{D}(\mathrm{\mathbb{V}}|\mathrm{\mathbb{U}}_{\psi,\mathrm{\mathbb{P}}})\). Then training the encoder to minimize

trains the encoder to match the noise implicitly rather than using a priori noise samples as targets

Complete loss

Experiments

- Datasets: CIFAR10+CIFAR100, Tiny ImageNet, STL-10, CelebA (a face image dataset )

- Compared methods: VAE, \(\beta\)-VAE, adversarial AE, BiGAN, NAT, and CPC.

Evaluate the quality of a representation

- Linear separability has no help in showing the representation has high MI with the class labels when the representation is not disentangled.

- To measure:

- They use MINE to more directly measure the MI between the input the the output of the encoder.

- NDM (neural dependency measure): Then measure the independence of the representation using a discriminator. train a discriminator to estimate the KL-divergence between the original representations (joint distribution of the factors) and the shuffled representations. The higher the KL-divergence, the more dependent the factors. NDM is sensible and empirically consistent.

- The classification

- Linear classification

- Non-linear classification (with a single hidden layer NN)

- Semi-supervised learning: finetuning the encoder by adding a small NN.

- MS-SSIM: decoder trained on the L2 Reconstruction loss.

- MINE: maximized the DV estimator of the KL-divergence

- NDM: using a second discriminator to measure the KL between \(E_\psi(x)\) and a batch-wise shuffled version of \(E_\psi(x)\).

Representation learning comparison across models

- Test DIM(G) the global only, DIM (L) the local only and ablation study.

- Classification:

- DIM(L) outperforms all models. The representations are as good as or better than the raw pixels given the model constraints in this setting

- infoNCE tends to perform best, but differences between infoNCE and JSD diminish with larger datasets

- Overall DIM only slightly outperforms CPC in this setting, which suggests that the strictly ordered autoregression of CPC may be unnecessary for some tasks.

- Extended comparison: For MI, DIM combining local and global DIM objectives had very high scores .

- Adding coordinate information and occlusions

- can be interpreted as context prediction and generalizations of inpainting respectively.

- For occlusion: occluded part of input for global representations, but no occlusion for local representations. Maximizing MI between occluded global features and unoccluded local features aggressively encourages the global features to encode information which is shared across the entire image.

Appendix

- KL (traditional definition of mutual information) and the JSD have an approximately monotonic relationship. Overall, the distributions with the highest mutual information also have the highest JSD.

- We found both infoNCE and the DV-based estimators were sensitive to negative sampling strategies, while the JSD-based estimator was insensitive.

- DIM with a local-only objective, DIM(L), learns a representation with a much more interpretable structure across the image.

- In general, good classification performance is highly dependent on the local term, \(\beta\), while good reconstruction is highly dependent on the global term, \(\alpha\). the local objective is crucial, the global objective plays a stronger role here than with other datasets.

Paper 7: Contrastive Multiview Coding (CMC) ArXiv 2019

http://github.com/HobbitLong/CMC/

Previous

- some bits are in fact better than others.

- In these models, an input \(X\) to the model is transformed into an output \(\hat{X}\), which is supposed to be close to another signal \(Y\) (usually in Euclidean space), which itself is related to \(X\) in some meaningful way. and provides us with nearly infinite amounts of training data.

- The objective functions are usually reconstruction-based loss or contrastive losses.

- CPC learns from the past and the future view simultaneously, while Deep InfoMax takes the past as the input and the future as the output. They both use instance discrimination learns to match two sub-crops of the same image.

- They extend the objective to the case of more than two views and explore a different set of view definitions, architectures and application settings.

What

- Idea: a powerful representation is one that models view-invariant factor. We learn a representation that aims to maximize mutual information between different views of the same scene but is otherwise compact.

- study the setting where the different views are different image channels, such as luminance, chrominance, depth, and optical flow. The fundamental supervisory signal we exploit is the co-occurrence, in natural data, of multiple views of the same scene.

- Goal: learn information shared between multiple sensory channels but that are otherwise compact (i.e. discard channel-specific nuisance factors). we learn a feature embedding such that views of the same scene map to nearby points (measured with Euclidean distance in representation space) while views of different scenes map to far apart points.

- Use CPC as the backbone but remove the recurrent network part.

- We find that the quality of the representation improves as a function of the number of views used for training.

- demonstrate that the contrastive objective is superior to cross-view prediction.

How

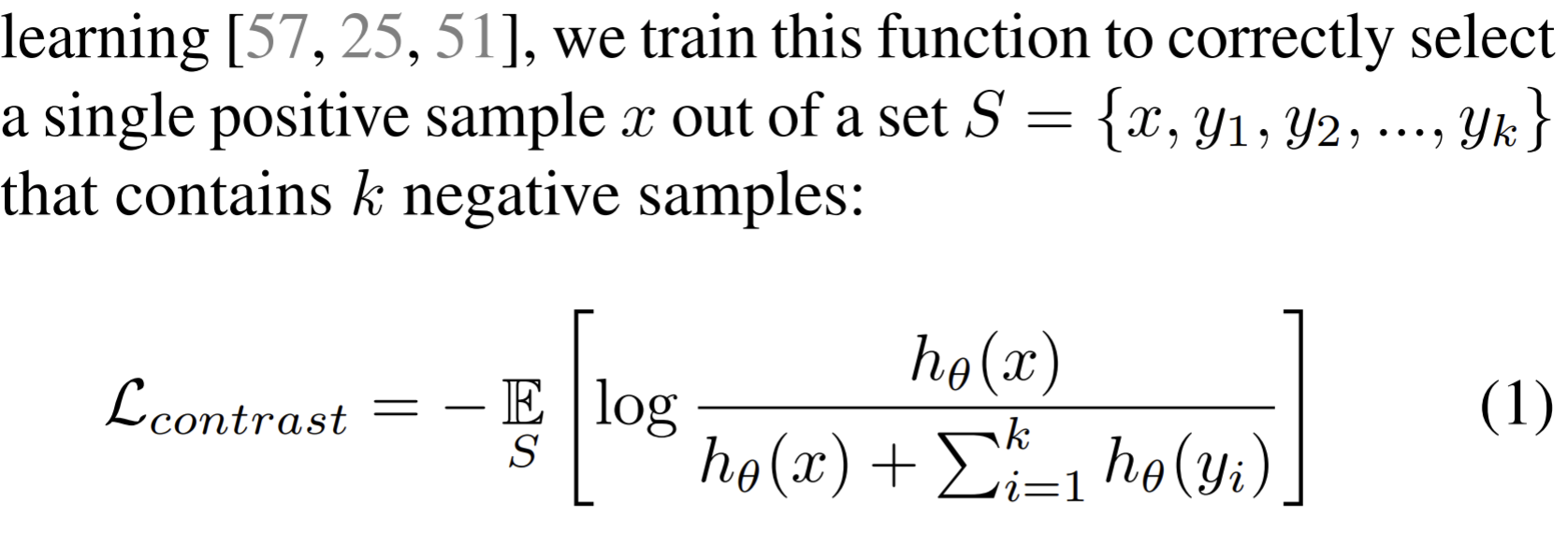

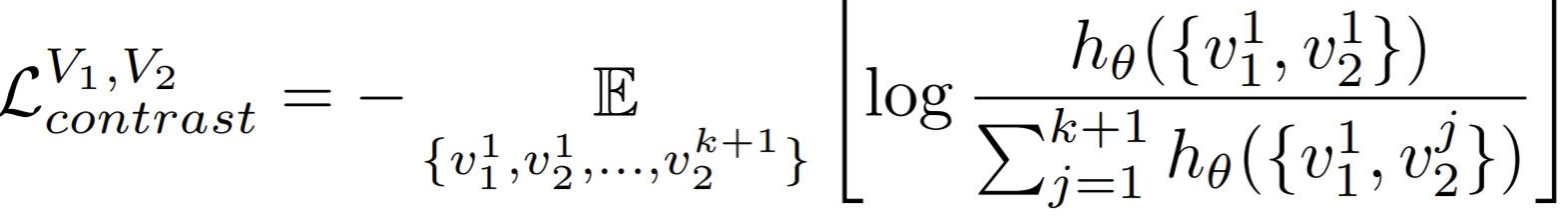

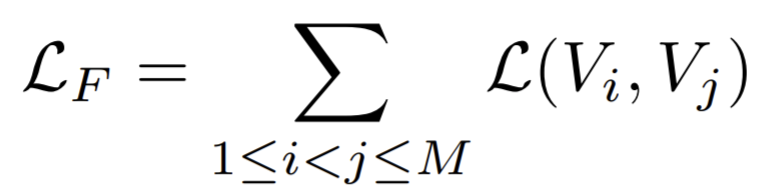

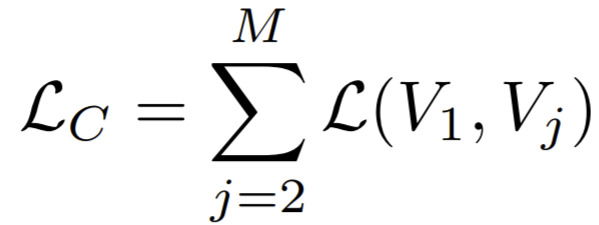

Suppose a dataset of \(V_1, V_2\) that consists of a collection of samples \(\{v_1 ^i, v_2 ^i\}_{i=1}^N\), consider $x={v_1 ^i, v_2 ^i} $ as the positive and the \(y=\{v_1 ^i, v_2 ^j\}\) as the negatives, aka the positive are the same picture in different views while the negative are the dissimilar images in different views.

, simply fix one view and enumerate positives and negatives from the other view, then the objective is written as

, simply fix one view and enumerate positives and negatives from the other view, then the objective is written as

But directly minimizing the above function is infeasible since \(k\) is pretty large. To approximate,

- Implementing the critic: implement \(h_\theta(\cdot)\) as a neural network. For each view, build a NN as the encoder, then compute the features cosine similarity as score and adjust its dynamic range by a hyper-parameter \(\tau\). The two view loss is then \(\mathcal{L} (V_1,V_2) = \mathcal{L}_{constrast}^{V_1,V_2}+\mathcal{L}_{constrast}^{V_2,V_1}\).

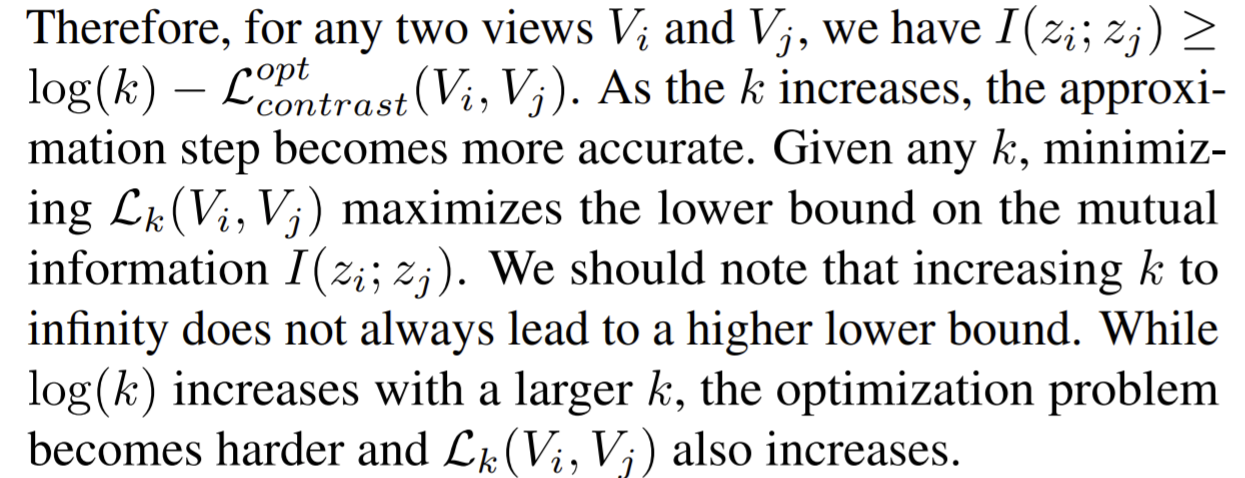

- Connecting to mutual information: minimizing the objective L maximizes the lower bound on the mutual information. But recent works show that the bound can be very weak.

Multi-views

- the full graph formulation is that it can handle missing information (e.g. missing views) in a natural manner.

- The core view is

- the full graph formulation is that it can handle missing information (e.g. missing views) in a natural manner.

To estimate, they use memory bank to store latent features for each training sample.

Experiments

- Benchmarks: ImageNet and STL-10.

Benchmarking CMC on ImageNet

- Convert the RGB images to the Lab image color space and split each image into L and ab channels.

- L and ab from the same image are treated as the positive pair, and ab channels from other randomly selected images are treated as a negative pair (for a given L)

- set the temperature τ as 0.07 and use a momentum 0.5 for memory update.

- learning from luminance and chrominance views in two colorspaces, {L, ab} and {Y, DbDr}.

- {Y, DbDr} provides \(0.7\%\) improvement, strengthening data augmentation with RandAugment yields better or comparable results to other SOTA methods.

CMC on videos

- given an image it that is a frame centered at time \(t\), the ventral stream associates it with a neighbouring frame \(i_{t+k}\), while the dorsal stream connects it to optical flow \(f_t\) centered at \(t\).

- extract \(i_t\), \(i_{t+k}\) and \(f_t\) from two modalities as three views of a video.

- Take \((i_t,i_{t+k})\) as the positive, and negative pairs for \(i_t\) is chosen as a random frame from another randomly chosen video;

- Take \((i_t,f_t)\) as the positive, then negative pairs for \(i_t\) are those flow corresponding to a random frame in another randomly chosen video.

- Pretrain the encoder on UCF101 and use two CaffeNets for extracting features from images and optical flows.

- Increasing the number of views of the data from 2 to 3 (using both streams instead of one) provides a boost for UCF-101

Extending CMC to more views

- Consider views: luminance (L channel), chrominance (ab channel), depth, surface normal, and semantic labels.

- the sub-patch based contrastive objective to increase the number of negative pairs

- Does representation quality improve as number of views increases?

- UNet style architecture

- The 2-4 view cases contrast L with ab, and then sequentially add depth and surface normals.

- measured by mean IoU over all classes and pixel accuracy.

- performance steadily improves as new views are added

- Is CMC improving all views?

- train these encoders following the full graph paradigm, where each view is contrasted with all other views.

- evaluate the representation of each view v by predicting the semantic labels from only the representation of v, where v is L, ab, depth or surface normals.

- the full-graph representation provides a good representation learnt for all views.

Predictive Learning vs. Contrastive Learning

- consider three view pairs on the NYU-Depth dataset: (1) L and depth, (2) L and surface normals, and (3) L and segmentation map. For each of them, we train two identical encoders for L, one using contrastive learning and the other with predictive learning.

- evaluate the representation quality by training a linear classifier on top of these encoders on the STL-10 dataset

- For predictive learning, pixel-wise reconstruction losses usually impose an independence assumption on the modeling. While contrastive learning does not assume conditional independence across dimensions of \(v_2\). Also the use of random jittering and cropping between views allows the contrastive learning approach to benefit from spatial co-occurrence (contrasting in space) in addition to contrasting across views.

How does mutual information affect representation quality?

cross-view representation learning is effective because it results in a kind of information minimization, discarding nuisance factors that are not shared between the views.

a good collection of views is one that shares some information but not too much

To test, build two domains: learning representations on images with different colorspaces forming the two views; and learning representations on pairs of patches extracted from an image, separated by varying spatial distance. (use high resolution images to avoid overlapping and cropped patches around boundary.)

using colorspaces with minimal mutual information give the best downstream accuracy

For patches with different offset with each other, views with too little or too much MI perform worse.

the relationship between mutual information and representation quality is meaningful but not direct.

patch-based contrastive loss is computed within each mini-batch and does not require a memory bank, but usually yields suboptimal results compared to NCE-based contrastive loss, according to our experiments

combining CMC with the MoCo mechanism or JigSaw branch in PIRL can consistently improve the performance, verifying that they are compatible.

Paper 8: Data-Efficient Image Recognition with Contrastive Predictive Coding (CPC v2: Improved CPC evaluated on limited labelled data)

Previous

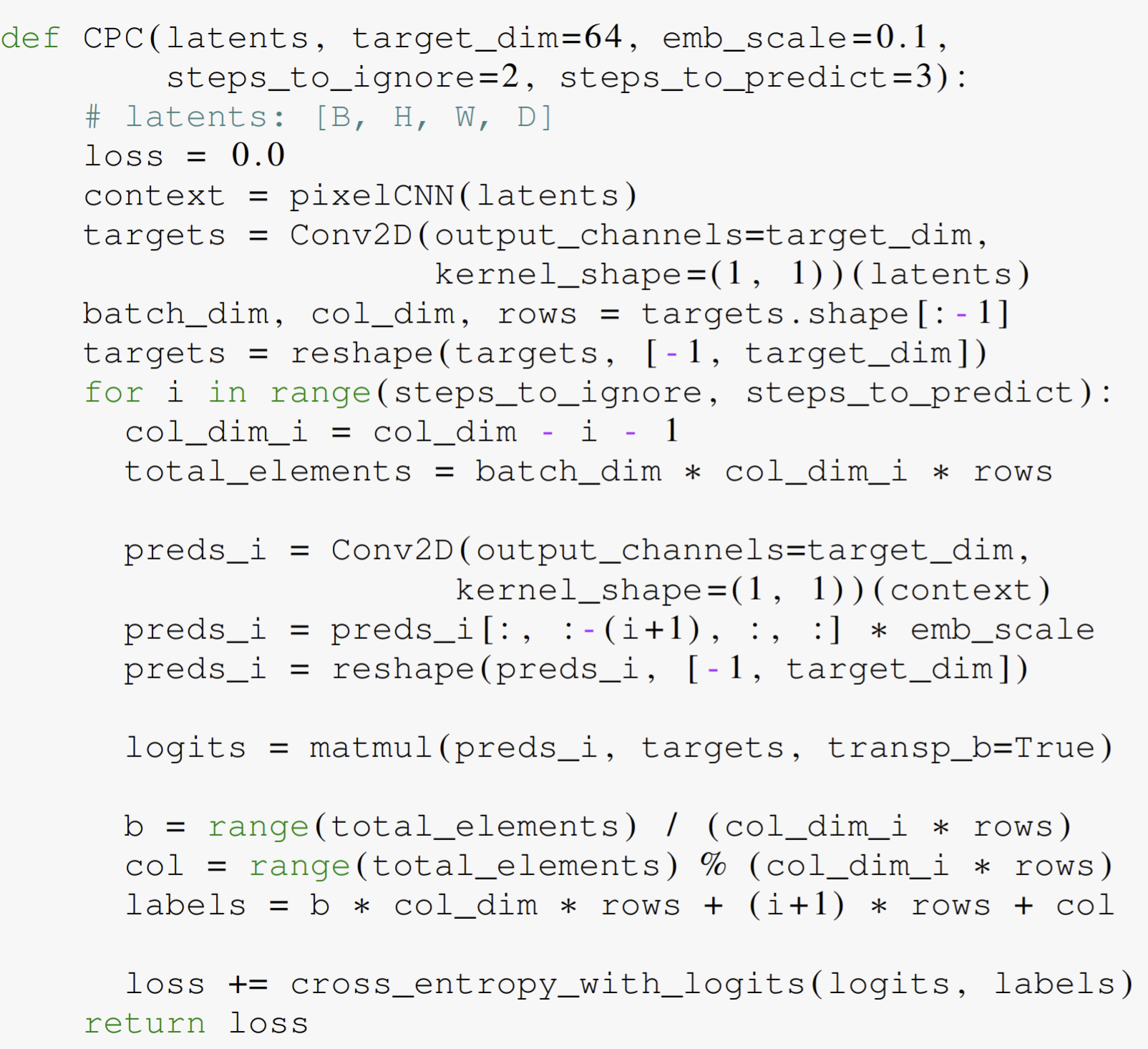

CPC only requires in its definition that observations be ordered along e.g. temporal or spatial dimensions. It learns representations by training neural networks to predict the representations of future observations from those of past ones.

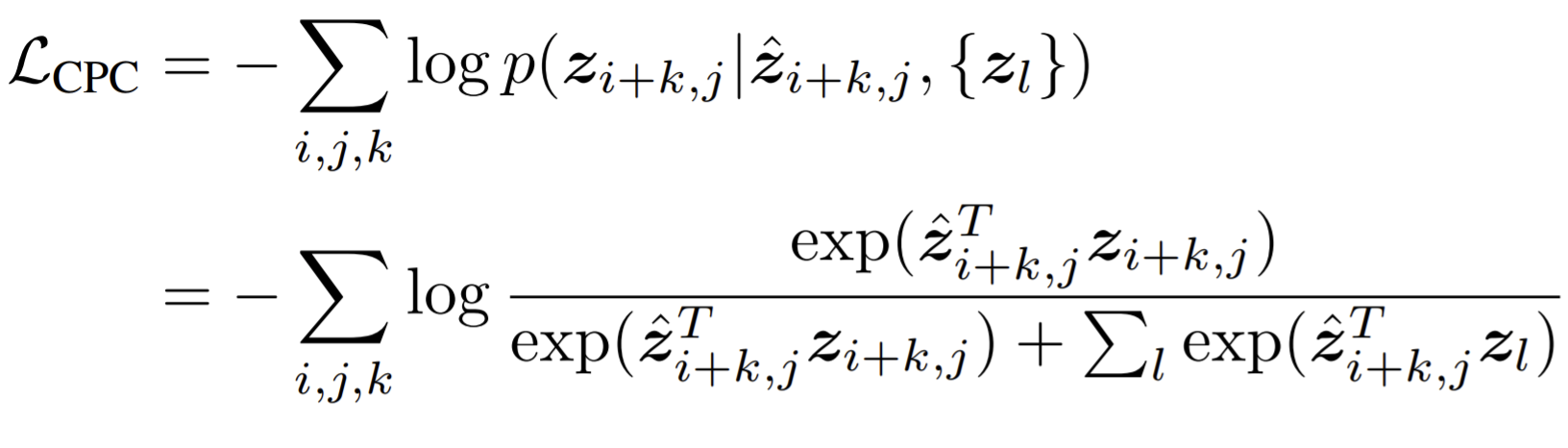

Loss

The loss is inspired by NCE, called as InfoNCE.

The negative samples \(\{z_l\}\) are taken from other locations in the image and other images in the mini-batch.

AMDIM is most similar to CPC in that it makes predictions across space, but differs in that it also predicts representations across layers in the model.

For improving data efficiency , one way is label-propogation.

- a classifier is trained on a subset of labeled data

- then used to label parts of the unlabeled dataset

Representation learning and label propagation have been shown to be complementary and can be combined to great effect (Zhai et al., 2019).

This work focus on representation learning.

What

- hypothesize that data-efficient recognition is enabled by representations which make the variability in natural signals more predictable

- removing low-level cues which might lead to degenerate solutions.

How

- Pretrain the encoder by CPC on local patches, and during test, apply the encoder on the entire image.

- Evaluation

- Linear classification: mean pooling followed by a single linear layer as the classifier. Use cross-entropy loss.

- Efficient classification: fix / fine tune the pretrained encoder, and then train the classifier (ResNet-33). Use a smaller learning rate and early-stopping for fine tuning incase the encoder deviates too much from the solution by the CPC objective.

- transfer learning: transfer the pretrained encoder to faster-RCNN to do classification. This is a multi-task.

- supervised training: directly train classifier by the input data, use cross-entropy loss.

Experiments

- Compare \(1\%\) supervised DNN based on CPC encoder with Semi-supervised methods and then supervised ResNets.

From CPC v1 to CPC v2

- Four axes for model capacity

- increasing depth and width: \(+5\%\). $+2% $ Top-1 accuracy with larger patches.

- improves training efficiency by importing layer normalization: can reclaim much of batch normalization’s training efficiency by using layer normalization (\(+2\%\) accuracy)

- making predictions in all four direction: Additional predictions tasks incrementally increased accuracy (adding bottom-up predictions: +2% accuracy; using all four spatial directions: +2.5% accuracy).

- perform patch-based augmentation: ‘color dropping’ (+3% accuracy); adding a fixed, generic augmentation scheme using the primitives from (shearing, rotation etc.), as well as random elastic deformations and color transforms +4.5% accuracy in total.

Efficient image classification

- fine-tune the entire stack hψ ◦ fθ for the supervised objective, for a small number of epochs (chosen by cross-validation)

- with only 1% of the labels, our classifier surpasses the supervised baseline given 5% of the labels

- the family of ResNet-50, -101, and -200 architectures are designed for supervised learning, and their capacity is calibrated for the amount of training signal present in ImageNet labels; larger architectures only run a greater risk of overfitting.

- fine-tuned representations yield only marginal gains over fixed ones

- we find that CPC provides gains in data efficiency that were previously unseen from representation learning methods, and rival the performance of the more elaborate label-propagation algorithms.

Transfer learning: image detection on PASCAL VOC 2007

- unsupervised pre-training surpasses supervised pretraining for transfer learning

Conclusion

- images are far from the only domain where unsupervised representation learning is important.

Paper 9: Momentum Contrast for Unsupervised Visual Representation Learning (MoCo, see also MoCo v2)

Have read MoCo before, here only list MoCo v2.

Previous

- Momentum Contrast (MoCo) shows that unsupervised pre-training can surpass its ImageNet-supervised counterpart in multiple detection and segmentation tasks.

- the negative keys are maintained in a queue, and only the queries and positive keys are encoded in each training batch.

- MoCo decouples the batch size from the number of negatives.

- SimCLR further reduces the gap in linear classifier performance between unsupervised and supervised pre-training representations.

- In an end-to-end mechanism, the negative keys are from the same batch and updated endto-end by back-propagation. SimCLR is based on this mechanism and requires a large batch to provide a large set of negatives.

What

- verify the effectiveness of two of SimCLR’s design improvements by implementing them in the MoCo framework

- using an MLP projection head and more data augmentation—we establish stronger baselines that outperform SimCLR and do not require large training batches.

How

- Evaluation

- ImageNet linear classification: features are frozen and a supervised linear classifier is trained

- Transferring to VOC object detection: a Faster R-CNN detector (C4-backbone) is fine-tuned end-to-end on the VOC 07+12 trainval set and evaluated on the VOC 07 test set using the COCO suite of metrics

- Amending

- replace the fc head in MoCo with a 2-layer MLP head (hidden layer 2048-d, with ReLU). This MLP only influences the unsupervised training stage; the linear classification or transferring stage does not use this MLP head.

- including the blur augmentation because stronger color distortion in A simple framework for contrastive learning of visual representations has diminishing gains in our higher baselines.

Experiments

- pre-training with the MLP head improves from 60.6% to 62.9%. in contrast to the big leap on ImageNet, the detection gains are smaller

- linear classification accuracy is not monotonically related to transfer performance in detection

- large batches are not necessary for good accuracy, and state-of-the-art results can be made more accessible.

Paper 10: A Simple Framework for Contrastive Learning of Visual Representations (SimCLR). ICML 2020

https://github.com/google-research/simclr

Previous

- Many such approaches have relied on heuristics to design pretext tasks, which could limit the generality of the learned representations.

- Types of augmentations

- spatial/geometric transformation of data: cropping and resizing (with horizontal flipping), rotation and cutout

- appearance transformation, such as color distortion (including color dropping, brightness, contrast, saturation, hue), Gaussian blur, and Sobel filtering.

- it is not clear if the success of contrastive approaches is determined by the mutual information, or by the specific form of the contrastive loss

What

- composition of multiple data augmentations plays a critical role in defining effective predictive tasks

- introducing a learnable nonlinear transformation between the representation and the contrastive loss substantially improves the quality of the learned representations

- contrastive learning benefits from larger batch sizes and more training steps compared to supervised learning

- Representation learning with contrastive cross entropy loss benefits from normalized embeddings and an appropriately adjusted temperature parameter.

How

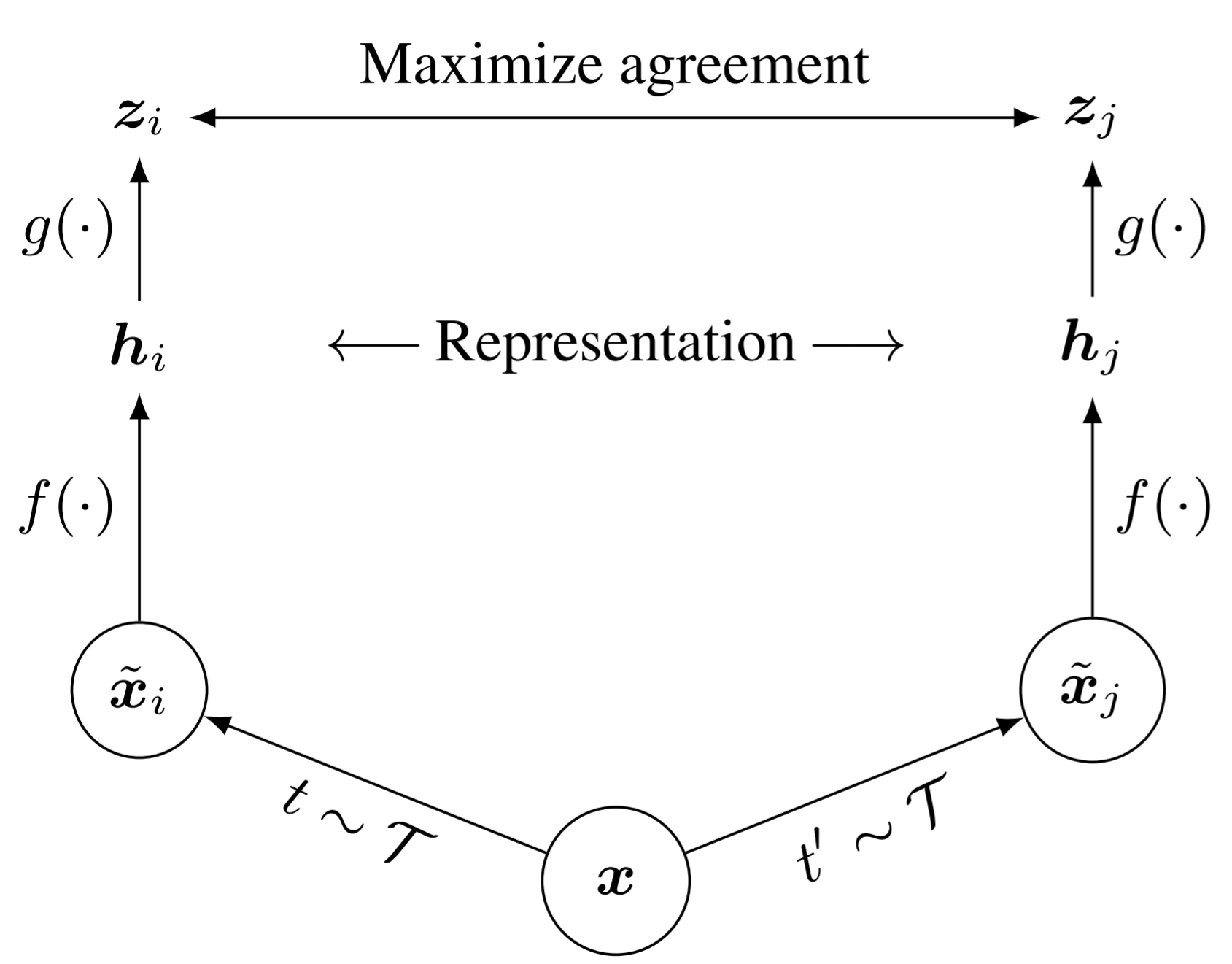

SimCLR learns representations by maximizing agreement between differently augmented views of the same data example via a contrastive loss in the latent space.

Where the \(x\) is the input data from one image, \(t\sim\mathcal{T}\) is the augmentation operator, and \(f(\cdot)\) is the encoder operator, \(g(\cdot)\) is the projection head. In downstream task, the \(h\) is used as the extracted features for classification.

- define the contrastive prediction task on pairs of augmented examples derived from the minibatch. Negative samples are not sampled explicitly.

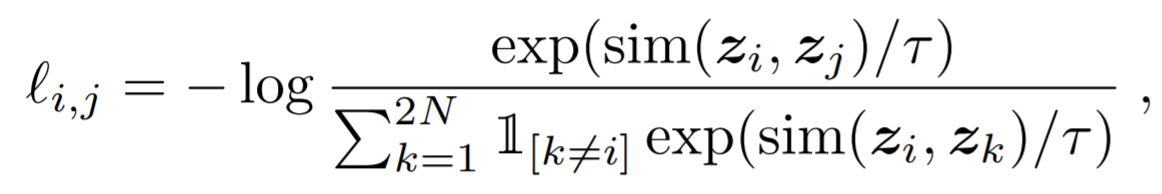

- Use dot product as the similarity measurement. The final loss is termed as NT-Xent (the normalized temperature-scaled cross entropy loss).

- vary the training batch size N from 256 to 8192. A batch size of 8192 gives us 16382 negative examples per positive pair from both augmentation views. Since large batch size with SGD will induce to unstable, use LARS optimizer instead.

- as positive pairs are computed in the same device, use global BN by aggregating BN mean and variance over all devices during the training.

- shuffling data examples across devices, replacing BN with layer norm

Evaluation protocol

- Linear classifier, and compare with SOTA on Semi-supervised and transfer learning.

- default settings

- augmentation: random crop and resize (with random flip), color distortions, and Gaussian blur

- use ResNet-50 as the base encoder network

- a 2-layer MLP projection head to project the representation to a 128-dimensional latent space

- train at batch size 4096 for 100 epochs

- linear warmup for the first 10 epochs, and decay the learning rate with the cosine decay schedule without restarts

Experiments

Data augmentation

- Found no single transformation suffices to learn good representations

- One composition of augmentations stands out: random cropping and random color distortion

- Though color histograms alone suffice to distinguish images, most patches from an image share a similar color distribution.

- Stronger color augmentation substantially improves the linear evaluation of the learned unsupervised models. But for supervised methods, the stronger color augmentation even hurt the performance.==> unsupervised contrastive learning benefits from stronger (color) data augmentation than supervised learning

Architectures for encoder and head

- Unsupervised contrastive learning benefits (more) from bigger models

- the gap between supervised models and linear classifiers trained on unsupervised models shrinks as the model size increases,

- suggesting that unsupervised learning benefits more from bigger models than its supervised counterpart.

- training logger does not improve supervised methods.

- the gap between supervised models and linear classifiers trained on unsupervised models shrinks as the model size increases,

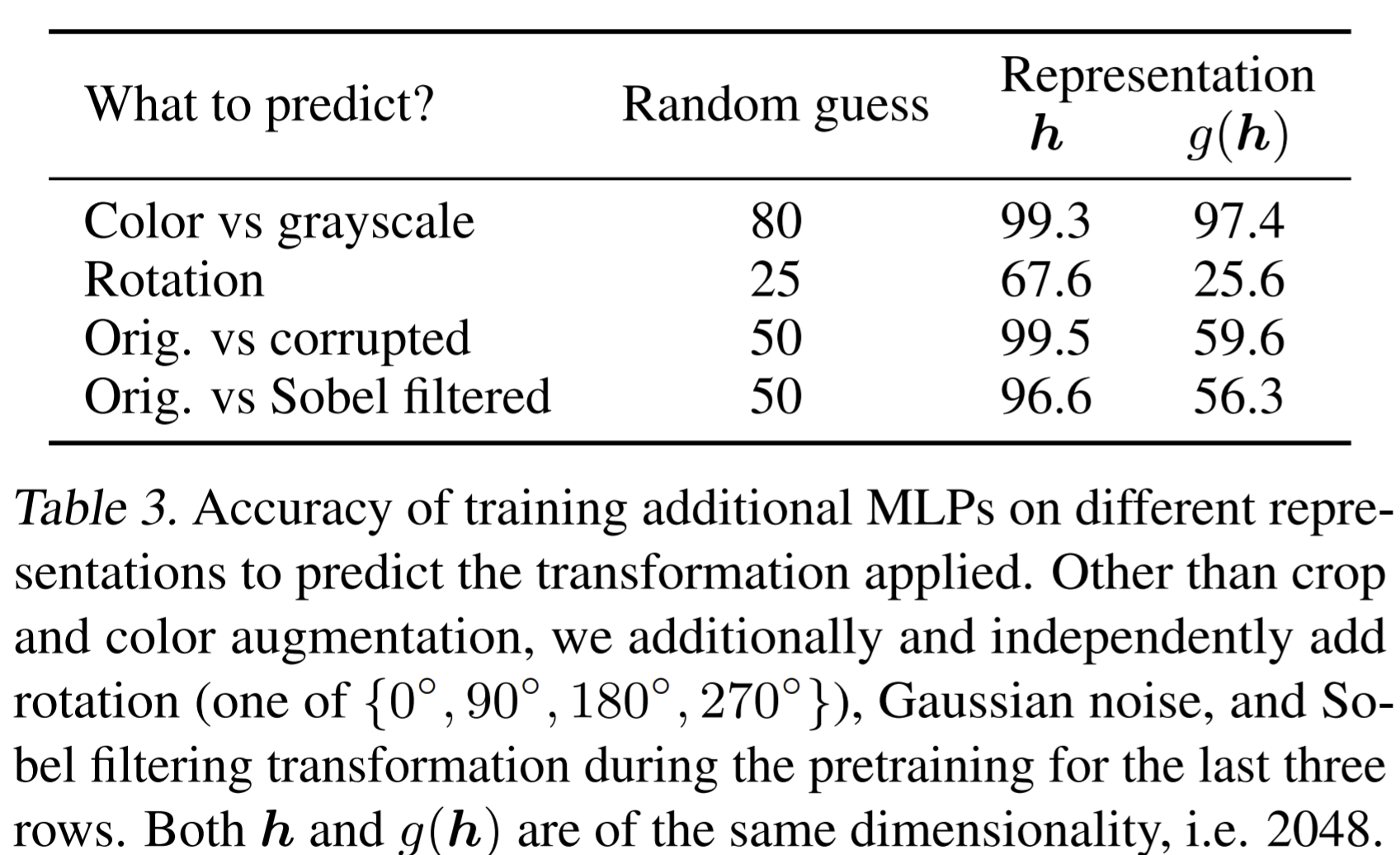

- A nonlinear projection head improves the representation quality of the layer before it

- a nonlinear projection is better than a linear projection (+3%), and much better than no projection (>10%)

- hidden layer before the projection head is a better representation than the layer after

- They explain this is due to loss of information induced by the contrastive loss

- \(g\) (projection head) can remove information that may be useful for the downstream task, such as the color or orientation of objects

Loss functions and batch size

- Normalized cross entropy loss with adjustable temperature works better than alternatives

- NT-Xent loss against such as logistic loss and margin loss.

- \(\ell_2\) normalization (i.e. cosine similarity) along with temperature effectively weights different examples, and an appropriate temperature can help the model learn from hard negatives

- unlike cross-entropy, other objective functions do not weigh the negatives by their relative hardness

- without normalization and proper temperature scaling, performance is significantly worse

- NT-Xent loss against such as logistic loss and margin loss.

- Contrastive learning benefits (more) from larger batch sizes and longer training

- when the number of training epochs is small (e.g. 100 epochs), larger batch sizes have a significant advantage over the smaller ones

- With more training steps/epochs, the gaps between different batch sizes decrease or disappear, provided the batches are randomly resampled

- Training longer also provides more negative examples, improving the results.

Learning rate and projection matrix

- square root learning rate scaling improves the performance for models trained with small batch sizes and in smaller number of epoch

- The linear projection matrix for computing \(z\) is approximately low rank, indicating by the plot of eigenvalues.

The way to train downstream classifier

attaching the linear classifier on top of the base encoder (with a stop_gradient on the input to linear classifier to prevent the label information from influencing the encoder) and train them simultaneously during the pretraining achieves similar performance

The best temperature parameter

- the optimal temperature in {0.1, 0.5, 1.0} is 0.5 and seems consistent regardless of the batch sizes.

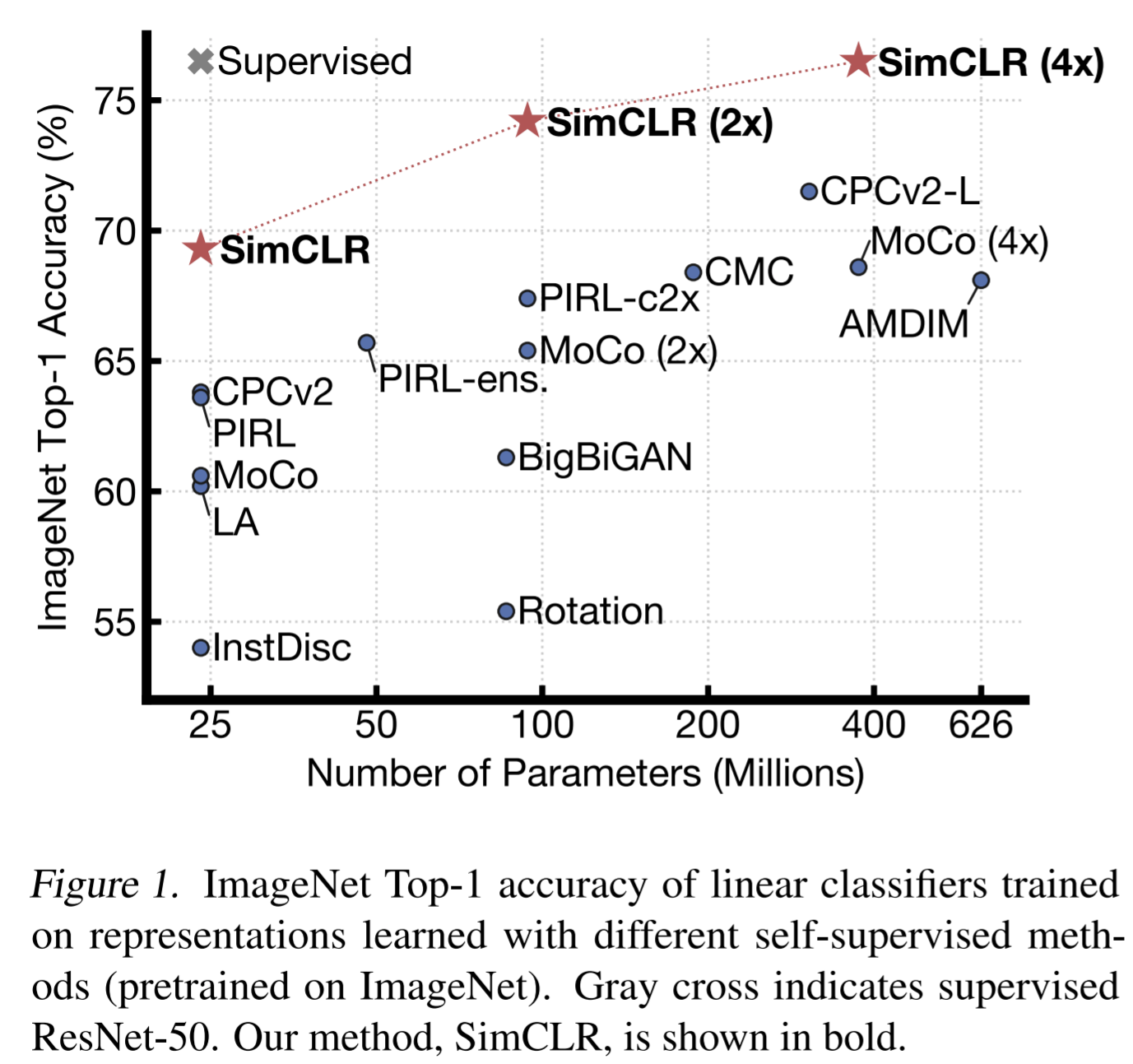

Compared with SOTA

- fine-tuning our pretrained ResNet-50 (2×, 4×) on full ImageNet are also significantly better then training from scratch (up to 2%)

- our self-supervised model significantly outperforms the supervised baseline on 5 datasets, whereas the supervised baseline is superior on only 2

Paper 11: Big Self-Supervised Models are Strong Semi-Supervised Learners (SimCLRv2) ArXiv 2020

Previous

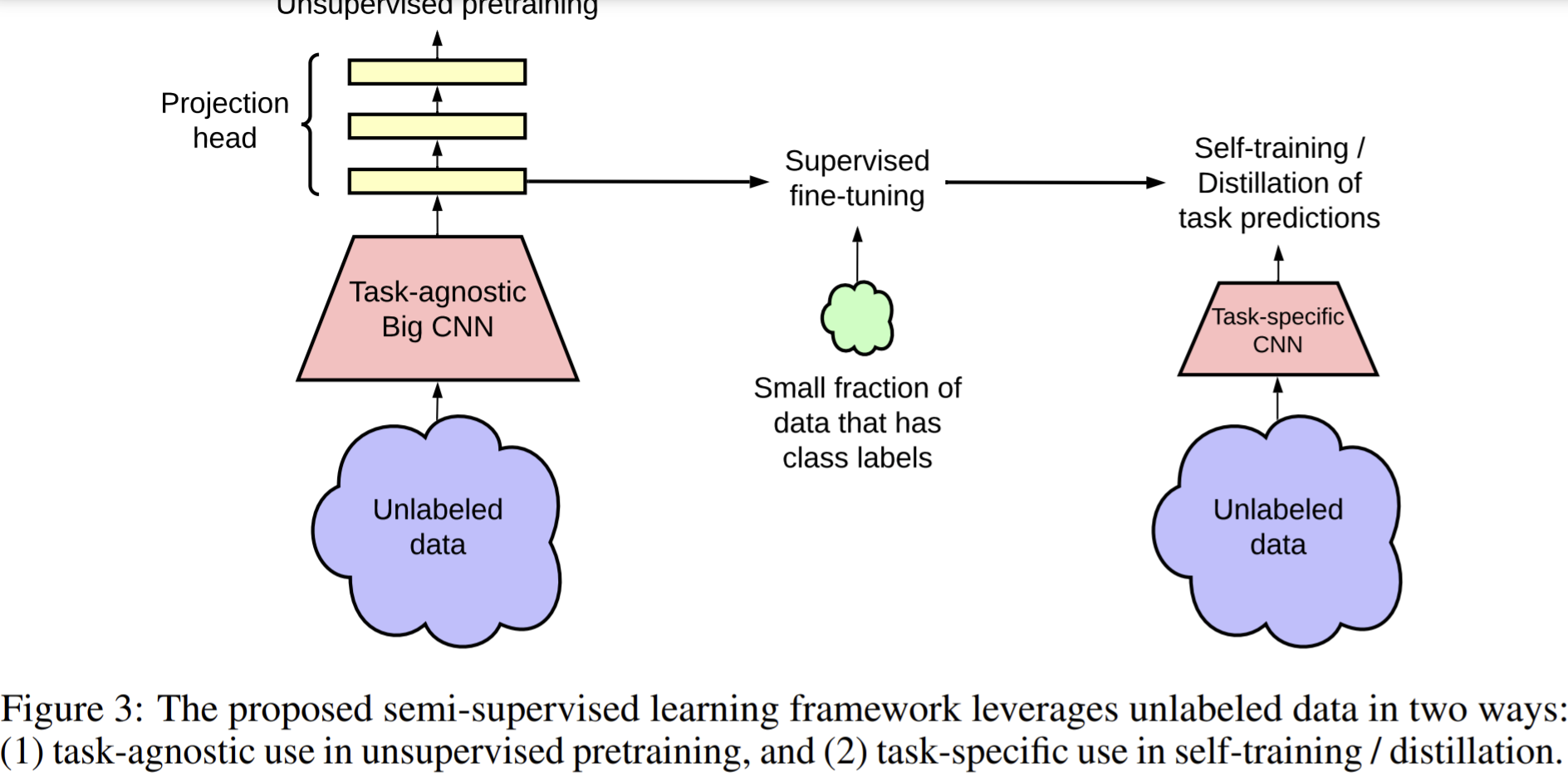

- semi-supervised learning involves unsupervised or self-supervised pretraining. It leverages unlabeled data in a task-agnostic way during pretraining, as the supervised labels are only used during fine-tuning.

- An alternative approach, directly leverages unlabeled data during supervised learning, as a form of regularization. uses unlabeled data in a task-specific way to encourage class label prediction consistency on unlabeled data among different models or under different data augmentations.

What

- Found the fewer the labels, the more this approach (task-agnostic use of unlabeled data) benefits from a bigger network

- The proposed semi-supervised learning algorithm in three steps:

- unsupervised pretraining of a big ResNet model using SimCLRv2,

- supervised fine-tuning on a few labeled examples,

- and distillation with unlabeled examples for refining and transferring the task-specific knowledge.

- make use of unlabeled data for a second time to encourage the student network to mimic the teacher network’s label predictions

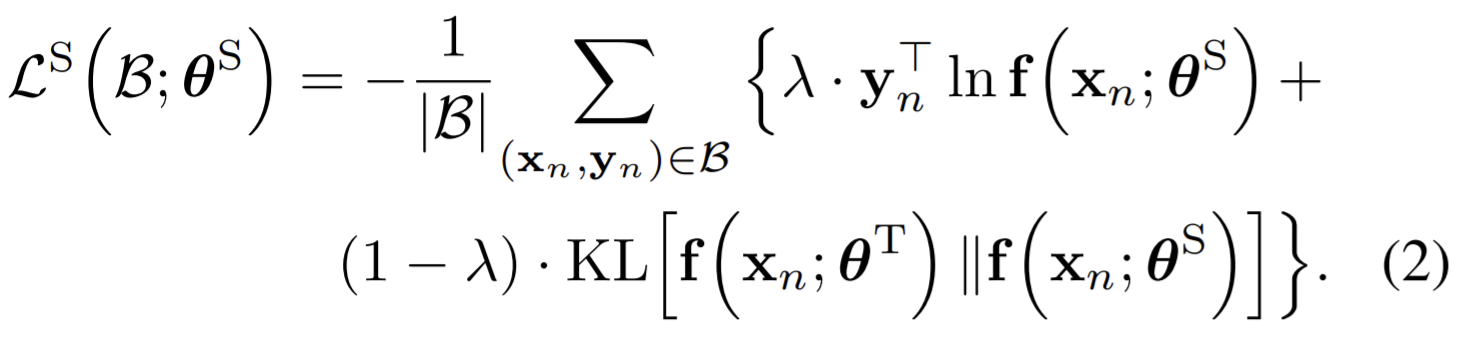

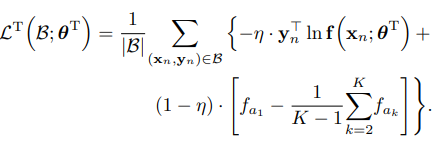

How

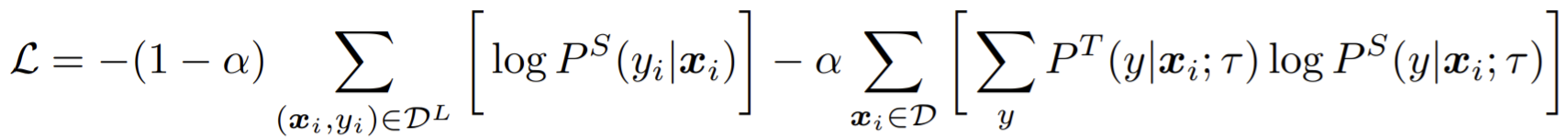

- train the student network by the labels predicted by the teacher network, also can add some real labels, while the student and teacher network are both trained by unlabeled data.

- SimCLR v2

- With bigger backbone (encoder): The largest model we train is a 152-layer ResNet [25] with 3× wider channels and selective kernels (SK). From ResNet-50 to ResNet-152 (3×+SK), we obtain a 29% relative improvement in top-1 accuracy when fine-tuned on 1% of labeled examples

- Increase the capacity of the projection head by making it deeper. Use a 3-layer projection head and fine tuning from the 1st layer of projection head. It results in as much as 14% relative improvement in top-1 accuracy when fine-tuned on 1% of labeled examples.

- Incorporate the memory mechanism from MoCo. It yields an improvement of ∼1% for linear evaluation as well as when fine-tuning on 1% of labeled examples when the SimCLR is trained in larger batch size.

- Fine-tuning

- Instead of throwing away the projection head directly, they fine-tune the model from a middle layer of the projection head, instead of the input layer of the projection head as in SimCLR.

- Self-training

- leverage the unlabeled data directly for the target task

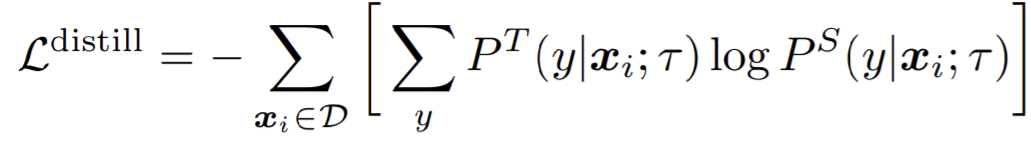

- minimize the following distillation loss where no real labels are used:

- during distillation the teacher network is fixed.

- If do it in semi-supervised:

- The student model can be the same as the teacher or be smaller.

Experiments

- Benchmarks: ImageNet ILSVRC-2012. only a randomly sub-sampled 1% (12811) or 10% (128116) of images are associated with labels.

- with a batch size of 4096 and global batch normalization, for total of 800 epochs

- The memory buffer is set to 64K, use the same augmentations as in SimCLR v1, namely random crop, color distortion, and Gaussian blur.

- Two student networks, one is the same as the teacher, the other is smaller than the teacher to test the self-distillation and big-to-small distillation. Only random crop and horizontal flips of training images are applied during fine-tuning and distillation.

Bigger models are more label-efficient

- train ResNet by varying width and depth as well as whether or not to use selective kernels (SK). If use SK, they use the ResNet-D version.

- Use SK will cause larger model size.

- increasing width and depth, as well as using SK, all improve the performance. But the benefits of width will plateau.

- bigger models are more label-efficient for both supervised and semi-supervised learning, but gains appear to be larger for semi-supervised learning.

Bigger/deeper projection heads improve representation learning

- using a deeper projection head during pretraining is better when fine-tuning from the optimal layer of projection head, and this optimal layer is typically the first layer of projection head rather than the input (0th layer).

- when using bigger ResNets, the improvements from having a deeper projection head are smaller.

- it is possible that increasing the depth of the projection head has limited effect when the projection head is already relatively wide.

- Correlation is higher when fine-tuning from the optimal middle layer of the projection head than when fine-tuning from the projection head input, which indicates the accuracy of fine-tuned models is more related with the optimal middle layer of the projection head.

Distillation using unlabeled data improves semi-supervised learning

Two loss: distillation loss and an ordinary supervised cross-entropy loss on the labels.

Using the distillation loss alone works almost as well as balancing distillation and label losses when the labeled fraction is small (1%, 10%).

To get the best performance for smaller ResNets, the big model is self-distilled before distilling it to smaller models

when the student model has a smaller architecture than the teacher model, it improves the model efficiency by transferring task-specific knowledge to a student model; even when the student model has the same architecture as the teacher model (excluding the projection head after ResNet encoder), self-distillation can still meaningfully improve the semi-supervised learning performance.

task-agnostically learned general representations can be distilled into a more specialized and compact network using unlabeled examples.

The benefits are larger when (1) regularization techniques (such as augmentation, label smoothing) are used, or (2) the model is pretrained using unlabeled examples

a better fine-tuned model (measured by its top-1 accuracy), regardless their projection head settings, is a better teacher for transferring task specific knowledge to the student using unlabeled data.

Paper 12: Unsupervised Learning of Visual Features by Contrasting Cluster Assignments (SwAV) ArXiv 2020

https://github.com/facebookresearch/swav

Previous

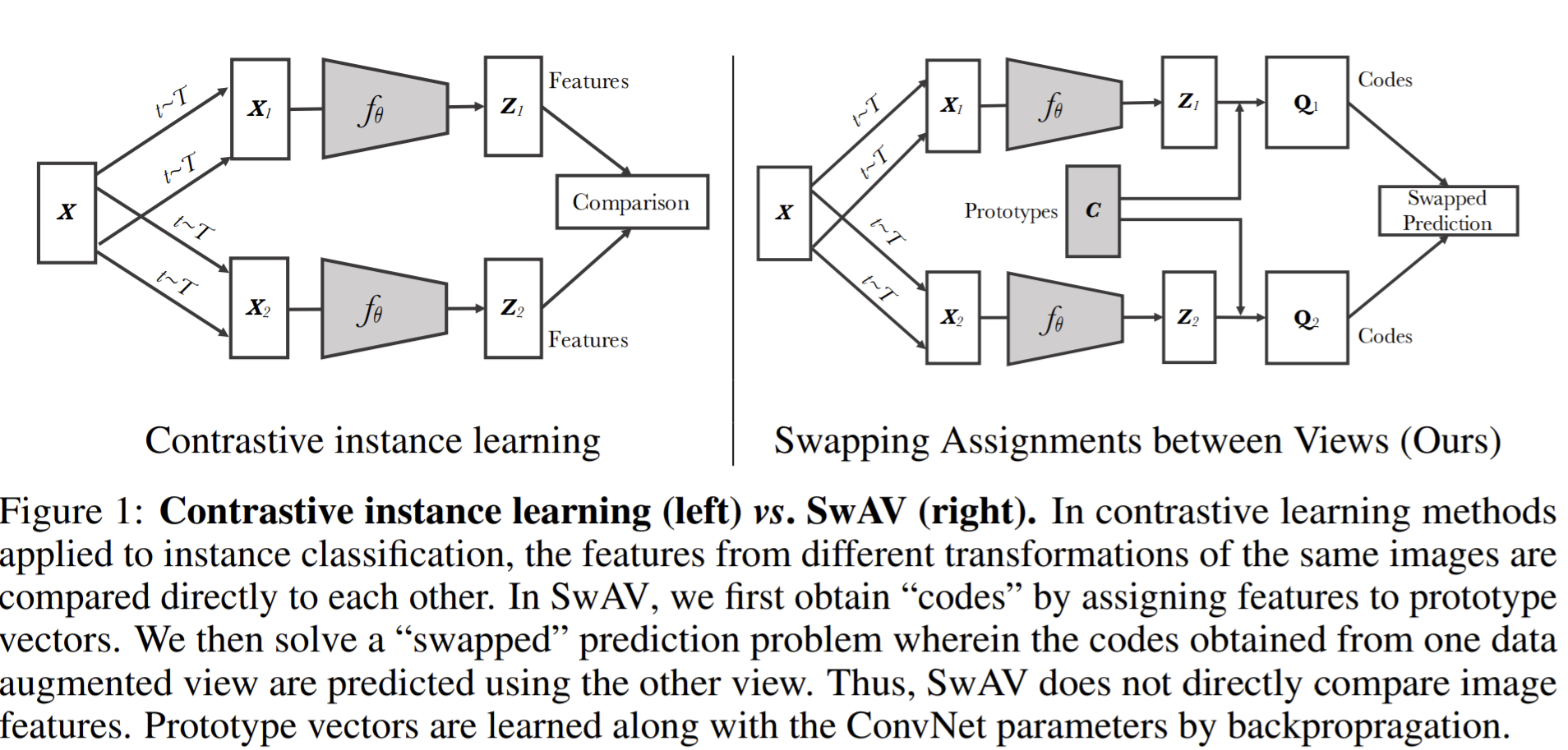

- contrastive methods typically work online and rely on a large number of explicit pairwise feature comparisons, which is computationally expensive.

- In contrastive methods, the loss function and augmentations both contribute to the performance.

- most implementations approximate the loss by reducing the number of comparisons to random subsets of images during training

- An alternative to approximate the loss is to approximate the task—that is to relax the instance discrimination problem. E.g., clustering-based methods discriminate between groups of images with similar features instead of individual images. But it does not scale well with the dataset as it requires a pass over the entire dataset to form image “codes” (i.e., cluster assignments) that are used as targets during training.

- Amendment: They avoid comparing every pair of images by mapping the image features to a set of trainable prototype vectors.

- Typical clustering-based methods are offline in the sense that they alternate between a cluster assignment step where image features of the entire dataset are clustered, and a training step where the cluster assignments, i.e., “codes” are predicted for different image views.

What

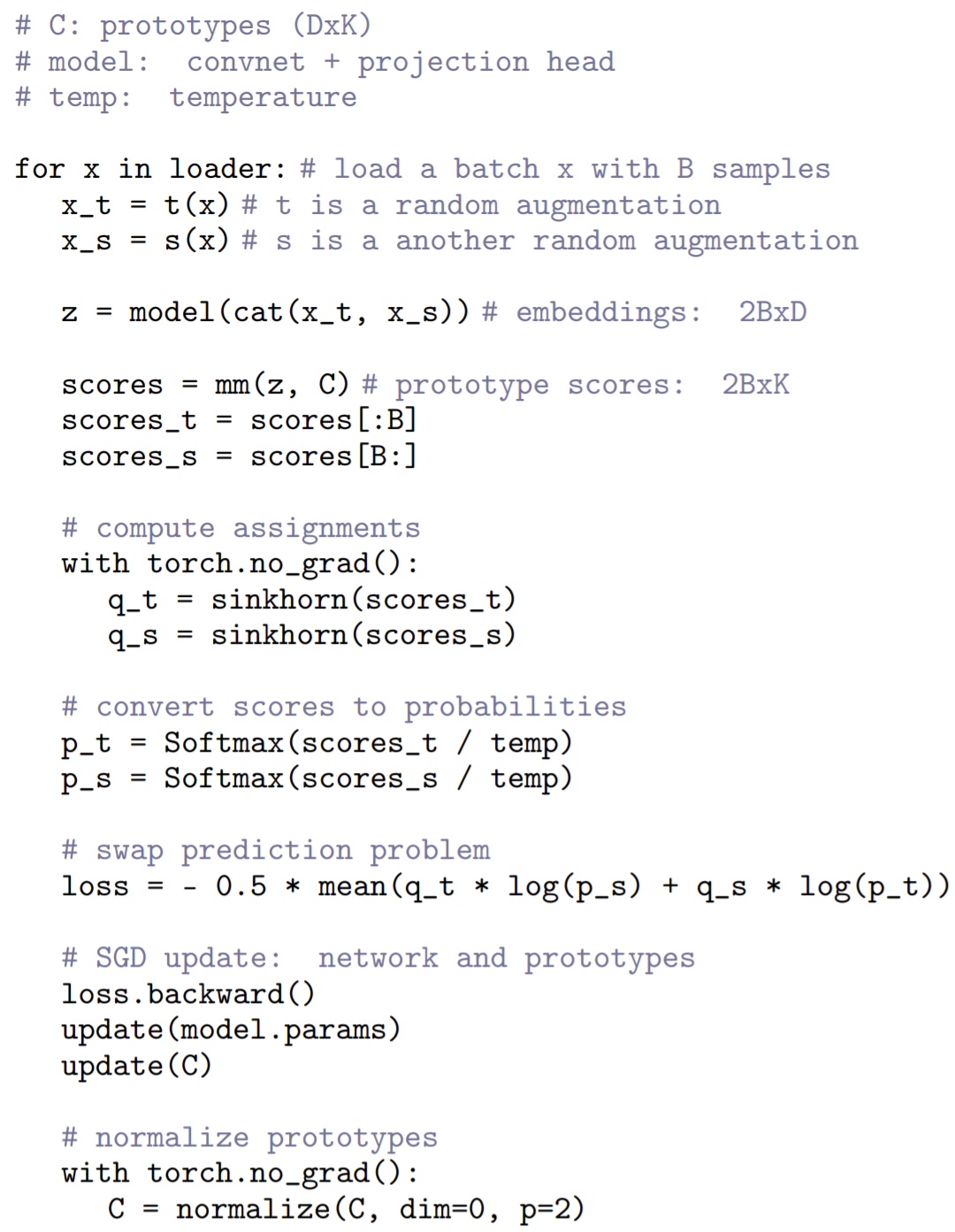

- propose an online algorithm, SwAV, that takes advantage of contrastive methods without requiring to compute pairwise comparisons. Their method can be interpreted as a way of contrasting between multiple image views by comparing their cluster assignments instead of their features.

- simultaneously clusters the data while enforcing consistency between cluster assignments produced for different augmentations (or “views”) of the same image

- predict the code of a view from the representation of another view, and called it as swapped assignments between multiple views of the same image (SwAV)

- memory efficient since it does not require a large memory bank or a special momentum network.

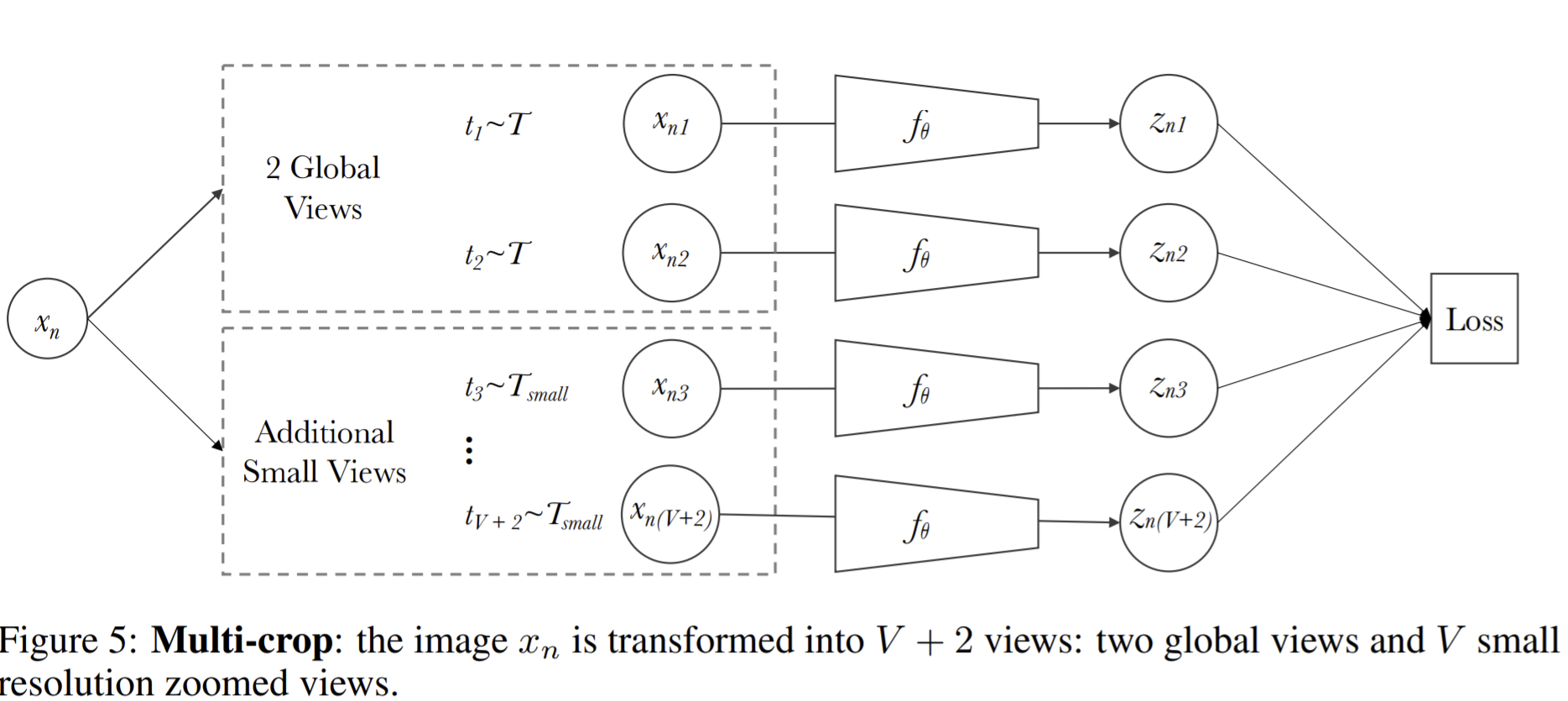

- Propose multi-crop as a new augmentation, uses a mix of views with different resolutions in place of two full-resolution views. It consists in simply sampling multiple random crops with two different sizes: a standard size and a smaller one.

- mapping small parts of a scene to more global views significantly boosts the performance.

- Use ResNet as the backbone and ImageNet as the benchmark.

How

Prototype vectors \(C\) are learned along with the ConvNet parameters by backpropragation, and they are mapped with the predicted \(z\) to search the codes \(Q\).

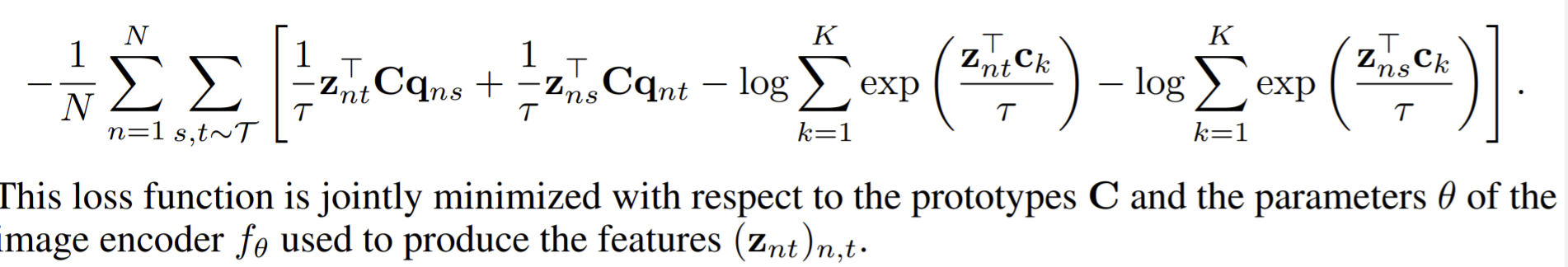

- For two image features \(z_t,z_s\), predict the codes \(q_t,q_s\), the the loss is \(L(z_t,z_s)=\ell(z_t,q_s)+\ell(z_s,q_t)\). \(\ell(z,q)\) measures the fit between features \(z\) and a code \(q\). \(\ell(z_t,q_s)=-\sum_k q_s^{(k)}\log p_t^{(k)}\), where \(p_t^{(k)}=\frac{\exp{(\frac{1}{\tau}}z_t^Tc_k)}{\sum_{k'}\exp{(\frac{1}{\tau}z_t^Tc_{k'}})}\).

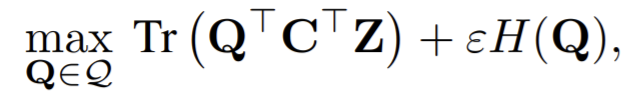

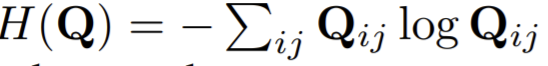

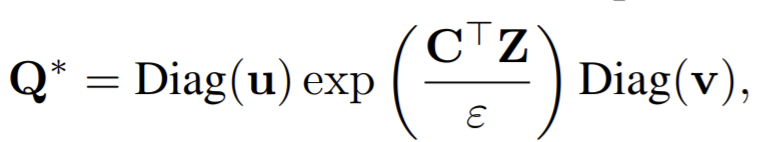

Online codes computing: to get the soft predicted codes \(Q^*\)

- optimize \(Q\) to maximized the similarity between the features and the prototypes, i.e.,

- the entropy \(H(Q)\) controls the diversity of the codes, and a strong entropy regularization (i.e. using a high ε) generally leads to a trivial solution where all samples collapse into an unique representation and are all assigned uniformely to all prototypes. They keep \(\varepsilon\) small.

- Enforce that on average each prototype is selected at least \(\frac{B}{K}\) times in the batch.

- In online version, the continuous codes work better than discrete codes (rounding the continuous codes)

- An explanation is that the rounding needed to obtain discrete codes is a more aggressive optimization step than gradient updates. While it makes the model converge rapidly, it leads to a worse solution.

- The soft codes \(Q^*\) is

, where \(u,v\) are renormalization vectors in \(\mathbb{R}^K, \mathbb{R}^B\) respectively (prototypes and feature space).

, where \(u,v\) are renormalization vectors in \(\mathbb{R}^K, \mathbb{R}^B\) respectively (prototypes and feature space). - In practice, we observe that using only 3 iterations is fast and sufficient to obtain good performance for solving \(Q^*\).

- When working with small batches, we use features from the previous batches to augment the size of \(Z\) to equally partition the batch into the \(K\) prototypes. Then, we only use the codes of the batch features in our training loss

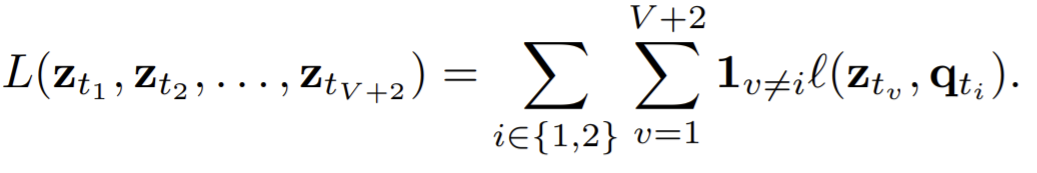

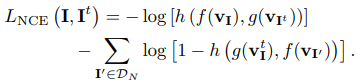

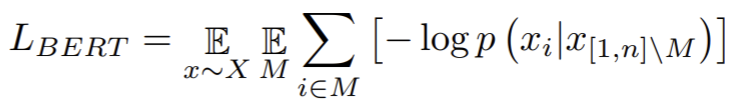

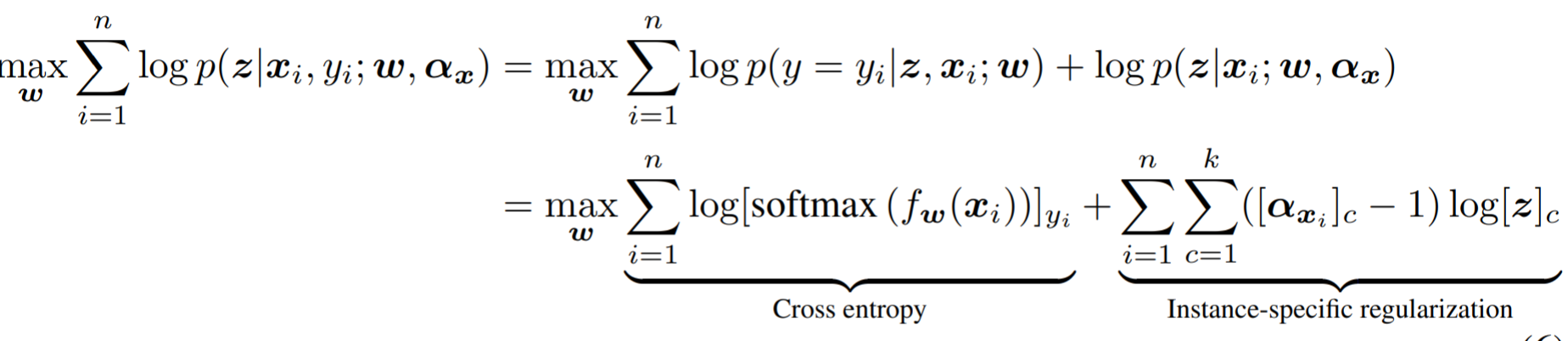

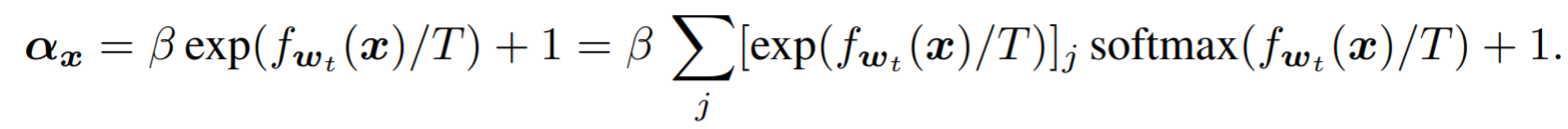

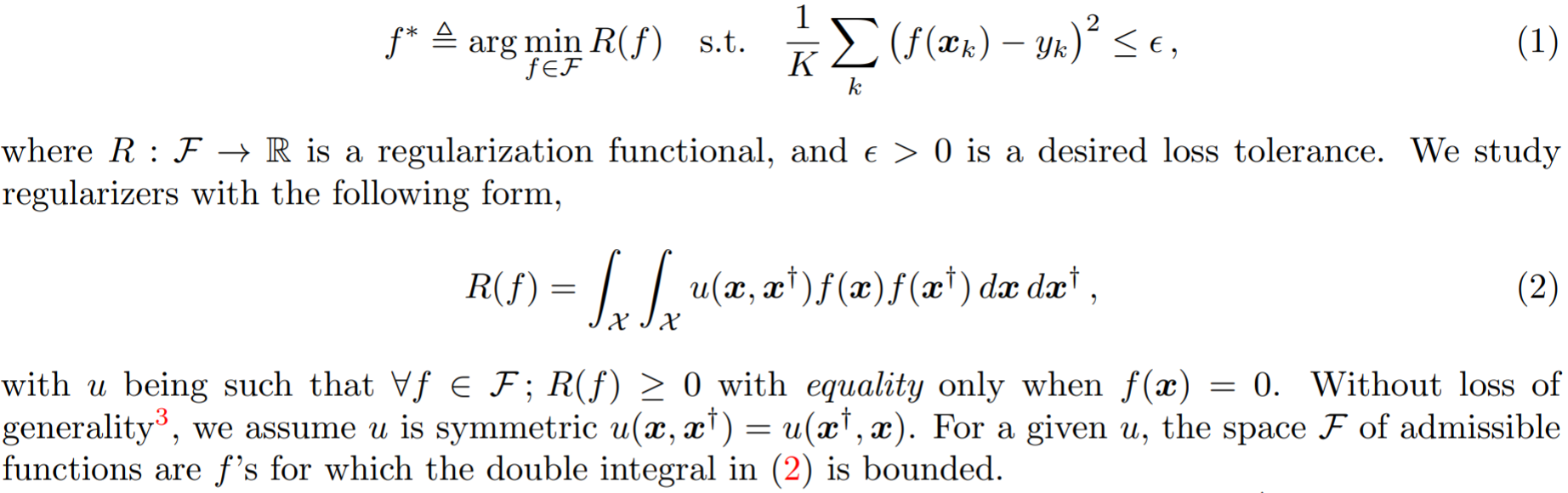

- optimize \(Q\) to maximized the similarity between the features and the prototypes, i.e.,